
Mathematical model of atmospheric motions From Wikipedia, the free encyclopedia

In atmospheric science, an atmospheric model is a mathematical model constructed around the full set of primitive, dynamical equations which govern atmospheric motions. It can supplement these equations with parameterizations for turbulent diffusion, radiation, moist processes (clouds and precipitation), heat exchange, soil, vegetation, surface water, the kinematic effects of terrain, and convection. Most atmospheric models are numerical, i.e. they discretize equations of motion. They can predict microscale phenomena such as tornadoes and boundary layer eddies, sub-microscale turbulent flow over buildings, as well as synoptic and global flows. The horizontal domain of a model is either global, covering the entire Earth (or other planetary body), or regional (limited-area), covering only part of the Earth. Atmospheric models also differ in how they compute vertical fluid motions; some types of models are thermotropic,[1] barotropic, hydrostatic, and non-hydrostatic. These model types are differentiated by their assumptions about the atmosphere, which must balance computational speed with the model's fidelity to the atmosphere it is simulating.

Forecasts are computed using mathematical equations for the physics and dynamics of the atmosphere. These equations are nonlinear and are impossible to solve exactly. Therefore, numerical methods obtain approximate solutions. Different models use different solution methods. Global models often use spectral methods for the horizontal dimensions and finite-difference methods for the vertical dimension, while regional models usually use finite-difference methods in all three dimensions. For specific locations, model output statistics use climate information, output from numerical weather prediction, and current surface weather observations to develop statistical relationships which account for model bias and resolution issues.

The main assumption made by the thermotropic model is that while the magnitude of the thermal wind may change, its direction does not change with respect to height, and thus the baroclinicity in the atmosphere can be simulated using the 500 mb (15 inHg) and 1,000 mb (30 inHg) geopotential height surfaces and the average thermal wind between them.[2][3]

Barotropic models assume the atmosphere is nearly barotropic, which means that the direction and speed of the geostrophic wind are independent of height. In other words, there is no vertical wind shear of the geostrophic wind. It also implies that thickness contours (a proxy for temperature) are parallel to upper level height contours. In this type of atmosphere, high and low pressure areas are centers of warm and cold temperature anomalies. Warm-core highs (such as the subtropical ridge and Bermuda-Azores high) and cold-core lows have strengthening winds with height, with the reverse true for cold-core highs (shallow arctic highs) and warm-core lows (such as tropical cyclones).[4] A barotropic model tries to solve a simplified form of atmospheric dynamics based on the assumption that the atmosphere is in geostrophic balance; that is, that the Rossby number of the air in the atmosphere is small.[5] If the assumption is made that the atmosphere is divergence-free, the curl of the Euler equations reduces to the barotropic vorticity equation. This latter equation can be solved over a single layer of the atmosphere. Since the atmosphere at a height of approximately 5.5 kilometres (3.4 mi) is mostly divergence-free, the barotropic model best approximates the state of the atmosphere at a geopotential height corresponding to that altitude, which corresponds to the atmosphere's 500 mb (15 inHg) pressure surface.[6]
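For reference, the barotropic vorticity equation is commonly written in the form below. The symbols are not taken from the text above and are introduced here as assumptions: ζ is the relative vorticity, f the Coriolis parameter, **v** the non-divergent horizontal wind, ψ the streamfunction, and **k** the vertical unit vector.

```latex
\frac{D(\zeta + f)}{Dt}
  = \frac{\partial \zeta}{\partial t} + \mathbf{v}\cdot\nabla(\zeta + f) = 0,
\qquad
\zeta = \nabla^{2}\psi,
\quad
\mathbf{v} = \mathbf{k}\times\nabla\psi
```

In this form the absolute vorticity ζ + f is conserved following the flow, which is why a single layer is enough to step the equation forward.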

Hydrostatic models filter out vertically moving acoustic waves from the vertical momentum equation, which allows a significantly longer time step within the model's run. This is known as the hydrostatic approximation. Hydrostatic models use either pressure or sigma-pressure vertical coordinates. Pressure coordinates intersect topography while sigma coordinates follow the contour of the land. The hydrostatic assumption is reasonable as long as the horizontal grid spacing is not small; at fine grid spacings the assumption fails. Models which use the entire vertical momentum equation are known as nonhydrostatic. A nonhydrostatic model can be solved anelastically, meaning it solves the complete continuity equation for air assuming it is incompressible, or elastically, meaning it solves the complete continuity equation for air and is fully compressible. Nonhydrostatic models use altitude or sigma-altitude for their vertical coordinates. Altitude coordinates can intersect land while sigma-altitude coordinates follow the contours of the land.[7]
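As a sketch of what the approximation does, the vertical momentum equation (written here without Coriolis and friction terms, a simplifying assumption) and its hydrostatic limit can be compared. The symbols are assumptions introduced for illustration: w is vertical velocity, ρ density, p pressure, z height, and g gravitational acceleration.

```latex
\frac{Dw}{Dt} = -\frac{1}{\rho}\frac{\partial p}{\partial z} - g
\quad\longrightarrow\quad
\frac{\partial p}{\partial z} = -\rho g
```

Dropping the vertical acceleration term on the left turns a prognostic equation for w into a diagnostic balance between the pressure gradient and gravity.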

The history of numerical weather prediction began in the 1920s through the efforts of Lewis Fry Richardson who utilized procedures developed by Vilhelm Bjerknes.[8][9] It was not until the advent of the computer and computer simulation that computation time was reduced to less than the forecast period itself. ENIAC created the first computer forecasts in 1950,[6][10] and more powerful computers later increased the size of initial datasets and included more complicated versions of the equations of motion.[11] In 1966, West Germany and the United States began producing operational forecasts based on primitive-equation models, followed by the United Kingdom in 1972 and Australia in 1977.[8][12] The development of global forecasting models led to the first climate models.[13][14] The development of limited area (regional) models facilitated advances in forecasting the tracks of tropical cyclones as well as air quality in the 1970s and 1980s.[15][16]

Because the output of forecast models based on atmospheric dynamics requires corrections near ground level, model output statistics (MOS) were developed in the 1970s and 1980s for individual forecast points (locations).[17][18] Even with the increasing power of supercomputers, the forecast skill of numerical weather models only extends to about two weeks into the future, since the density and quality of observations—together with the chaotic nature of the partial differential equations used to calculate the forecast—introduce errors which double every five days.[19][20] The use of model ensemble forecasts since the 1990s helps to define the forecast uncertainty and extend weather forecasting farther into the future than otherwise possible.[21][22][23]
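The statement that errors double every five days can be turned into simple arithmetic. The sketch below assumes pure exponential growth with a fixed doubling time, which is an idealization; the function name and the unit-free error measure are hypothetical.

```python
def error_after(initial_error: float, days: float,
                doubling_time_days: float = 5.0) -> float:
    """Return the error after `days`, assuming it doubles every
    `doubling_time_days` (idealized exponential growth)."""
    return initial_error * 2.0 ** (days / doubling_time_days)


if __name__ == "__main__":
    # Growth of a unit initial error over a two-week forecast.
    for day in (0, 5, 10, 14):
        print(day, round(error_after(1.0, day), 2))
```

Under this assumption an initial error grows roughly sevenfold over two weeks, which illustrates why skill fades on that time scale.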

The atmosphere is a fluid. As such, the idea of numerical weather prediction is to sample the state of the fluid at a given time and use the equations of fluid dynamics and thermodynamics to estimate the state of the fluid at some time in the future. The process of entering observation data into the model to generate initial conditions is called initialization. On land, terrain maps available at resolutions down to 1 kilometer (0.6 mi) globally are used to help model atmospheric circulations within regions of rugged topography, in order to better depict features such as downslope winds, mountain waves and related cloudiness that affects incoming solar radiation.[24] The main inputs from country-based weather services are observations from devices (called radiosondes) in weather balloons that measure various atmospheric parameters and transmit them to a fixed receiver, as well as from weather satellites. The World Meteorological Organization acts to standardize the instrumentation, observing practices and timing of these observations worldwide. Stations either report hourly in METAR reports,[25] or every six hours in SYNOP reports.[26] These observations are irregularly spaced, so they are processed by data assimilation and objective analysis methods, which perform quality control and obtain values at locations usable by the model's mathematical algorithms.[27] The data are then used in the model as the starting point for a forecast.[28]
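To illustrate how irregularly spaced observations can be mapped onto model grid points, the sketch below uses inverse-distance weighting. This is a deliberately simple stand-in chosen for illustration, not the data assimilation scheme of any particular forecasting centre; the function names and the flat (x, y) geometry are assumptions.

```python
import math


def idw_value(obs, x, y, power=2.0):
    """Estimate a value at (x, y) from observations given as
    (x_i, y_i, value_i) tuples, weighting each by 1 / distance**power."""
    if not obs:
        raise ValueError("at least one observation is required")
    weighted_sum = 0.0
    weight_total = 0.0
    for ox, oy, value in obs:
        dist = math.hypot(x - ox, y - oy)
        if dist == 0.0:
            return value  # grid point coincides with an observation
        weight = 1.0 / dist ** power
        weighted_sum += weight * value
        weight_total += weight
    return weighted_sum / weight_total


def analyse_to_grid(obs, xs, ys, power=2.0):
    """Return a 2-D list (rows follow ys) of values on the regular grid."""
    return [[idw_value(obs, x, y, power) for x in xs] for y in ys]
```

Calling `analyse_to_grid(obs, xs, ys)` with station tuples and the grid coordinates returns a regular field that a model could take as a starting point; quality control of the observations is not shown.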

A variety of methods are used to gather observational data for use in numerical models. Sites launch radiosondes in weather balloons which rise through the troposphere and well into the stratosphere.[29] Information from weather satellites is used where traditional data sources are not available. Commerce provides pilot reports along aircraft routes[30] and ship reports along shipping routes.[31] Research projects use reconnaissance aircraft to fly in and around weather systems of interest, such as tropical cyclones.[32][33] Reconnaissance aircraft are also flown over the open oceans during the cold season into systems which cause significant uncertainty in forecast guidance, or are expected to be of high impact from three to seven days into the future over the downstream continent.[34] Sea ice began to be initialized in forecast models in 1971.[35] Efforts to involve sea surface temperature in model initialization began in 1972 due to its role in modulating weather in higher latitudes of the Pacific.[36]

A model is a computer program that produces meteorological information for future times at given locations and altitudes. Within any model is a set of equations, known as the primitive equations, used to predict the future state of the atmosphere.[37] These equations are initialized from the analysis data and rates of change are determined. These rates of change predict the state of the atmosphere a short time into the future, with each time increment known as a time step. The equations are then applied to this new atmospheric state to find new rates of change, and these new rates of change predict the atmosphere at a yet further time into the future. Time stepping is repeated until the solution reaches the desired forecast time. The length of the time step chosen within the model is related to the distance between the points on the computational grid, and is chosen to maintain numerical stability.[38] Time steps for global models are on the order of tens of minutes,[39] while time steps for regional models are between one and four minutes.[40] The global models are run at varying times into the future. The UKMET Unified model is run six days into the future,[41] the European Centre for Medium-Range Weather Forecasts model is run out to 10 days into the future,[42] while the Global Forecast System model run by the Environmental Modeling Center is run 16 days into the future.[43]
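The time-stepping loop and the link between time step and grid spacing can be sketched with a one-dimensional advection problem. The example assumes a first-order upwind scheme on a periodic domain and uses the Courant-Friedrichs-Lewy (CFL) condition as the stability limit; real models use far more elaborate schemes, and all names here are hypothetical.

```python
def max_stable_dt(dx_m: float, speed_m_s: float,
                  courant_max: float = 1.0) -> float:
    """Largest time step (s) allowed by the CFL condition c*dt/dx <= courant_max."""
    return courant_max * dx_m / speed_m_s


def step_upwind(field, speed_m_s, dx_m, dt_s):
    """Advance the field by one time step (speed > 0, periodic boundaries)."""
    courant = speed_m_s * dt_s / dx_m
    if not 0.0 <= courant <= 1.0:
        raise ValueError("time step violates the CFL stability limit")
    # field[i - 1] wraps to the last point when i == 0 (periodic domain).
    return [field[i] - courant * (field[i] - field[i - 1])
            for i in range(len(field))]


def integrate(field, speed_m_s, dx_m, dt_s, forecast_s):
    """Repeat the time step until the desired forecast time is reached."""
    for _ in range(int(round(forecast_s / dt_s))):
        field = step_upwind(field, speed_m_s, dx_m, dt_s)
    return field
```

Halving the grid spacing halves the largest stable time step in this scheme, which is one reason finer regional grids are paired with shorter time steps than global grids.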

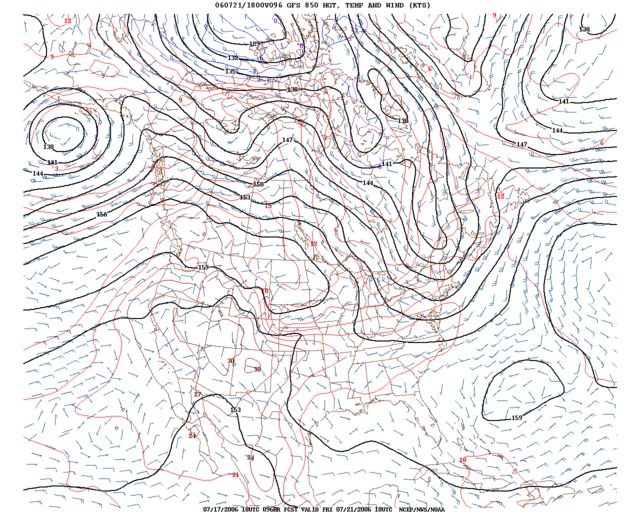

The equations used are nonlinear partial differential equations which are impossible to solve exactly through analytical methods,[44] with the exception of a few idealized cases.[45] Therefore, numerical methods obtain approximate solutions. Different models use different solution methods: some global models use spectral methods for the horizontal dimensions and finite difference methods for the vertical dimension, while regional models and other global models usually use finite-difference methods in all three dimensions.[44] The visual output produced by a model solution is known as a prognostic chart, or prog.[46]
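As a minimal illustration of a finite-difference method, the sketch below approximates a horizontal derivative with second-order centered differences on a periodic grid. The function name and the periodic boundary are assumptions made for the example; spectral methods would instead differentiate a series expansion of the field.

```python
import math


def centered_derivative(values, dx):
    """Approximate d(values)/dx on a periodic grid using
    (f[i+1] - f[i-1]) / (2 * dx) at each point."""
    n = len(values)
    return [(values[(i + 1) % n] - values[i - 1]) / (2.0 * dx)
            for i in range(n)]


if __name__ == "__main__":
    # Differentiate sin(x) on 64 points and compare with cos(x).
    n = 64
    dx = 2.0 * math.pi / n
    wave = [math.sin(i * dx) for i in range(n)]
    approx = centered_derivative(wave, dx)
    print(max(abs(approx[i] - math.cos(i * dx)) for i in range(n)))
```

The approximation error shrinks with the square of the grid spacing, so the result is only an approximate solution, as the text above states.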

Weather and climate model gridboxes have sides of between 5 kilometres (3.1 mi) and 300 kilometres (190 mi). A typical cumulus cloud has a scale of less than 1 kilometre (0.62 mi), and would require a grid even finer than this to be represented physically by the equations of fluid motion. Therefore, the processes that such clouds represent are parameterized by schemes of varying sophistication. In the earliest models, if a column of air in a model gridbox was unstable (i.e., the bottom warmer than the top) then it would be overturned, and the air in that vertical column mixed. More sophisticated schemes add enhancements, recognizing that only some portions of the box might convect and that entrainment and other processes occur. Weather models that have gridboxes with sides between 5 kilometres (3.1 mi) and 25 kilometres (16 mi) can explicitly represent convective clouds, although they still need to parameterize cloud microphysics.[47] The formation of large-scale (stratus-type) clouds is more physically based: they form when the relative humidity reaches some prescribed value. Still, subgrid-scale processes need to be taken into account. Rather than assuming that clouds form at 100% relative humidity, the cloud fraction can be related to a critical relative humidity of 70% for stratus-type clouds, and at or above 80% for cumuliform clouds,[48] reflecting the subgrid-scale variation that would occur in the real world.
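Two of the ideas above can be sketched in a few lines: the earliest style of convective adjustment, and a cloud fraction tied to a critical relative humidity. Both functions are hypothetical illustrations. The temperatures are assumed to be a stability-relevant quantity such as potential temperature, and the linear ramp between the critical value and saturation is an assumption, not a scheme taken from the source.

```python
def adjust_column(temps_bottom_to_top):
    """If the column is unstable (bottom warmer than top), overturn it
    by mixing every level to the column mean; otherwise leave it alone."""
    if temps_bottom_to_top[0] > temps_bottom_to_top[-1]:
        mean = sum(temps_bottom_to_top) / len(temps_bottom_to_top)
        return [mean] * len(temps_bottom_to_top)
    return list(temps_bottom_to_top)


def cloud_fraction(relative_humidity, critical_rh=0.70):
    """Cloud fraction rising linearly (assumed) from 0 at the critical
    relative humidity to 1 at saturation; humidities are fractions."""
    if relative_humidity <= critical_rh:
        return 0.0
    if relative_humidity >= 1.0:
        return 1.0
    return (relative_humidity - critical_rh) / (1.0 - critical_rh)
```

With the default critical value of 0.70, a gridbox at 85% relative humidity is assigned a cloud fraction of about one half, so partial cloud cover appears well before the gridbox mean reaches saturation.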

The amount of solar radiation reaching ground level in rugged terrain, or due to variable cloudiness, is parameterized as this process occurs on the molecular scale.[49] Also, the grid size of the models is large when compared to the actual size and roughness of clouds and topography. Sun angle as well as the impact of multiple cloud layers is taken into account.[50] Soil type, vegetation type, and soil moisture all determine how much radiation goes into warming and how much moisture is drawn up into the adjacent atmosphere. Thus, they are important to parameterize.[51]

The horizontal domain of a model is either global, covering the entire Earth, or regional, covering only part of the Earth. Regional models also are known as limited-area models, or LAMs. Regional models use finer grid spacing to resolve explicitly smaller-scale meteorological phenomena, since their smaller domain decreases computational demands. Regional models use a compatible global model to supply the conditions at the edge of their domain. Uncertainty and errors within LAMs are introduced by the global model used for the boundary conditions at the edge of the regional model, as well as by the creation of the boundary conditions for the LAM itself.[52]

The vertical coordinate is handled in various ways. Some models, such as Richardson's 1922 model, use geometric height (z) as the vertical coordinate. Later models substituted the geometric coordinate with a pressure coordinate system, in which the geopotential heights of constant-pressure surfaces become dependent variables, greatly simplifying the primitive equations.[53] This follows since pressure decreases with height through the Earth's atmosphere.[54] The first model used for operational forecasts, the single-layer barotropic model, used a single pressure coordinate at the 500-millibar (15 inHg) level,[6] and thus was essentially two-dimensional. High-resolution models (also called mesoscale models), such as the Weather Research and Forecasting model, tend to use normalized pressure coordinates referred to as sigma coordinates.[55]
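A normalized pressure coordinate can be illustrated with its simplest form, sigma = p / p_s, where p_s is the surface pressure. This definition is an assumption for the sketch; individual models may use variants, for example ones that also involve a model-top pressure. The function names are hypothetical.

```python
def sigma(pressure_hpa: float, surface_pressure_hpa: float) -> float:
    """Normalized pressure: 1 at the surface, decreasing towards 0 aloft."""
    return pressure_hpa / surface_pressure_hpa


def pressure_on_sigma_levels(sigma_levels, surface_pressure_hpa):
    """Pressure (hPa) of each sigma level above a given surface pressure."""
    return [s * surface_pressure_hpa for s in sigma_levels]


if __name__ == "__main__":
    levels = [1.0, 0.5, 0.25]
    print(pressure_on_sigma_levels(levels, 1000.0))  # near sea level
    print(pressure_on_sigma_levels(levels, 700.0))   # over high terrain
```

Because the lowest level always has sigma equal to 1, it lies on the ground whether the surface pressure is 1,000 or 700 hPa, which is how the coordinate follows the terrain.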

Some of the better known global numerical models are:

Some of the better known regional numerical models are:

Because forecast models based upon the equations for atmospheric dynamics do not perfectly determine weather conditions near the ground, statistical corrections were developed to attempt to resolve this problem. Statistical models were created based upon the three-dimensional fields produced by numerical weather models, surface observations, and the climatological conditions for specific locations. These statistical models are collectively referred to as model output statistics (MOS),[60] and were developed by the National Weather Service for their suite of weather forecasting models.[17] The United States Air Force developed its own set of MOS based upon their dynamical weather model by 1983.[18]

Model output statistics differ from the perfect prog technique, which assumes that the output of numerical weather prediction guidance is perfect.[61] MOS can correct for local effects that cannot be resolved by the model due to insufficient grid resolution, as well as model biases. Forecast parameters within MOS include maximum and minimum temperatures, percentage chance of rain within a several hour period, precipitation amount expected, chance that the precipitation will be frozen in nature, chance for thunderstorms, cloudiness, and surface winds.[62]
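The idea of a statistical correction for model bias can be sketched with a single-predictor least-squares fit between past model output and matching observations at one location. This is an illustrative simplification with hypothetical names; operational MOS equations draw on many predictors.

```python
def fit_linear_mos(model_values, observed_values):
    """Fit observed = slope * model + intercept by ordinary least squares."""
    n = len(model_values)
    if n != len(observed_values) or n < 2:
        raise ValueError("need two or more paired values")
    mean_x = sum(model_values) / n
    mean_y = sum(observed_values) / n
    sxx = sum((x - mean_x) ** 2 for x in model_values)
    if sxx == 0.0:
        raise ValueError("model values must not all be identical")
    sxy = sum((x - mean_x) * (y - mean_y)
              for x, y in zip(model_values, observed_values))
    slope = sxy / sxx
    return slope, mean_y - slope * mean_x


def apply_mos(slope, intercept, model_value):
    """Correct a new raw model value with the fitted relationship."""
    return slope * model_value + intercept
```

If the raw model temperatures at a station have been consistently 2 degrees too cold, the fit returns a slope near 1 and an intercept near 2, and `apply_mos` adds that correction to new forecasts.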

In 1956, Norman Phillips developed a mathematical model that realistically depicted monthly and seasonal patterns in the troposphere. This was the first successful climate model.[13][14] Several groups then began working to create general circulation models.[63] The first general circulation climate model combined oceanic and atmospheric processes and was developed in the late 1960s at the Geophysical Fluid Dynamics Laboratory, a component of the U.S. National Oceanic and Atmospheric Administration.[64]

By 1975, Manabe and Wetherald had developed a three-dimensional global climate model that gave a roughly accurate representation of the current climate. Doubling CO2 in the model's atmosphere gave a roughly 2 °C rise in global temperature.[65] Several other kinds of computer models gave similar results: it was impossible to make a model that gave something resembling the actual climate and not have the temperature rise when the CO2 concentration was increased.

By the early 1980s, the U.S. National Center for Atmospheric Research had developed the Community Atmosphere Model (CAM), which can be run by itself or as the atmospheric component of the Community Climate System Model. The latest update (version 3.1) of the standalone CAM was issued on 1 February 2006.[66][67][68] In 1986, efforts began to initialize and model soil and vegetation types, resulting in more realistic forecasts.[69] Coupled ocean-atmosphere climate models, such as the Hadley Centre for Climate Prediction and Research's HadCM3 model, are being used as inputs for climate change studies.[63]

Air pollution forecasts depend on atmospheric models to provide fluid flow information for tracking the movement of pollutants.[70] In 1970, a private company in the U.S. developed the regional Urban Airshed Model (UAM), which was used to forecast the effects of air pollution and acid rain. In the mid- to late-1970s, the United States Environmental Protection Agency took over the development of the UAM and then used the results from a regional air pollution study to improve it. Although the UAM was developed for California, it was during the 1980s used elsewhere in North America, Europe, and Asia.[16]

The Movable Fine-Mesh model, which began operating in 1978, was the first tropical cyclone forecast model to be based on atmospheric dynamics.[15] Despite the constantly improving dynamical model guidance made possible by increasing computational power, it was not until the 1980s that numerical weather prediction (NWP) showed skill in forecasting the track of tropical cyclones. And it was not until the 1990s that NWP consistently outperformed statistical or simple dynamical models.[71] Predicting the intensity of tropical cyclones using NWP has also been challenging. As of 2009, dynamical guidance remained less skillful than statistical methods.[72]
