Twitch, the livestreaming service that largely caters to gamers, has exploded in popularity since being acquired by Amazon in 2014—but toxicity on the platform has also increased. This week, Twitch took an important step toward getting a handle on its applause-like "chat" feature, and it goes beyond the usual dictionary-based approach of flagging inappropriate or abusive language.

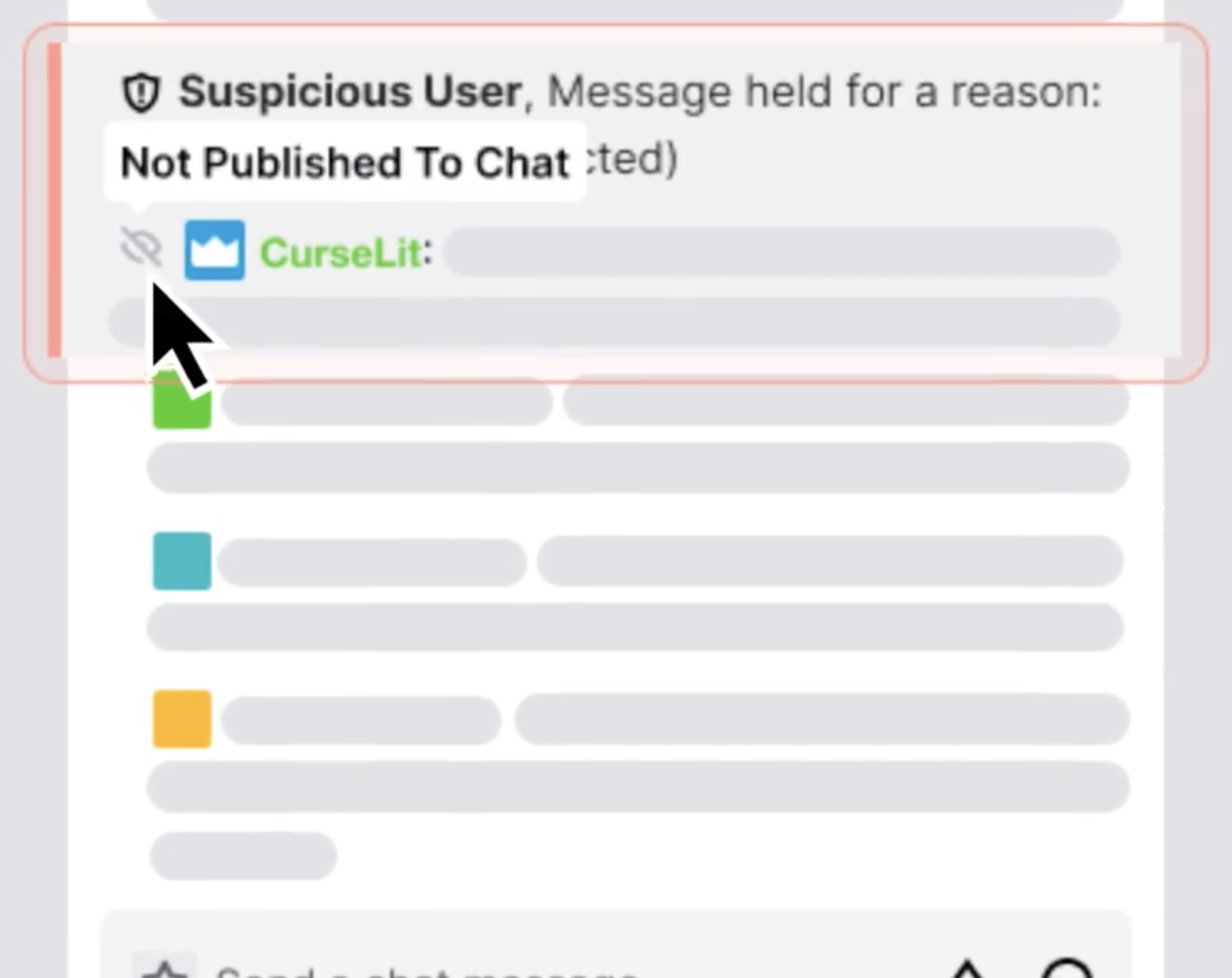

The Tuesday rollout of a new "ban evasion" flag came with a surprising amount of fanfare, and it puts Twitch in a position to do what many other platforms won't. The company is not only paying attention to "sockpuppet" account generation; it is pledging to squash it.

Spinning up attacks

Pretty much any modern online platform faces the same issue: Users can join, view, and comment on content with little more than an email address. If you want to say nasty things about Ars Technica across the Internet, for example, you could make a ton of new accounts on various sites in a matter of minutes. Your veritable anti-Ars mini-mob requires little more than a series of free email addresses. Should a service require some form of two-factor authentication, you could simply attach spare physical devices or spin up additional phone numbers.

In less hypothetical terms, Twitch creators have dealt with this "hate mob" problem for some time now, with the issue peaking in intensity after Twitch added an "LGBTQIA+" category. Abusive users charged up hyperfocused lasers of hateful speech, usually directed at smaller creators who could be discovered in Twitch's category directory. As I explained in September:

While Twitch includes built-in tools to block or flag messages that trigger a dictionary full of vulgar and hateful terms, many of the biggest hate-mob perpetrators have turned to their own dictionary-combing tools.

These tools allow perpetrators to evade basic moderation tools because they construct words using non-Latin characters—and can generate thousands of facsimiles of notorious slurs by mixing and matching characters, thus looking close enough to the original word. Their power for hate and bigotry explodes thanks to context that turns arguably innocent words into targeted insults, depending on the marginalized group they're aimed at.
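To see why character swapping defeats a plain word list, consider a minimal sketch of the matching problem. Everything in it is an assumption made for illustration: the `CONFUSABLES` table is a tiny hand-picked sample, `badword` is a placeholder term, and the function names are hypothetical. None of it reflects how Twitch's moderation actually works.

```python
import string
import unicodedata

# Placeholder blocklist; a real moderation dictionary would be far larger.
BLOCKLIST = {"badword"}

# Hypothetical, deliberately tiny table of non-Latin lookalikes.
CONFUSABLES = {
    "\u0430": "a",  # Cyrillic small a
    "\u0435": "e",  # Cyrillic small ie
    "\u043e": "o",  # Cyrillic small o
    "\u0440": "p",  # Cyrillic small er
    "\u0441": "c",  # Cyrillic small es
    "\u0455": "s",  # Cyrillic small dze
    "\u0456": "i",  # Cyrillic small Byelorussian-Ukrainian i
    "\u03b1": "a",  # Greek small alpha
    "\u03bf": "o",  # Greek small omicron
}


def fold(text: str) -> str:
    """Reduce a message to a rough Latin skeleton before lookup."""
    # NFKD splits accented letters into base + combining mark and
    # rewrites compatibility forms (such as fullwidth letters) as plain ones.
    decomposed = unicodedata.normalize("NFKD", text)
    # Drop the combining marks, then lowercase aggressively.
    stripped = "".join(ch for ch in decomposed if not unicodedata.combining(ch))
    lowered = stripped.casefold()
    # Swap any known lookalike for its Latin counterpart.
    return "".join(CONFUSABLES.get(ch, ch) for ch in lowered)


def _tokens(text: str) -> list[str]:
    """Split on whitespace and trim ASCII punctuation from each token."""
    return [tok.strip(string.punctuation) for tok in text.split()]


def naive_is_flagged(message: str) -> bool:
    """Plain dictionary check: only lowercases the message."""
    return any(tok in BLOCKLIST for tok in _tokens(message.casefold()))


def is_flagged(message: str) -> bool:
    """Dictionary check applied after folding lookalike characters."""
    return any(tok in BLOCKLIST for tok in _tokens(fold(message)))


if __name__ == "__main__":
    disguised = "you are a b\u0430dw\u043erd!"  # Cyrillic a and o swapped in
    print(naive_is_flagged(disguised), is_flagged(disguised))
```

Folding characters this way only narrows the gap. Any lookalike missing from the table slips through, and no amount of character mapping catches an ordinary word used as an insult in context.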

Battling these attacks on a dictionary-scanning level isn't so cut and dried, however. As any social media user will tell you, context matters—especially as language evolves and as harassers and abusers co-opt seemingly innocent phrases to target and malign marginalized communities.
