LiteRT (short for Lite Runtime) is the new name for TensorFlow Lite (TFLite). While the name is new, it's still the same trusted, high-performance runtime for on-device AI, now with an expanded vision.

Since its debut in 2017, TFLite has enabled developers to bring ML-powered experiences to over 100K apps running on 2.7B devices. More recently, TFLite has grown beyond its TensorFlow roots to support models authored in PyTorch, JAX, and Keras with the same leading performance. The name LiteRT captures this multi-framework vision for the future: enabling developers to start with any popular framework and run their model on-device with exceptional performance.

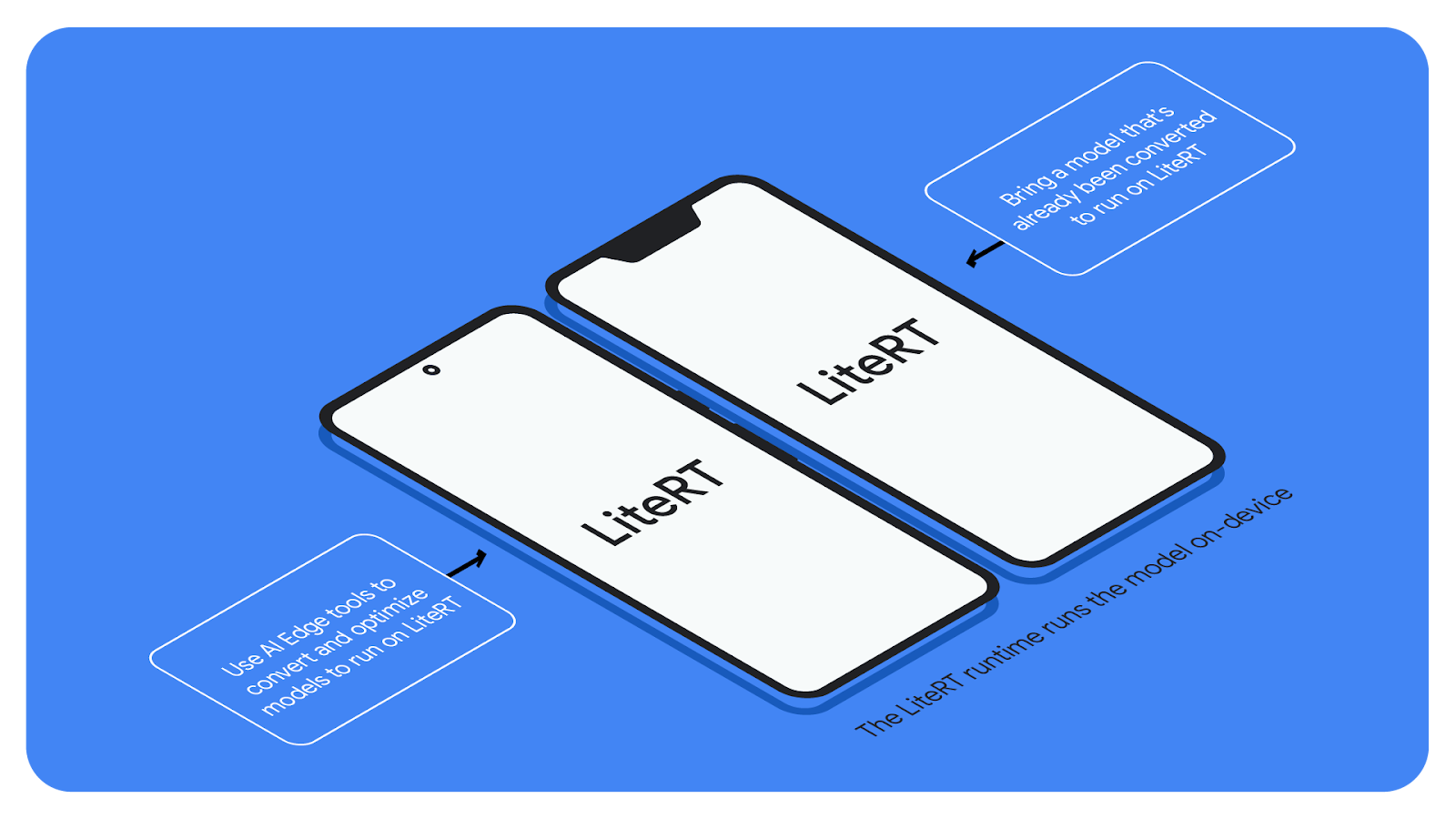

LiteRT, part of the Google AI Edge suite of tools, is the runtime that lets you seamlessly deploy ML and AI models on Android, iOS, and embedded devices. With AI Edge's robust model conversion and optimization tools, you can ready both open-source and custom models for on-device development.

This change will roll out progressively. Starting today, you’ll see the LiteRT name reflected in the developer documentation, which is moving to ai.google.dev/edge/litert, and in other references across the AI Edge website. The documentation at tensorflow.org/lite now redirects to corresponding pages at ai.google.dev/edge/litert.

The main TensorFlow brand will not be affected, nor will apps already using TensorFlow Lite.

Our goal is for this change to be minimally disruptive, requiring as few code changes from developers as possible.

If you currently use TensorFlow Lite via packages, you’ll need to update your dependencies to use the new LiteRT packages from Maven, PyPI, or CocoaPods.

If you currently use TensorFlow Lite via Google Play Services, no change is necessary at this time.

If you currently build TensorFlow Lite from source, please continue building from the TensorFlow repo until code has been fully moved to the new LiteRT repo later this year.
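For Python users on the package route, one way to ease the transition is to resolve the interpreter class at import time, preferring the new package name and falling back to the older ones. The sketch below is illustrative only: the module path `ai_edge_litert.interpreter` is an assumption about what the LiteRT PyPI package exposes, and `find_interpreter_class` is a hypothetical helper, not part of any library.

```python
import importlib

# Candidate modules, newest name first.
# ASSUMPTION: "ai_edge_litert.interpreter" is the module shipped by the LiteRT
# PyPI package; the other two are legacy TensorFlow Lite locations.
CANDIDATE_MODULES = (
    "ai_edge_litert.interpreter",
    "tflite_runtime.interpreter",
    "tensorflow.lite.python.interpreter",
)


def find_interpreter_class(candidates=CANDIDATE_MODULES, attr="Interpreter"):
    """Return (module_name, cls) for the first importable candidate, or None.

    Hypothetical helper: it only handles the package rename. The class and
    its methods are expected to be used the same way afterwards.
    """
    for name in candidates:
        try:
            module = importlib.import_module(name)
        except ImportError:
            continue  # Package not installed; try the next candidate.
        cls = getattr(module, attr, None)
        if cls is not None:
            return name, cls
    return None


if __name__ == "__main__":
    found = find_interpreter_class()
    if found is None:
        print("No LiteRT or TensorFlow Lite interpreter package is installed.")
    else:
        print(f"Using Interpreter from {found[0]}")
```

Once the fallback is no longer needed, the helper can be replaced by a direct import from the LiteRT package.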

1. What is changing beyond the new name, LiteRT?

For now, the only change is the new name, LiteRT. Your production apps will not be affected. With a new name and refreshed vision, look out for more updates coming to LiteRT, improving how you deploy classic ML models, LLMs, and diffusion models with GPU and NPU acceleration across platforms.

2. What’s happening to the TensorFlow Lite Support Library (including TensorFlow Lite Tasks)?

The TensorFlow Lite Support Library and TensorFlow Lite Tasks will remain in the /tensorflow repository at this time. We encourage you to use MediaPipe Tasks for future development.

3. What’s happening to TensorFlow Lite Model Maker?

You can continue to access TFLite Model Maker via https://pypi.org/project/tflite-model-maker/

4. What if I want to contribute code?

For now, please contribute code to the existing TensorFlow Lite repository. We’ll make a separate announcement when we’re ready for contributions to the LiteRT repository.

5. What’s happening to the .tflite file extension and file format?

No changes are being made to the .tflite file extension or format. Conversion tools will continue to output .tflite flatbuffer files, and .tflite files will be readable by LiteRT.
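Because the format is unchanged, existing sanity checks on model files should keep working. As a minimal sketch, the snippet below checks for the FlatBuffers file identifier `TFL3`, which the TensorFlow Lite schema declares and which sits at byte offsets 4 to 7 of a standard (non-size-prefixed) buffer. The function names are hypothetical, and this is a quick plausibility check, not a full validation of the model.

```python
from pathlib import Path

# File identifier declared by the TensorFlow Lite FlatBuffers schema.
TFLITE_FILE_IDENTIFIER = b"TFL3"


def looks_like_tflite(data: bytes) -> bool:
    """Return True if `data` carries the TFL3 identifier at offsets 4..7.

    Assumes a standard, non-size-prefixed FlatBuffer: bytes 0..3 hold the
    root table offset, and the 4-byte file identifier follows.
    """
    return len(data) >= 8 and data[4:8] == TFLITE_FILE_IDENTIFIER


def file_looks_like_tflite(path: str) -> bool:
    """Read only the first 8 bytes of the file and apply the same check."""
    with Path(path).open("rb") as f:
        return looks_like_tflite(f.read(8))
```

A check like this can catch a mislabeled or truncated download before the file is handed to the runtime.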

6. How do I convert models to .tflite format?

For TensorFlow, Keras, and JAX, you can continue to use the same flows. For PyTorch support, check out ai-edge-torch.

7. Will there be any changes to classes and methods?

No. Aside from package names, you won’t have to change any code you’ve written for now.

8. Will there be any changes to TensorFlow.js?

No, TensorFlow.js will continue to function independently as part of the TensorFlow codebase.

9. My production app uses TensorFlow Lite. Will it be affected?

Apps that have already deployed TensorFlow Lite will not be affected. This includes apps that access TensorFlow Lite via Google Play Services. (TFLite is compiled into the apps at build time, so once they’re deployed, apps have no dependency.)

10. Why “LiteRT”?

“LiteRT” (short for Lite Runtime) reflects the legacy of TensorFlow Lite, a pioneering “lite”, on-device runtime, plus Google’s commitment to supporting today’s thriving multi-framework ecosystem.

11. Is TensorFlow Lite still being actively developed?

Yes, but under the name LiteRT. Active development will continue on the runtime (now called LiteRT), as well as the conversion and optimization tools. To ensure you're using the most up-to-date version of the runtime, please use LiteRT.

12. Where can I see examples of LiteRT in practice?

You can find examples for Python, Android, and iOS in the official LiteRT samples repo.

We’re excited for the future of on-device ML and are committed to our vision of making LiteRT the easiest-to-use, highest-performance runtime for a wide range of models.