Objective

Reduce the "typical" time it takes for a commit to be approved and landed in master to 5 minutes or less.

Status quo

As of 11 June 2019, the gate usually takes around 20 minutes.

The two slowest jobs typically take 13-17 minutes each. The gate as a whole rarely finishes in under 15 minutes, for two reasons: we run several of these jobs, which increases the chance that at least one of them is randomly slow; and although they can run in parallel, they don't always start immediately, because the number of CI execution slots is limited.
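The "more jobs, more chances of random slowness" effect can be made concrete with a simple model. If each job is independently slow with probability p, the chance that at least one of k jobs is slow (and therefore that the gate is slow) is 1 - (1 - p)^k. This is a minimal sketch: the independence assumption and any value of p are hypothetical, not measured from CI.

```python
def prob_gate_slow(p: float, k: int) -> float:
    """Probability that at least one of k jobs is slow, assuming each job is
    independently slow with probability p (a simplifying assumption)."""
    if not 0.0 <= p <= 1.0:
        raise ValueError("p must be a probability in [0, 1]")
    if k < 0:
        raise ValueError("k must be non-negative")
    # Complement of "all k jobs are fast".
    return 1.0 - (1.0 - p) ** k


if __name__ == "__main__":
    # Hypothetical p = 0.1: compare a gate running 1, 2, 4 or 8 such jobs.
    for k in (1, 2, 4, 8):
        print(f"k={k}: P(gate slow) = {prob_gate_slow(0.1, k):.3f}")
```

Even with a modest per-job probability, the gate-level probability grows quickly with the number of jobs, which is why trimming or merging slow jobs helps more than the per-job numbers alone suggest.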

Below is a sample from a MediaWiki commit (master branch):

These can be grouped into two kinds of jobs:

- wmf-quibble: These install MW with the gated extensions, and then run all PHPUnit, Selenium and QUnit tests.

- mediawiki-quibble: These install MW bundled extensions only, and then run PHPUnit, Selenium and QUnit tests.

Stats from wmf-quibble-core-vendor-mysql-php72-docker:

- 9-15 minutes (wmf-gated, extensions-only)

- Sample:

  - PHPUnit (dbless): 1.91 minutes / 15,782 tests.

  - QUnit: 29 seconds / 1,286 tests.

  - Selenium: 143 seconds / 43 tests.

  - PHPUnit (db): 3.85 minutes / 4,377 tests.

Stats from mediawiki-quibble-vendor-mysql-php72-docker:

- 7-10 minutes (plain mediawiki-core)

- Sample:

  - PHPUnit (unit+dbless): 1.5 minutes / 23,050 tests.

  - QUnit: 4 seconds / 437 tests.

  - PHPUnit (db): 4 minutes / 7,604 tests.

Updated status quo

As of 11 May 2021, the gate usually takes around 25 minutes.

The slowest job typically takes 20-25 minutes per run. The gate as a whole can never finish faster than its slowest job, and it is often slower: although the other jobs run in parallel, they don't always start immediately, because the number of CI execution slots is limited.

Below are the timing results from a sample MediaWiki commit (master branch):

[Snipped: Jobs faster than 5 minutes]

- 9m 43s: mediawiki-quibble-vendor-mysql-php74-docker/5873/console

- 9m 47s: mediawiki-quibble-vendor-mysql-php73-docker/8799/console

- 10m 03s: mediawiki-quibble-vendor-sqlite-php72-docker/10345/console

- 10m 13s: mediawiki-quibble-composer-mysql-php72-docker/19129/console

- 10m 28s: mediawiki-quibble-vendor-mysql-php72-docker/46482/console

- 13m 11s: mediawiki-quibble-vendor-postgres-php72-docker/10259/console

- 16m 44s: wmf-quibble-core-vendor-mysql-php72-docker/53990/console

- 22m 26s: wmf-quibble-selenium-php72-docker/94038/console
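The relationship between individual job times and the overall gate time can be sketched as a small scheduling model. With enough slots, the gate takes as long as its slowest job; with fewer slots than jobs, later jobs wait for a slot and the gate stretches further. This sketch uses the durations listed above (excluding the snipped sub-5-minute jobs); the slot counts, the "all jobs queued at t=0" rule and the queue order are hypothetical simplifications, not a model of how Zuul/Jenkins actually allocate executors.

```python
import heapq

# Durations (in seconds) of the jobs listed above, in the listed order.
SAMPLE = [583, 587, 603, 613, 628, 791, 1004, 1346]


def gate_wall_clock(durations, slots):
    """Time at which the last job finishes when jobs start in queue order and
    at most `slots` jobs run at once.

    Simplifying assumptions: every job is queued at t=0, no job fails, and a
    freed slot is immediately taken by the next queued job."""
    if slots < 1:
        raise ValueError("need at least one execution slot")
    # Min-heap of the times at which each slot becomes free.
    free_at = [0] * min(slots, len(durations))
    heapq.heapify(free_at)
    finish = 0
    for d in durations:
        start = heapq.heappop(free_at)  # earliest available slot
        end = start + d
        heapq.heappush(free_at, end)
        finish = max(finish, end)
    return finish


def mmss(seconds):
    """Format whole seconds as e.g. '22m 26s'."""
    return f"{seconds // 60}m {seconds % 60:02d}s"


if __name__ == "__main__":
    for slots in (len(SAMPLE), 4, 2):
        print(f"{slots} slots: {mmss(gate_wall_clock(SAMPLE, slots))}")
```

With one slot per job the result equals the slowest job (22m 26s); with fewer slots it grows, and the order in which jobs are queued starts to matter (for example, starting the longest jobs first shortens the 4-slot case in this model).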

The last two jobs clearly dominate the timing:

- wmf-quibble: This job installs MW with the gated extensions, and then runs all PHPUnit and QUnit tests.

- wmf-quibble-selenium: This job installs MW with the gated extensions, and then runs all the Selenium tests.

Note that the mediawiki-quibble jobs each install just the MW bundled extensions, and then run PHPUnit, Selenium and QUnit tests.

Stats from wmf-quibble-core-vendor-mysql-php72-docker:

- 13-18 minutes (wmf-gated, extensions-only)

- Select times:

  - PHPUnit (unit tests): 9 seconds / 13,170 tests.

  - PHPUnit (DB-less integration tests): 3.31 minutes / 21,067 tests.

  - PHPUnit (DB-heavy): 7.91 minutes / 4,257 tests.

  - QUnit: 31 seconds / 1,421 tests.
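Dividing each suite's time by its test count gives a rough per-test cost, which shows where the time goes. This is a minimal sketch using only the select times above; the average includes per-suite setup, so it is an approximation rather than a true per-test measurement.

```python
# Select times for wmf-quibble-core-vendor-mysql-php72-docker, from the stats above:
# suite -> (wall-clock seconds, number of tests).
SELECT_TIMES = {
    "PHPUnit (unit tests)": (9.0, 13_170),
    "PHPUnit (DB-less integration tests)": (3.31 * 60, 21_067),
    "PHPUnit (DB-heavy)": (7.91 * 60, 4_257),
    "QUnit": (31.0, 1_421),
}


def ms_per_test(seconds: float, tests: int) -> float:
    """Average wall-clock milliseconds per test for one suite run."""
    return 1000.0 * seconds / tests


if __name__ == "__main__":
    for name, (seconds, tests) in SELECT_TIMES.items():
        print(f"{name}: {ms_per_test(seconds, tests):.2f} ms/test")
```

By this measure a DB-heavy test costs roughly 111 ms on average versus under 1 ms for a unit test, over a hundred times more, and the DB-heavy group accounts for most of the PHPUnit time despite having the fewest tests. Moving cases from DB-heavy integration tests into unit tests is therefore the most promising lever within this job.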

Stats from wmf-quibble-selenium-php72-docker:

- 20-25 minutes

Scope of task

This task represents the goal of reaching 5 minutes or less. The work tracked here includes researching ways to get there, trying them out, and putting one or more ideas into practice. The task can be closed once we have reached the goal or if we have concluded it isn't feasible or useful.

Feel free to add/remove subtasks as we go along and consider different things.

Stuff done

- T202116: LoadBalancer opening extra connections in different connection categories doesn't work with PHPUnit & temporary tables

- T225496: Improve caching in CI tests

- T225901: Don't deduplicate archive table on new installs

- T225719: HashRingTest::testHashRingKetamaMode takes 3+ seconds

- T225184: CirrusSearch\SearcherTest::testSearchText PHPUnit tests take a while and runs for everyone

- T227067: ReleaseNotesTest:testReleaseNotesFilesExistAndAreNotMalformed takes ~ 4 seconds

- T196347: Quibble may need to rebuild localization cache before running tests

- Speed up LocalisationCache in Quibble jobs by switching from DB to file-based (by setting $wgCacheDirectory). – https://gerrit.wikimedia.org/r/528933, https://gerrit.wikimedia.org/r/529057

- Skip "npm test" step during Quibble jobs (covered by separate node10-test job). – T233143

- T230701: Migrate Scribunto to stop using MediaWikiIntegrationTestCase on unit tests

- T232759: Move CI selenium/qunit tests of mediawiki repository to a standalone job

- Wikibase: T231862: Selenium tests for Wikibase are being ran twice

- T225068: Add a PHPUnit group to skip test on gated CI runs

- T225248: Consider moving browser based tests (Selenium and QUnit) to a non-voting pipeline

- Use npm ci: T234738: Quibble jobs re-download npm packages every build (Castor not loading?)

Ideas to explore and related work

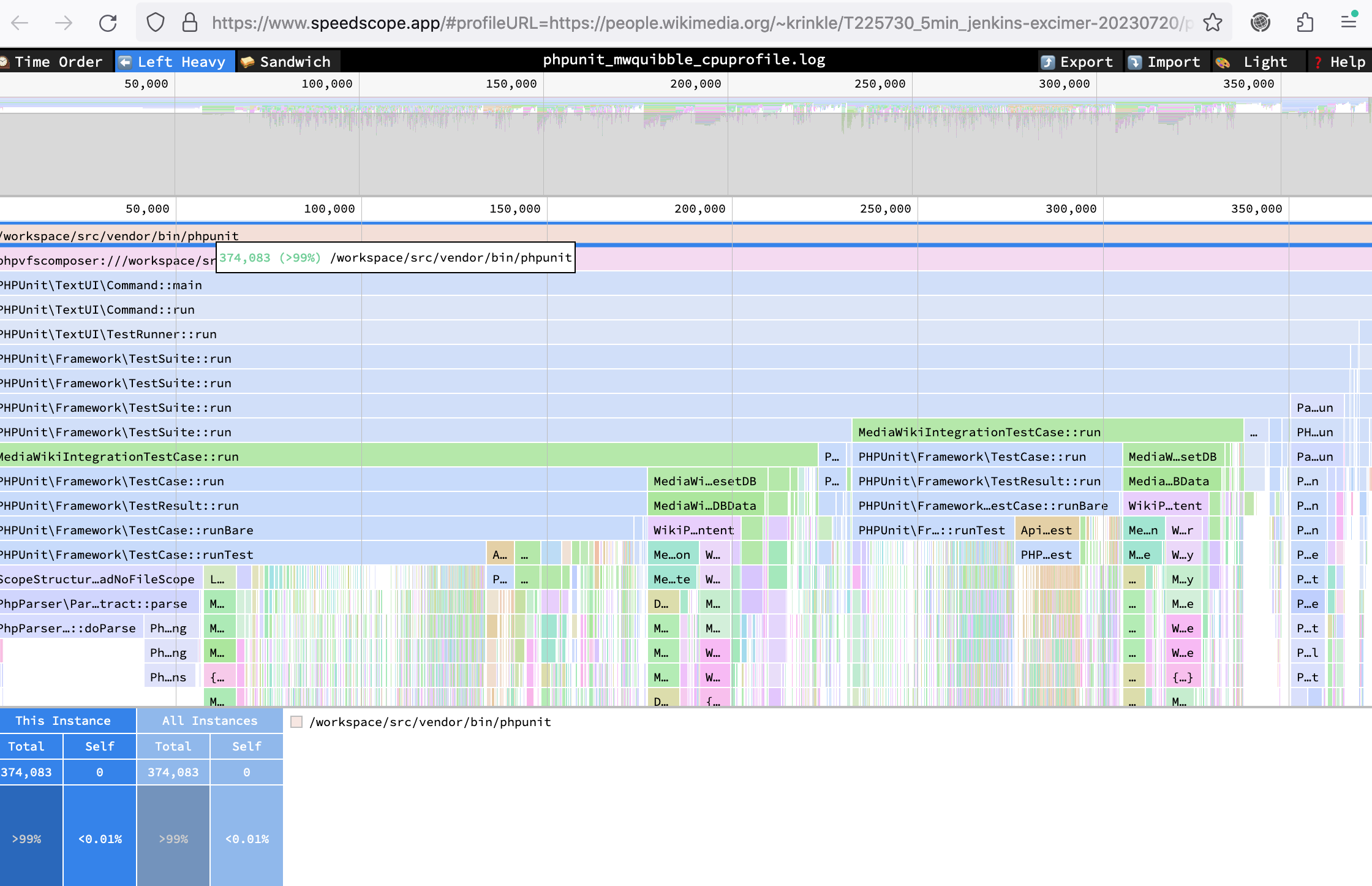

- Open the PHPUnit "Test Report" for a commit and sort the root by duration. Find the slowest tests and examine their test suites for ways to improve them. Is a suite repeating expensive setup? Perhaps that can be skipped or reused. Is it running hundreds of variations of the same integration test? Perhaps reduce it to a single case for that scenario, and move the remaining cases to a lighter unit test instead.

- T50217: Speed up MediaWiki PHPUnit build by running integration tests in parallel

- T226869: Run browser tests in parallel

- T221434: Ensure we're testing appropriately and not over-testing across Wikimedia-deployed code

- T225218: Consider httpd for quibble instead of php built-in server

- Wikibase: T228739: Jenkins runs Scribunto tests in Wikibase patches

- T323750: Provide early feedback when a patch has job failures
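The first idea above (sorting the test report by duration) and T50217 (running integration tests in parallel) can both start from the same data: per-suite durations. This is a minimal sketch assuming the report is available as JUnit-style XML, for example as written by PHPUnit's `--log-junit` option, with `classname` (or `class`) and `time` attributes on each `<testcase>`. The greedy "longest suite first, onto the least-loaded worker" split is a swapped-in, hypothetical balancing strategy, not something T50217 has settled on; the suite names in the usage example are made up.

```python
import heapq
import xml.etree.ElementTree as ET
from collections import defaultdict


def suite_durations(junit_xml: str) -> dict:
    """Sum <testcase time="..."> (seconds) per classname in a JUnit-style report."""
    root = ET.fromstring(junit_xml)
    totals = defaultdict(float)
    for case in root.iter("testcase"):
        suite = case.get("classname") or case.get("class") or "<unknown>"
        totals[suite] += float(case.get("time", "0"))
    return dict(totals)


def slowest(durations: dict, n: int = 10) -> list:
    """The n suites with the largest total duration, slowest first."""
    return sorted(durations.items(), key=lambda kv: kv[1], reverse=True)[:n]


def split_into_buckets(durations: dict, workers: int) -> list:
    """Greedy longest-first split of suites across parallel workers.

    Returns one (total_seconds, [suite, ...]) pair per worker."""
    if workers < 1:
        raise ValueError("need at least one worker")
    heap = [(0.0, i) for i in range(workers)]  # (current load, worker index)
    buckets = [[] for _ in range(workers)]
    totals = [0.0] * workers
    for suite, secs in slowest(durations, len(durations)):
        load, i = heapq.heappop(heap)  # least-loaded worker so far
        buckets[i].append(suite)
        totals[i] = load + secs
        heapq.heappush(heap, (totals[i], i))
    return list(zip(totals, buckets))


# Hypothetical report with made-up suite names.
SAMPLE_REPORT = """<testsuites>
  <testsuite name="example">
    <testcase name="testA" classname="FooTest" time="2.5"/>
    <testcase name="testB" classname="FooTest" time="1.5"/>
    <testcase name="testC" classname="BarTest" time="3.0"/>
    <testcase name="testD" classname="BazTest" time="0.5"/>
  </testsuite>
</testsuites>"""

if __name__ == "__main__":
    durations = suite_durations(SAMPLE_REPORT)
    print(slowest(durations, 3))
    print(split_into_buckets(durations, 2))
```

In practice the per-suite totals from a real gate run would feed both steps: the `slowest` list points at suites worth optimizing, and the bucket totals give an estimate of the wall-clock time a parallel run could reach.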