Measurement: Reliability and Validity
• For a measure to be useful, it must be both reliable and valid
• Reliable = consistent in producing the same results every time the measure is used
• Valid = measuring what it is supposed to measure

Reliability
Observed Score = True Score + Systematic Error + Random Error
• Observed scores are the data gathered by the researcher
• True scores are the actual, unknown values that correspond to the construct of interest
• Systematic error is variation that results from constructs of disinterest
• Random error is nonsystematic variation in the observed scores

Sources of Variability
• Construct of interest
  – corresponds to the IV
  – “effect”
• Constructs of disinterest
  – “systematic error”
• Non-systematic variation
  – “random error”
  – “error variance”

Observed Score = True Score + Systematic Error + Random Error
[Diagram comparing a more reliable and a less reliable measure; the labeled component is Random Error]

How many o’s? Test 1 Xxxxxoxxxxxxxxxxxxxoxxxxxxxaxxxxxuxxxxoxxxqxxxxxxxc Test 2 Xxxxxoxxxxxxxxxxxxxoxxxxxxxaxxxxxuxxxxoxxxqxxxxxxxc

How many o’s? Test 1 Xxxxxoxxxxxxxxxxxxxoxxxxxo xxxxxxxaxxxxxuxxxxoxxxqxxxxxxxc Test 2 Xxxxxoxxxxxxxxxxxxxoxxxxxo xxxxxxxaxxxxxuxxxxoxxxqxxxxxxxc

How do we determine whether a measure is reliable?
Types of reliability
• Test-retest
• Internal consistency
  – Split-half
  – Cronbach’s alpha: average of all possible split-half reliabilities
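The three reliability estimates named above can be sketched in a few lines. This is a minimal illustration, not a prescribed procedure: the `responses` and `retest_totals` data are invented, the function names are hypothetical, and the split-half function uses only one possible split (odd versus even items), whereas Cronbach's alpha is computed here from the standard item-variance formula.

```python
from statistics import mean, variance


def pearson_r(x, y):
    """Pearson correlation between two equal-length lists of scores."""
    mx, my = mean(x), mean(y)
    cov = sum((a - mx) * (b - my) for a, b in zip(x, y))
    ss_x = sum((a - mx) ** 2 for a in x)
    ss_y = sum((b - my) ** 2 for b in y)
    return cov / (ss_x * ss_y) ** 0.5


def cronbach_alpha(rows):
    """Cronbach's alpha; rows holds one list of item scores per respondent."""
    k = len(rows[0])
    item_vars = [variance([r[i] for r in rows]) for i in range(k)]
    total_var = variance([sum(r) for r in rows])
    return k / (k - 1) * (1 - sum(item_vars) / total_var)


def split_half_r(rows):
    """Correlation between odd-item and even-item half totals (one split only)."""
    odd_half = [sum(r[0::2]) for r in rows]
    even_half = [sum(r[1::2]) for r in rows]
    return pearson_r(odd_half, even_half)


# Invented data for illustration: 5 respondents answering 4 items.
responses = [
    [4, 5, 4, 5],
    [2, 3, 2, 2],
    [5, 5, 4, 4],
    [1, 2, 1, 2],
    [3, 3, 4, 3],
]
# Invented total scores for the same 5 respondents on a second administration.
retest_totals = [17, 10, 18, 7, 13]

first_totals = [sum(r) for r in responses]
print("test-retest r:", round(pearson_r(first_totals, retest_totals), 3))
print("split-half r:", round(split_half_r(responses), 3))
print("Cronbach's alpha:", round(cronbach_alpha(responses), 3))
```

Values near 1 indicate consistent measurement. With real data, the split-half correlation depends on which items land in each half, which is one reason alpha is often reported instead.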

Factors that increase reliability
• Number of items
• High variation among individuals being tested
• Clear instructions
• Optimal testing situation
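One common way to quantify the effect of the number of items is the Spearman-Brown prophecy formula, which the slides do not mention and which is brought in here only as an illustration. It assumes the added items are comparable to the existing ones. The function name and the starting reliability of 0.60 are hypothetical.

```python
def spearman_brown(reliability, length_factor):
    """Predicted reliability after lengthening a test by length_factor.

    Assumes the added items are comparable to the existing ones
    (Spearman-Brown prophecy formula).
    """
    return (length_factor * reliability) / (1 + (length_factor - 1) * reliability)


# Hypothetical starting reliability of 0.60 for the original test length.
for factor in (1, 2, 4):
    print(f"{factor}x items -> predicted reliability "
          f"{spearman_brown(0.60, factor):.3f}")
```

Under these assumptions, predicted reliability rises as items are added, with diminishing returns for each further doubling.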

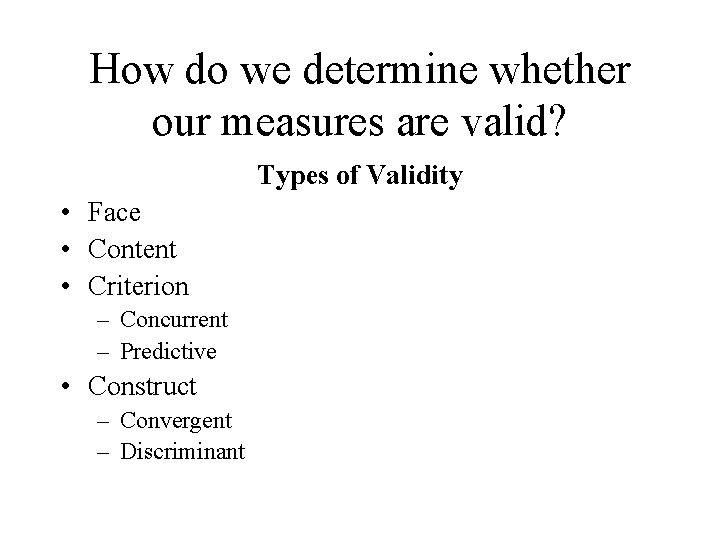

How do we determine whether our measures are valid?
Types of Validity
• Face
• Content
• Criterion
  – Concurrent
  – Predictive
• Construct
  – Convergent
  – Discriminant

Threats to reliability and validity
Score = effect + systematic error + random error
• Systematic error: threat to validity
• Random error: threat to reliability
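A small simulation can make the distinction concrete. The sketch below rests on simplifying assumptions that are not in the slides: systematic error is modeled as a constant offset (`bias`), random error as Gaussian noise, and all names and parameter values are invented for illustration.

```python
import random
from statistics import mean


def pearson_r(x, y):
    """Pearson correlation between two equal-length lists of scores."""
    mx, my = mean(x), mean(y)
    cov = sum((a - mx) * (b - my) for a, b in zip(x, y))
    ss_x = sum((a - mx) ** 2 for a in x)
    ss_y = sum((b - my) ** 2 for b in y)
    return cov / (ss_x * ss_y) ** 0.5


def administer(true_scores, bias, noise_sd, rng):
    """Observed score = true score + systematic error + random error.

    Assumption: systematic error is a constant offset for everyone.
    """
    return [t + bias + rng.gauss(0, noise_sd) for t in true_scores]


rng = random.Random(0)  # fixed seed so the run is repeatable
true_scores = [rng.gauss(50, 10) for _ in range(500)]

# Measure A: large systematic error, little random error.
biased_1 = administer(true_scores, bias=5, noise_sd=1, rng=rng)
biased_2 = administer(true_scores, bias=5, noise_sd=1, rng=rng)

# Measure B: no systematic error, large random error.
noisy_1 = administer(true_scores, bias=0, noise_sd=15, rng=rng)
noisy_2 = administer(true_scores, bias=0, noise_sd=15, rng=rng)

# Test-retest correlation reflects reliability.
biased_r = pearson_r(biased_1, biased_2)
noisy_r = pearson_r(noisy_1, noisy_2)

# Average distance from the true scores reflects systematic error.
biased_offset = mean(o - t for o, t in zip(biased_1, true_scores))
noisy_offset = mean(o - t for o, t in zip(noisy_1, true_scores))

print(f"biased measure: retest r = {biased_r:.2f}, mean offset = {biased_offset:.2f}")
print(f"noisy measure:  retest r = {noisy_r:.2f}, mean offset = {noisy_offset:.2f}")
```

In this setup the biased measure repeats itself almost perfectly yet is consistently off target, while the noisy measure is centered on the true scores but gives inconsistent results from one administration to the next.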

- Slides: 10