Abstract

The National Lung Screening Trial is evaluating the effectiveness of low-dose spiral CT and conventional chest X-ray as screening tests for persons who are at high risk for developing lung cancer. This multicenter trial requires quality assurance (QA) for the image quality and technical parameters of the scans. The electronic system described here helps manage the QA process. The system includes a workstation at each screening center that de-identifies the data, a DICOM storage service at the QA Coordinating Center, and Web-based systems for presenting images and QA evaluation forms to the QA radiologists. Quality assurance data are collated and analyzed by an independent statistical organization. We describe the design and implementation of this electronic QA system, emphasizing issues relating to data security and privacy, the various obstacles encountered in the installation of a common system at different participating screening centers, and the functional success of the system deployed.

Key words: Clinical trial, quality assurance, VPN, NLST

Introduction

The National Lung Screening Trial (NLST) aims to compare the effectiveness of two screening tests, low-dose spiral CT scan and chest X-ray (CXR), in reducing lung cancer-specific mortality in persons who are at high risk for developing lung cancer. The trial is sponsored by the National Cancer Institute and conducted within two separate administrative organizations: the Prostate, Lung, Colorectal, and Ovarian (PLCO) cancer screening trial network and the American College of Radiology Imaging Network (ACRIN), under a harmonized protocol. Recruitment through 10 PLCO screening centers (SCs) began at the end of September 2002 and was completed in January 2004. Total NLST accrual at PLCO SCs was 34,614. Participants were randomized to CT and CXR groups. The protocol calls for imaging studies to be performed at baseline, then annually for 2 years (three studies per participant). Each SC provides diagnostic interpretation of local imaging studies. Diagnostic summaries, as well as other clinical and demographic data, are maintained by Westat (Rockville, MD), an independent research corporation, contracted to provide coordinating and statistical services for the PLCO trial network.

Computed tomography and CXR image-acquisition devices at the SCs vary with respect to vendor and model. All CT scanners are multidetector devices. Many CXR devices are digital, but film interpretation is still used at some SCs. To monitor image quality and provide quality control throughout the study, an electronic network incorporating the various devices was established. This network provides the means to collect randomly selected digital imaging studies from SCs in electronic format, distribute the studies to QA radiologists, and collect the image quality review data. Here we describe the requirements, components, and function of the entire network and QA system.

Methods

Prior to the launch of the NLST, the National Cancer Institute (NCI) PLCO project officers and Westat convened a Quality Assurance Working Group for the purpose of maintaining quality control for the PLCO-NLST sites over the life of the trial. This committee outlined an approach to quality control consisting of three components: (1) CT and radiography equipment quality control; (2) image quality control; and (3) study interpretation quality control. For the image quality control component, the committee decided that blinded review of the image quality by board-certified radiologists would be essential to maintain quality standards for imaging studies.

The Electronic Radiology Laboratory at the Mallinckrodt Institute of Radiology (MIR) was enlisted as the Quality Assurance Coordinating Center (QACC) to design and develop an electronic QA system that would be used by QA radiologists to review a statistical sample of the screening studies. The Quality Assurance Working Group defined the following requirements for an electronic QA system.

The QA system should sample the screening studies based on data provided by Westat. Westat should provide the list of studies from each screening center to be reviewed and assign a QA radiologist to each study. A sample size of approximately 432 CT studies and 432 chest X-rays per year was calculated to ensure detection of a 3% defect rate, while accepting that a defect rate of 1% may go undetected.
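The trade-off described above can be explored with a binomial model of the annual sample. This sketch assumes, hypothetically, that a year's sample is flagged when more than `C` defective studies are found. The threshold actually used in the sample-size calculation is not stated here, so `C` is illustrative only.

```python
from math import comb


def prob_more_than(n: int, p: float, c: int) -> float:
    """P(X > c) for X ~ Binomial(n, p): the chance that a sample of n studies
    contains more than c defective studies when the true defect rate is p."""
    at_most_c = sum(comb(n, k) * p**k * (1 - p) ** (n - k) for k in range(c + 1))
    return 1.0 - at_most_c


N = 432  # annual sample size per modality (from the text)
C = 8    # hypothetical flagging threshold, NOT taken from the NLST protocol

for rate in (0.01, 0.03):
    print(f"true defect rate {rate:.0%}: P(sample flagged) = {prob_more_than(N, rate, C):.3f}")
```

With this illustrative threshold, a 3% defect rate is flagged in most years while a 1% rate usually is not; changing `C` shifts that balance.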

The QA system should allow screening centers to submit studies to the QACC electronically over the Internet. Data submission should be rapid and simple for each screening center.

Data submission should follow guidelines established in consideration of local Institutional Review Board (IRB) and HIPAA policies. Images should have protected health information (PHI) removed before submission to the QACC. Furthermore, data submitted over the Internet must be secured using encryption or other means.

Quality assurance radiologists should be able to review each study (CT or chest X-ray) with a responsive, efficient system.

Each study that is to be reviewed should be displayed with a combination of technical parameters (kVp, mAs, effective mAs, etc.) from the images and subjective questions regarding image quality (e.g., field of view, motion artifacts). Quality assurance radiologists should be able to evaluate all parameters in each of the categories.

Planning the image quality QA system structure and procedures occurred over several months prior to the start of the trial. This involved presentation of design and procedural options to the Quality Assurance Working Group and to other NCI and NLST SC personnel at Steering Committee meetings. Feedback and suggestions from these groups were used to develop the system implemented.

Results

Design of a Quality Assurance System

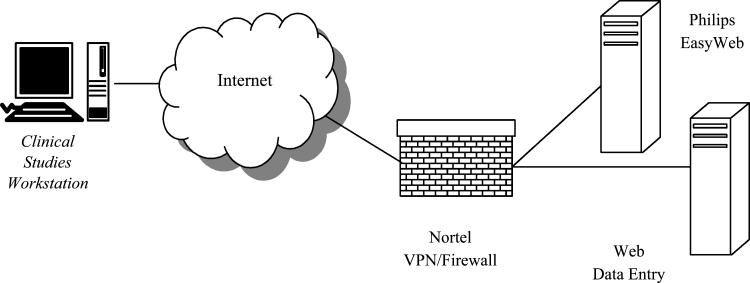

The QA system can be divided into two components: an input system and a Web-based review system. The QA input system is shown in Figure 1. The Clinical Studies Workstation (CSW) [1] is a hardware/software combination developed previously at the MIR Electronic Radiology Laboratory. Each CSW consists of a standard Dell desktop computer (Precision 340) with a Sony SDM-P82 TFT LCD color flat-panel monitor. The monitor was chosen after a quantitative performance evaluation of several flat-panel systems and was judged to provide an appropriate balance between performance and cost for the intended purposes. This system was not designed for use as a clinical diagnostic device. One CSW was placed at each screening center, where it performs several functions.

The CSW contains a DICOM Storage Service Class Provider (SCP) that accepts DICOM C-Store requests and stores images in a local cache. Images are accepted without change from scanners (CT, X-ray) and stored for later de-identification by a technologist or study coordinator at the screening center.
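The caching behavior described above can be pictured as images grouped by study, so that a coordinator can later pick one study to de-identify. This is a hypothetical in-memory model, not the CSW's implementation (which used MIR CTN for DICOM communication and storage); only the UID attributes named in the comments are standard DICOM.

```python
from collections import defaultdict


class LocalImageCache:
    """Hypothetical model of the CSW cache: images kept unchanged, grouped by study."""

    def __init__(self):
        # Study Instance UID (0020,000D) -> {SOP Instance UID (0008,0018) -> header}
        self._studies = defaultdict(dict)

    def store(self, header: dict) -> None:
        """Handle one received image; a resent image simply replaces the earlier copy."""
        study_uid = header["StudyInstanceUID"]
        sop_uid = header["SOPInstanceUID"]
        self._studies[study_uid][sop_uid] = dict(header)  # shallow copy of the header as received

    def study_uids(self) -> list:
        """Studies available for the coordinator to de-identify."""
        return sorted(self._studies)

    def images(self, study_uid: str) -> list:
        return list(self._studies.get(study_uid, {}).values())


cache = LocalImageCache()
cache.store({"StudyInstanceUID": "1.2.3", "SOPInstanceUID": "1.2.3.1", "PatientName": "DOE^JANE"})
cache.store({"StudyInstanceUID": "1.2.3", "SOPInstanceUID": "1.2.3.2", "PatientName": "DOE^JANE"})
print(cache.study_uids(), len(cache.images("1.2.3")))
```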

The CSW has a Microsoft Windows-based application with a graphical user interface (GUI) that allows the coordinator to de-identify an individual study and place the modified study in a queue for transmission to the QACC. The application produces a text file describing the changes to be made to each image in the study as it is sent to the QACC (remove the patient name, change the patient ID to a Westat-provided value, remove private groups).
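The paper does not describe the instruction file's format. Purely as an illustration, the sketch below renders the three changes listed above as one plain-text directive per line; the directive keywords, function names, and layout are hypothetical.

```python
def render_instructions(study_uid: str, westat_id: str) -> str:
    """Build a hypothetical per-study instruction file for the export service.

    One directive per line; tags are written as group,element in hex.
    """
    directives = [
        f"STUDY {study_uid}",
        "REMOVE 0010,0010",            # Patient's Name
        f"SET 0010,0020 {westat_id}",  # Patient ID -> Westat-provided identifier
        "REMOVE_PRIVATE_GROUPS",       # all odd-numbered (private) groups
    ]
    return "\n".join(directives) + "\n"


def write_instructions(path: str, study_uid: str, westat_id: str) -> None:
    """Write the instruction file that queues one study for transmission."""
    with open(path, "w", encoding="utf-8") as f:
        f.write(render_instructions(study_uid, westat_id))


print(render_instructions("1.2.840.1.2.3", "W000042"), end="")
```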

An export service is installed on the CSW that processes the text files and sends images to the QACC using the DICOM protocol (C-Store). This export service applies the changes to each image during the transmission process. The original images are left unchanged on the workstation. The text file with the transmission instructions is retained as a historical record.
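The export step can be sketched as a function that edits a deep copy of each header, so the cached original is never modified. This is a minimal sketch, assuming headers are dicts keyed by (group, element) pairs and using hypothetical directive tuples; the actual export service was built with the MIR CTN toolkit in C++.

```python
import copy


def apply_directives(original: dict, directives: list) -> dict:
    """Return a de-identified copy of an image header; `original` is never modified.

    Headers are dicts keyed by (group, element) integer pairs. Directives are
    hypothetical tuples: ("REMOVE", tag), ("SET", tag, value) or ("REMOVE_PRIVATE",).
    """
    outgoing = copy.deepcopy(original)
    for directive in directives:
        action = directive[0]
        if action == "REMOVE":
            outgoing.pop(directive[1], None)
        elif action == "SET":
            outgoing[directive[1]] = directive[2]
        elif action == "REMOVE_PRIVATE":
            # Private DICOM groups have odd group numbers.
            for tag in [t for t in outgoing if t[0] % 2 == 1]:
                del outgoing[tag]
        else:
            raise ValueError(f"unknown directive: {action!r}")
    return outgoing


header = {
    (0x0010, 0x0010): "DOE^JANE",     # Patient's Name
    (0x0010, 0x0020): "MRN12345",     # Patient ID (local)
    (0x0018, 0x0060): "120",          # KVP
    (0x0019, 0x1001): "vendor data",  # private element
}
sent = apply_directives(header, [
    ("REMOVE", (0x0010, 0x0010)),
    ("SET", (0x0010, 0x0020), "W000042"),
    ("REMOVE_PRIVATE",),
])
print(sorted(sent.items()))
```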

Fig. 1.

QA input system consists of CSW, Nortel VPN, and QACC storage service (DICOM).

The input system uses a Virtual Private Network (VPN) [2] product from Nortel to establish a secure, encrypted tunnel (128-bit key) across the Internet. A VPN concentrator (Nortel model CES1700D) is used to manage the access to the private network at the QACC. A client application from Nortel is loaded on each CSW. Prior to image transmission, the user establishes a VPN session using a log-in/password assigned by the QACC. Once the VPN session is in place, images can be sent over the network using unaltered DICOM C-Store functions. The DICOM applications (both client and server) are unaware of the Nortel software/hardware.

An example of the GUI for the workstation de-identification and image transmission application is shown in Figure 2. The user at the SC sees the name of the participant and fields that are used to change certain values in the DICOM header. The workstation is configured so that the patient name is always erased, even if the user forgets to change it, which eliminates one class of mistakes that can be made at the SC. The figure also shows that the GUI allows the user to replace the patient identifier used by the local institution with one generated by Westat. This blinds the QACC to the identity of the participant, in accordance with HIPAA guidelines. Other identifying information, such as date of birth, is removed automatically by directives in the configuration files.
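The safeguard that the name is erased even if the user forgets can be expressed as a merge in which configured removals always override GUI input. The tag list is illustrative (patient name and birth date, both standard DICOM attributes), and refusing to queue a study without a Westat ID is an added assumption of this sketch.

```python
# Hypothetical site configuration: attributes removed regardless of GUI input.
ALWAYS_REMOVE = {
    (0x0010, 0x0010),  # Patient's Name
    (0x0010, 0x0030),  # Patient's Birth Date
}
PATIENT_ID = (0x0010, 0x0020)


def build_changes(gui_entries: dict) -> dict:
    """Combine GUI entries with mandatory removals.

    Returns a mapping tag -> new value, where None means "remove".
    Configured removals override anything the user typed.
    """
    if not gui_entries.get(PATIENT_ID):
        raise ValueError("a Westat-provided participant ID is required")
    changes = dict(gui_entries)
    for tag in ALWAYS_REMOVE:
        changes[tag] = None
    return changes


# Even if the user typed the name back in, it is still removed.
print(build_changes({PATIENT_ID: "W000042", (0x0010, 0x0010): "DOE^JANE"}))
```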

Fig. 2.

GUI for CSW showing one entry for a screening exam.

When the CSW transmits images to the QACC, the images are stored by a DICOM Storage SCP. The images are held in a staging area in anticipation of processing by QACC staff.

The second component of the QA system is the Web-based review system shown in Figure 3. The CSW shown in Figure 3 is the same workstation shown in Figure 1. In the review system, a Web browser on this computer is used to retrieve images from the QACC storage system and display them for the QA radiologist. The QA radiologist is unable to view the images stored on the CSW for de-identification; there is no viewing software included with the de-identification program.

Fig. 3.

Block diagram of Web-based review system.

A manual step in the review process is handled by staff at the QACC. Each input study is compared with a master list provided by Westat to ensure that the proper studies for QA have been received at the QACC. This includes checking the Westat identifiers entered in the images for accuracy. The input process allows for correction of obvious typographical errors, whereas significant errors (estimated at less than 2% of transmitted studies), such as receipt of the wrong study or an incomplete study, require consultation with personnel at the screening center submitting the image.
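The comparison against Westat's master list can be sketched with set operations. The heuristic that pairs an unexpected identifier with a missing one differing by a single character is a hypothetical aid for spotting typographical errors, and the identifier format is invented; the text says only that staff correct obvious typos and consult the SC about larger discrepancies.

```python
def one_char_off(a: str, b: str) -> bool:
    """True if a and b have the same length and differ in exactly one position."""
    return len(a) == len(b) and sum(x != y for x, y in zip(a, b)) == 1


def reconcile(requested: set, received: set) -> dict:
    """Compare Westat's master list with the identifiers found in received studies."""
    missing = requested - received
    unexpected = received - requested
    likely_typos = {}
    for got in sorted(unexpected):
        candidates = sorted(m for m in missing if one_char_off(got, m))
        if candidates:
            likely_typos[got] = candidates  # suggestions for staff to confirm, not auto-fixes
    return {"missing": sorted(missing), "unexpected": sorted(unexpected),
            "likely_typos": likely_typos}


report = reconcile({"W000101", "W000102", "W000103"}, {"W000101", "W000102", "W000108"})
print(report)
```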

After receipt of the proper study is confirmed, QACC staff use several custom applications to extract parameters from the DICOM header and make entries in a QA database. Parameters that certain vendors do not provide directly in the DICOM header are calculated by QACC staff from the available data (e.g., mAs can be calculated from mA and exposure time). The appropriate set of images for QA is then identified and used for analysis. For CT, the screening protocol calls for slices up to 2.5 mm thick reconstructed with specific filters. Screening centers routinely reconstruct with the appropriate parameters but may also send additional reconstructions; for example, slices reconstructed at 5-mm thickness and/or with different filters may be submitted in addition to those required, but these are not needed for QA review.
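Both steps in this paragraph can be sketched briefly: deriving mAs from tube current and exposure time, and keeping only the series that match the reconstruction protocol. The attribute tags and units in the comments are standard DICOM; the function names and kernel names are hypothetical, and treating the exposure time as per rotation is an assumption, since vendors report it differently.

```python
def mas_from_current_and_time(tube_current_ma: float, exposure_time_ms: float) -> float:
    """mAs = mA x s. X-Ray Tube Current (0018,1151) is in mA and Exposure Time
    (0018,1150) is in ms; assumes the time reported is per tube rotation."""
    return tube_current_ma * exposure_time_ms / 1000.0


def select_qa_series(series: list, accepted_kernels: set, max_thickness_mm: float = 2.5) -> list:
    """Keep only series reconstructed per protocol: thin slices with an accepted filter.

    Each series is a dict with "SliceThickness" (0018,0050) in mm and
    "ConvolutionKernel" (0018,1210); accepted kernel names are vendor-specific.
    """
    return [s for s in series
            if float(s["SliceThickness"]) <= max_thickness_mm
            and s["ConvolutionKernel"] in accepted_kernels]


series = [
    {"SeriesNumber": 2, "SliceThickness": "2.5", "ConvolutionKernel": "LUNG"},
    {"SeriesNumber": 3, "SliceThickness": "5.0", "ConvolutionKernel": "LUNG"},
    {"SeriesNumber": 4, "SliceThickness": "2.5", "ConvolutionKernel": "BONE"},
]
print(mas_from_current_and_time(100, 500))                              # 50.0
print([s["SeriesNumber"] for s in select_qa_series(series, {"LUNG"})])  # [2]
```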

The database entries include the QA radiologist assigned by Westat for this screening study and technical parameters that are listed in Tables 1 and 2. An accession number is assigned by the QACC and used to identify a specific screening exam. The QACC is blinded to the identity of the participant. The accession number generated by the QACC is linked to the Westat identifier but cannot be used to determine the actual identity of an NLST participant.

Table 1.

Technical parameters for CT defined by the NLST protocol

| Technical parameter | Acceptable value or range |
|---|---|
| kVp | 120–140 |
| mAs | 40–80 |
| Effective mAs | 20–60 |
| Pitch | 1.25–2.00 |
| Reconstruction filter | Depends on equipment manufacturer |
| Reconstruction thickness | Up to 2.5 mm |
| Reconstruction interval | ≤ slice thickness |

Table 2.

Technical parameters for CR defined by the NLST protocol

| Technical parameter | Expected value or range |
|---|---|
| kVp | 100–150 |
| mAs | 0.1–20 |
| Exposure time | Up to 40 ms |

The QACC also assigns a new participant identifier that replaces the Westat participant identifier. This identifier and the accession number are both randomly assigned at the QACC and blind the QA radiologist to the screening-center origin of the exam.
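Random, non-sequential identifiers keep the QACC numbers from revealing which center or time period an exam came from. A minimal sketch using Python's `secrets` module follows; the class name and number format are hypothetical, and giving all of a participant's exams the same QACC identifier is an assumption of the sketch.

```python
import secrets


class BlindingTable:
    """Hypothetical QACC-side table linking Westat identifiers to random QACC numbers."""

    def __init__(self, digits: int = 8):
        self.digits = digits
        self._participant = {}  # Westat participant ID -> QACC participant ID
        self._accession = {}    # QACC accession number -> Westat participant ID
        self._issued = set()    # every number issued so far, so none is reused

    def _random_number(self) -> str:
        while True:
            n = f"{secrets.randbelow(10 ** self.digits):0{self.digits}d}"
            if n not in self._issued:
                self._issued.add(n)
                return n

    def participant_id(self, westat_id: str) -> str:
        """Same QACC ID for every exam of a participant (an assumption of this sketch)."""
        if westat_id not in self._participant:
            self._participant[westat_id] = self._random_number()
        return self._participant[westat_id]

    def new_accession(self, westat_id: str) -> str:
        """One accession number per screening exam; the link is kept only at the QACC."""
        acc = self._random_number()
        self._accession[acc] = westat_id
        return acc


table = BlindingTable()
print(table.participant_id("W000101"), table.new_accession("W000101"))
```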

After the parameters are entered in the QA database, the one series of images required for QA review is sent to a Philips (Philips Medical Systems, Andover, MA) EasyWeb system. This is a commercial, Web-based product that can be used to navigate and display common radiological images, including the CT and CR images typically used for this screening protocol. The images sent to the EasyWeb system contain the same QACC-assigned participant ID and accession number that were entered in the QA database. A final manual step is performed on the EasyWeb system to assign the new imaging study to a specific QA radiologist. The EasyWeb software allows each QA radiologist to see only his or her assigned cases, reducing the navigation time.

The QA radiologist uses a Web browser (Internet Explorer) to connect to the Web Data Entry system. This custom-written software gives each radiologist a worklist of studies to review. An example worklist is shown in Figure 4 for a fictitious reviewer.

Fig. 4.

Example of worklist page for QA radiologist.

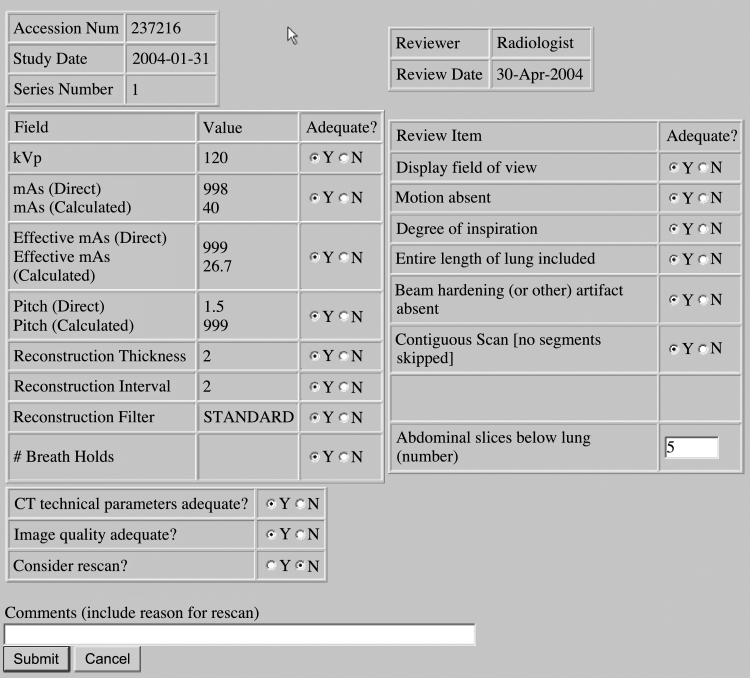

In the real system, each reviewer sees only their own worklist, which is associated with the individual reviewer’s log-in user name and password. When the QA radiologist selects one entry from the list, the Web system produces a page that lists the technical parameters for the study and a series of QA questions concerning both the technical parameters and image quality. The technical parameters are automatically extracted from the QA database. A sample CT review form is shown in Figure 5.
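Restricting each reviewer to their own worklist amounts to filtering pending studies by log-in name. The production system used PostgreSQL with Java servlets under Apache Tomcat (Table 3); purely to illustrate the query, the sketch below uses Python's built-in `sqlite3` as a stand-in, and the table and column names are hypothetical.

```python
import sqlite3

SCHEMA = """
CREATE TABLE qa_study (
    accession TEXT PRIMARY KEY,
    modality  TEXT NOT NULL,             -- 'CT' or 'CR'
    reviewer  TEXT NOT NULL,             -- log-in name of the assigned QA radiologist
    completed INTEGER NOT NULL DEFAULT 0
);
"""


def worklist(conn: sqlite3.Connection, reviewer: str) -> list:
    """Pending studies for one reviewer only (parameterized query)."""
    rows = conn.execute(
        "SELECT accession, modality FROM qa_study "
        "WHERE reviewer = ? AND completed = 0 ORDER BY accession",
        (reviewer,),
    )
    return rows.fetchall()


conn = sqlite3.connect(":memory:")
conn.executescript(SCHEMA)
conn.executemany(
    "INSERT INTO qa_study (accession, modality, reviewer, completed) VALUES (?, ?, ?, ?)",
    [("A001", "CT", "rad1", 0), ("A002", "CR", "rad2", 0), ("A003", "CT", "rad1", 1)],
)
print(worklist(conn, "rad1"))  # [('A001', 'CT')]
```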

Fig. 5.

Example of screening form for CT QA review.

The QA radiologist opens a second Web browser to connect to the Philips EasyWeb system to review the CT and chest X-ray images. The QACC coordinates a worklist on the EasyWeb system so a radiologist sees his or her assigned QA studies. Once the radiologist locates the appropriate images on the EasyWeb system, he or she reviews the study using the interface shown in Figure 6. The QA radiologist is not able to view images in the CSW input storage that will be transmitted to the QACC; the radiologist can only view de-identified images using the EasyWeb system.

Fig. 6.

Graphical user interface of Philips EasyWeb system.

The QA radiologist evaluates the correctness of the technical parameters as well as the qualitative aspects of the imaging study (e.g., presence of motion artifact, appropriate field of view, overall image quality, etc.). The evaluation of the QA radiologist is submitted electronically, and the radiologist’s entries are automatically transmitted to the QA database.
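The text does not say that out-of-range values were flagged automatically; the radiologist judges them. As a hypothetical illustration of how the Table 1 limits could be encoded to pre-highlight values on the review form, a minimal sketch follows. The parameter names are invented, and the manufacturer-dependent reconstruction filter is not checked.

```python
# Acceptable CT ranges from Table 1 (inclusive bounds).
CT_LIMITS = {
    "kVp": (120, 140),
    "mAs": (40, 80),
    "effective_mAs": (20, 60),
    "pitch": (1.25, 2.00),
}
MAX_RECON_THICKNESS_MM = 2.5


def out_of_range(params: dict) -> list:
    """Names of CT parameters outside Table 1 limits; missing values are reported too."""
    problems = [name for name, (low, high) in CT_LIMITS.items()
                if params.get(name) is None or not low <= params[name] <= high]
    thickness = params.get("recon_thickness_mm")
    if thickness is None or thickness > MAX_RECON_THICKNESS_MM:
        problems.append("recon_thickness_mm")
    interval = params.get("recon_interval_mm")
    # Reconstruction interval must not exceed the slice thickness.
    if interval is None or thickness is None or interval > thickness:
        problems.append("recon_interval_mm")
    return problems


study = {"kVp": 120, "mAs": 80, "effective_mAs": 64, "pitch": 1.25,
         "recon_thickness_mm": 2.5, "recon_interval_mm": 1.25}
print(out_of_range(study))  # ['effective_mAs']
```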

One QA radiologist evaluates each screening exam. If that radiologist recommends that an exam be repeated (because of poor quality), the QACC enters that study for review by two other radiologists, who are both unaware that the study has been entered for review by the others. If one or both of the additional reviewers recommend a repeat, Westat notifies the screening center and recommends that it consider repeating the screen. The screening center has the final decision on repeat screens, as there may be participant-related extenuating circumstances to consider.
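The escalation rule described above can be written as a small decision function. This is a sketch only; the outcome names are hypothetical and, as the text notes, the screening center makes the final decision on any repeat.

```python
from enum import Enum


class Outcome(Enum):
    ACCEPT = "accept"                   # primary reviewer did not recommend a repeat
    PENDING_SECOND_REVIEWS = "pending"  # two more blinded reviews still needed
    NOTIFY_CENTER = "notify"            # Westat advises the center to consider a repeat
    NO_REPEAT_ADVISED = "no_repeat"     # neither additional reviewer recommends a repeat


def repeat_decision(primary_repeat: bool, additional: list) -> Outcome:
    """`additional` holds repeat recommendations (bools) from the two extra reviewers."""
    if not primary_repeat:
        return Outcome.ACCEPT
    if len(additional) < 2:
        return Outcome.PENDING_SECOND_REVIEWS
    if any(additional):  # one or both additional reviewers recommend a repeat
        return Outcome.NOTIFY_CENTER
    return Outcome.NO_REPEAT_ADVISED


print(repeat_decision(True, [False, True]))
```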

The QACC submits monthly reports to Westat that include the technical parameters recorded for each imaging study and the QA radiologists’ observations concerning those parameters and image quality. Westat uses these raw reports to generate quarterly and yearly reports that are sent to the QA Committee and used to provide feedback to individual screening centers and suggest improvements if necessary.
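To illustrate the monthly-to-quarterly rollup, the sketch below groups the monthly review counts from Table 5 by calendar quarter. The real reports also carry technical parameters and radiologists' observations; only counts are shown here, and the function is hypothetical.

```python
from collections import defaultdict

# Monthly review counts (CT, CR) for 2003, taken from Table 5.
MONTHLY = {
    5: (8, 0), 6: (169, 39), 7: (61, 22), 8: (45, 9),
    9: (27, 2), 10: (43, 7), 11: (38, 22), 12: (29, 15),
}


def quarterly(monthly: dict) -> dict:
    """Sum (CT, CR) counts by calendar quarter (1-4)."""
    totals = defaultdict(lambda: [0, 0])
    for month, (ct, cr) in monthly.items():
        quarter = (month - 1) // 3 + 1
        totals[quarter][0] += ct
        totals[quarter][1] += cr
    return {q: tuple(v) for q, v in sorted(totals.items())}


print(quarterly(MONTHLY))  # {2: (177, 39), 3: (133, 33), 4: (110, 44)}
```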

Installation of Clinical Studies Workstation

The CSW was originally designed for a different clinical study [1] and modified for use in the NLST. Each monitor/workstation pair was calibrated at the QACC before shipment to the screening centers. This was essential so that QA radiologists would see consistent display quality during the QA process. Although only three QA radiologists served during the first year, all systems were calibrated in the event that this duty was rotated among the sites. The QA radiologists use these calibrated systems for QA review and do not use other desktop machines. This allows the QACC to maintain quality control over the imaging displays. Calibration is easily checked by viewing a test pattern placed on each CSW.

After on-site assistance was provided by QACC staff for the first two screening centers, site installation of the CSW was performed by SC staff, with E-mail and telephone support from the QACC. Each site chose its own location for the workstation: some near the PACS, others in the office of a research coordinator. Network configuration to support the Nortel VPN was the most time-consuming task. The VPN client software on the Windows PC communicates with the Nortel concentrator at the QACC and requires placement of conduits in firewalls both at the QACC and at each screening center. Addressing this requirement with each screening center network group and testing took several months during the installation phase.

Software Components

The QACC system uses a number of different software components to build its applications. Table 3 lists the applications in the system and the software technology used to construct them.

Table 3.

Software Components in QACC

| Application | Software component | Use |
|---|---|---|
| CSW | MIR CTN | DICOM communication and image storage |
| | Microsoft Visual Studio (C++) | GUI and system services |
| QA database | PostgreSQL | Relational database |
| QA web server | Apache Tomcat | Web server |
| | Java servlets | Underlying component for servicing web requests and providing web pages |
| Imaging web server | Philips EasyWeb | Displays images to QA radiologists; standard installation with no modifications |
| Virtual private network | Nortel VPN Concentrator | Provides secure transmission |

Timeline

Table 4 shows some of the major events in the design and deployment of this system. The start of the QA process was delayed unpredictably for two reasons: SC-specific network configuration issues, which took variable lengths of time to resolve, and changes to the custom Web-based QA response system requested by the QA Working Group. As the table shows, recruitment and screening began before the QA system was operational. This created a backlog of studies from the first few months of screening, which the QA radiologists promptly cleared.

Table 4.

Milestone dates in deployment of NLST QA review system

| Date | Event |
|---|---|
| July 2002 | Begin design work of QA system |
| August 2002 | Begin receiving test images from screening centers, developing QA database, and user interface |
| October 2002 | NLST begins recruiting participants. EasyWeb server received and installed |
| December 2002 | Begin shipping CSW PCs to screening centers. Begin installation with network administrators |
| March 2003 | Begin receiving studies selected for QA review from screening centers |
| May 2003 | Begin QA process with radiologists |

Table 5 shows the number of CT and chest X-ray exams reviewed during the first months of operation (May through December 2003). The CT counts are higher than the X-ray counts for two reasons. First, some chest X-rays are interpreted on film and are reviewed during quarterly site visits by a QA radiologist. Second, obtaining accurate kVp and mAs values from the DICOM image headers of many CR devices proved difficult and, in some cases, impossible. Many chest X-ray reviews were therefore delayed until more accurate data, recorded on screening data forms by the technologists at the screening centers, could be obtained.

Table 5.

QA reviews completed, May–December 2003

| Month | CT studies reviewed | CR studies reviewed |
|---|---|---|
| May 2003 | 8 | 0 |
| June 2003 | 169 | 39 |
| July 2003 | 61 | 22 |
| August 2003 | 45 | 9 |
| September 2003 | 27 | 2 |
| October 2003 | 43 | 7 |
| November 2003 | 38 | 22 |
| December 2003 | 29 | 15 |
| Total | 420 | 116 |

The system is designed to allow an arbitrary number of QA radiologists. The first year of review was completed using three radiologists. A fourth radiologist was subsequently added to distribute the QA load (both by softcopy and for site visits).

Discussion

Our experience with acquiring digital images in a multicenter trial was similar to that of Ingeholm et al. [3], with several notable differences.

Installing VPN-enabled software at 10 external sites proved to be a challenging task. Network administrators at each site had their own requirements for documenting the network changes needed to enable VPN communication. Because of firewall issues at some external sites, Ingeholm et al. were forced to resort to different network protocols (e.g., ftp) for different sites. All PLCO-NLST SCs were able to modify their firewalls, so it was not necessary to resort to ftp.

We installed custom software and provided a PC for demographic scrubbing and image transmission; PHI is removed before images are transmitted to the QACC. Supplying the PC with preloaded software may have made it easier to maintain a uniform approach to networking. It certainly simplified the image registration process at the QACC.

Our system removed PHI at each screening center rather than removing it at the central location. This was preferable based on IRB policies at the cooperating institutions.

Obtaining the correct values for certain technical parameters from the equipment of different manufacturers was a time-consuming process because the parameters are stored in different locations in the DICOM headers. For example, multidetector CT scanners have two measures of exposure, mAs and effective mAs. Effective mAs is calculated as the ratio mAs/pitch, where pitch is a measure of how far the table moves during one rotation of the x-ray tube, relative to the width of the x-ray beam. Greater table movement per rotation (higher pitch) implies lower effective mAs and, therefore, lower exposure. Because pitch and mAs affect image quality and radiation exposure, and have protocol-defined acceptable ranges, monitoring them is essential to quality control.
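As a worked illustration using the ranges in Table 1 (the pitch definition below is the conventional one for multidetector CT, not quoted from the NLST protocol):

$$
\text{effective mAs} = \frac{\text{mAs}}{\text{pitch}}, \qquad
\text{pitch} = \frac{\text{table feed per rotation}}{\text{total nominal beam collimation}}
$$

$$
\frac{60\ \text{mAs}}{1.5} = 40\ \text{effective mAs}, \qquad
\frac{80\ \text{mAs}}{1.25} = 64\ \text{effective mAs}
$$

The first combination falls inside the Table 1 range for effective mAs (20–60). The second does not, even though its mAs (80) and pitch (1.25) each lie within their own ranges, which is one reason all three values need to be checked.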

Some manufacturers report mAs in the DICOM attribute (0018,1152) Exposure; other manufacturers use that attribute to report effective mAs. Some DICOM conformance statements are helpful in gleaning this information, but for some scanners, we had to contact the engineering divisions of the corporations. In the end, we had to write custom software for each model of CT scanner that we encountered (a total of eight different models from four different manufacturers). Custom software modifications were needed to calculate pitch, mAs, and effective mAs for each type of instrument.
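The per-model handling described above can be sketched as a lookup table recording what the Exposure attribute holds on each scanner, from which both mAs and effective mAs follow via effective mAs = mAs/pitch. The manufacturer and model names are placeholders, and pitch is passed in because it is often not available in a standard attribute.

```python
# Standard DICOM attributes.
MANUFACTURER = (0x0008, 0x0070)
MODEL_NAME = (0x0008, 0x1090)  # Manufacturer's Model Name
EXPOSURE = (0x0018, 0x1152)    # Exposure, nominally in mAs

# Hypothetical per-model rules: what the Exposure attribute actually holds on each scanner.
# Vendor/model names are placeholders; the eight real models are not named in the text.
EXPOSURE_MEANING = {
    ("VendorA", "ModelX"): "mAs",
    ("VendorB", "ModelY"): "effective_mAs",
}


def exposure_values(header: dict, pitch: float) -> dict:
    """Return both mAs and effective mAs, using effective mAs = mAs / pitch."""
    key = (header[MANUFACTURER], header[MODEL_NAME])
    meaning = EXPOSURE_MEANING.get(key)
    if meaning is None:
        raise KeyError(f"no rule for {key}; consult the conformance statement or vendor")
    value = float(header[EXPOSURE])
    if meaning == "mAs":
        return {"mAs": value, "effective_mAs": value / pitch}
    return {"mAs": value * pitch, "effective_mAs": value}


print(exposure_values({MANUFACTURER: "VendorA", MODEL_NAME: "ModelX", EXPOSURE: "60"}, 1.5))
```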

In addition, some of the CT technical parameters are stored in private groups. This is mainly because the newer multidetector spiral scanners required in this protocol were designed after the original definition of the DICOM CT image object, before standard attributes for parameters such as pitch and number of active channels had been defined. The CSW software was configured to pass the private group containing this information on a per-site basis, depending on the manufacturer. During testing, we found that one manufacturer stored both the information we need (CT pitch data) and patient demographics in the same private element (a composite value with several data fields). We modified the CSW software to perform the needed calculation at the screening center and to pass the technical parameters to us without the PHI.
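Performing the calculation at the screening center means only the derived value leaves the site. The vendor's composite format is not described in the text, so the sketch below assumes a hypothetical backslash-separated layout with invented field positions, and uses the conventional definition of pitch (table feed per rotation divided by total beam collimation).

```python
# Hypothetical layout of the vendor's composite private value. The real field order is
# not given in the text; here field 0 is the patient name, field 1 the birth date, and
# fields 3 and 4 the table feed per rotation and total beam collimation (both in mm).
FIELD_TABLE_FEED_MM = 3
FIELD_COLLIMATION_MM = 4


def technical_only(composite: str) -> dict:
    """Compute pitch at the screening center and return only technical data (no PHI)."""
    fields = composite.split("\\")  # DICOM separates multiple values with a backslash
    table_feed = float(fields[FIELD_TABLE_FEED_MM])
    collimation = float(fields[FIELD_COLLIMATION_MM])
    return {"pitch": round(table_feed / collimation, 3)}


print(technical_only("DOE^JANE\\19450101\\HELICAL\\30\\20"))  # {'pitch': 1.5}
```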

Availability of technical parameters from the CR devices (four different models from four different manufacturers) also was inconsistent. Some equipment does not provide values for mAs and/or kVp. Other scanners are configured to provide estimates of these values based on the assumed protocol, but there is no guarantee that these were the values used during the imaging process. For chest radiographs, it has been necessary to rely more on the parameters recorded on data forms by the SC technologists; these values are provided to the QACC by Westat and manually entered into the database for electronic display to the QA radiologists.
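One way to express relying more on the data forms is an explicit precedence rule that also records where each displayed value came from. The rule and function below are assumptions for illustration, not the QACC's documented procedure.

```python
from typing import Optional


def cr_parameter(form_value: Optional[float], header_value: Optional[float],
                 header_is_estimate: bool) -> tuple:
    """Choose the value to display for a CR parameter (kVp or mAs) and its source.

    Hypothetical rule: technologist-recorded form values take precedence; header values
    are used only when they are actual values rather than protocol-based estimates.
    """
    if form_value is not None:
        return form_value, "data form"
    if header_value is not None and not header_is_estimate:
        return header_value, "DICOM header"
    return None, "unavailable"


print(cr_parameter(None, 120.0, header_is_estimate=True))  # (None, 'unavailable')
```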

Westat delivers the list of images for QA review to the SCs and the QACC 15 days after the end of each month. Screening centers begin sending images to the QACC within a few days of receiving the request from Westat, but the SC transmission activity is usually uneven. The QACC personnel enter the images for review into our system over a period of 1–2 weeks. E-mail notification is sent to QA reviewers as soon as images are entered into the system, so QA review can begin immediately.
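The schedule arithmetic can be made concrete with a small helper, assuming "15 days after the end of each month" means 15 calendar days after the month's last day; the function name is illustrative.

```python
import calendar
import datetime


def qa_list_date(year: int, month: int, delay_days: int = 15) -> datetime.date:
    """Date the QA list for (year, month) is delivered: delay_days after the month's last day."""
    last_day = calendar.monthrange(year, month)[1]
    return datetime.date(year, month, last_day) + datetime.timedelta(days=delay_days)


print(qa_list_date(2003, 5))   # 2003-06-15
print(qa_list_date(2003, 12))  # 2004-01-15
```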

Potential improvements to the system have been recognized. The current process for entering studies into the QA system requires multiple manual steps and command-line input to programs that could be automated. Software will be developed during the third year of the process to include a Web-based user interface to reduce the time required for data entry.

Conclusion

The QACC hardware, software, and network design provide the infrastructure to support an efficient multicenter image QA monitoring program.

Acknowledgments

This work was performed under the NIH/NCI project National Lung Screening Trial, contract number N01-CN-25516. We are grateful to the following individuals for their help during this project: John Gohagen, former PLCO and NLST Project Officer, for his leadership and direction during the design and implementation of the image QA process; the QA radiologists for feedback in helping to guide the GUI design; the network administrators at the screening centers for their persistence in establishing the necessary network connections; the screening center coordinators for keeping up with the requirements for data submission; our system manager, Paul Koppel, and network administrator, Stan Phillips; and Kirk Smith at the QACC for help during initial database design and image management.

Footnotes

*Quality Assurance Working Group: Timothy Church, Ph.D., Jill Cordes, R.N., Richard Fagerstrom, Ph.D., Glenn Fletcher, Ph.D., Mike Flynn, Ph.D., Melissa Ford, Ph.D., Melissa Fritz, M.P.H., Kavita Garg, M.D., David Gierada, M.D., Fred Larke, M.S., Guillermo Marquez, Ph.D., Alisha Moore, M.S.N., C.R.N.P., Hrudaya Nath, M.D., Pete Ohan, B.S., Tom Payne, Ph.D., and Xizeng Wu, Ph.D.

References

1. Moore SM, Maffitt DR, Blaine GJ, Bae KT: A work station acquisition node for multi-center imaging studies. In: Medical Imaging 2001: PACS Design and Evaluation: Engineering and Clinical Issues, Vol. 4323, San Diego, CA. Bellingham, WA: SPIE-The International Society for Optical Engineering, 2001, pp 271–277

2. Newman D, Olewnick D: IPSec VPNs: How Safe? How Speedy? CommWeb.com, 2000. http://www.Commweb.com/article/COM20000912S0009

3. Ingeholm ML, Levine BA, Fatemi SA, Moser HW: A pragmatic discussion on establishing a multicenter digital imaging network. J Digit Imaging 15(Suppl 1):180–183, 2002. doi: 10.1007/s10278-002-5058-1