Abstract

Artificial intelligence (AI)-based methods are showing substantial promise in segmenting oncologic positron emission tomography (PET) images. For clinical translation of these methods, assessing their performance on clinically relevant tasks is important. However, these methods are typically evaluated using metrics that may not correlate with the task performance. One such widely used metric is the Dice score, a figure of merit that measures the spatial overlap between the estimated segmentation and a reference standard (e.g., manual segmentation). In this work, we investigated whether evaluating AI-based segmentation methods using Dice scores yields a similar interpretation as evaluation on the clinical tasks of quantifying metabolic tumor volume (MTV) and total lesion glycolysis (TLG) of primary tumor from PET images of patients with non-small cell lung cancer. The investigation was conducted via a retrospective analysis with the ECOG-ACRIN 6668/RTOG 0235 multi-center clinical trial data. Specifically, we evaluated different structures of a commonly used AI-based segmentation method using both Dice scores and the accuracy in quantifying MTV/TLG. Our results show that evaluation using Dice scores can lead to findings that are inconsistent with evaluation using the task-based figure of merit. Thus, our study motivates the need for objective task-based evaluation of AI-based segmentation methods for quantitative PET.

Keywords: Task-based evaluation, artificial intelligence, segmentation, quantification, positron emission tomography

1. INTRODUCTION

Quantitative positron emission tomography (PET) is showing substantial promise in multiple clinical applications. A major area of interest is in evaluating tumor volumetric features, including metabolic tumor volume (MTV) and total lesion glycolysis (TLG), as prognostic and predictive biomarkers.1,2 Similarly, there is substantial interest in evaluating PET-derived radiomic features (e.g., intra-tumor heterogeneity) as biomarkers for predicting cancer treatment outcomes.3,4 In all these applications, accurate segmentation of tumors on PET images is required. Given this clinical importance, multiple segmentation methods are being actively developed for oncologic PET. In particular, artificial intelligence (AI)-based segmentation methods have been showing substantial promise in yielding tumor delineations on oncologic PET images.5–8 For clinical translation of these methods, appropriate evaluation procedures are required.

Medical images are acquired for a specified clinical task. Thus, it is widely recognized that imaging systems and algorithms be evaluated on their performance in the clinical task. In this context, strategies for performing task-based assessment of image quality have been developed.9–12 However, AI-based algorithms are often evaluated using metrics that may not account for the clinical task of interest. This can be an issue since evaluation using these task-agnostic metrics may yield interpretations that are not consistent with evaluation on the clinical task. For example, Yu et al.13 observed that evaluation of an AI-based denoising method using conventional fidelity-based metrics such as the structural similarity index measure (SSIM) led to findings that were inconsistent with evaluation on the clinical task of detecting myocardial perfusion defects. Similar findings have been observed in multiple other studies,14–16 all motivating the need for task-based evaluation of AI-based denoising algorithms.

Similar to the AI-based denoising methods, currently, AI-based segmentation methods are also typically evaluated using metrics that are not explicitly designed to measure performance on clinical tasks.12,17 These metrics quantify the similarity between the estimated segmentation and a reference standard (e.g., manual segmentation). One such commonly used metric is the Dice score.18 This metric measures the spatial overlap between the reference standard and estimated segmentation. A higher value of the Dice score is often used to infer a more accurate performance. Other similar metrics include the Hausdorff distance and Jaccard similarity coefficient. However, as mentioned above, these metrics may not correlate with the task performance. For example, on the clinical task of quantifying the MTV of primary tumor, it is unclear how the value of Dice score correlates with the accuracy in the quantified MTV.

Given the wide use of task-agnostic metrics to evaluate AI-based segmentation methods for oncologic PET, the goal of our work was to investigate whether such evaluation yields a similar inference to evaluation based on the performance in clinical tasks. Towards this goal, we conducted a retrospective study with the ECOG-ACRIN 6668/RTOG 0235 multi-center clinical trial data.19,20 In this study, we compared the inferences obtained from evaluation of a commonly used AI-based segmentation method using the task-agnostic metric of Dice score vs. performance on the clinical tasks of quantifying the MTV and TLG of primary tumor from PET images of patients with stage IIB/III non-small cell lung cancer (NSCLC).

2. METHODS

In this section, we first present the procedure to collect the oncologic PET images and obtain segmentation annotations (sec. 2.1). We then describe the considered AI-based method to segment the primary tumor on those PET images in section 2.2. Finally, in sections 2.3 and 2.4, we describe the procedure to evaluate this method based on the Dice score and task-based figure of merit.

2.1. Data collection and curation

In this study, de-identified 18F-fluorodeoxyglucose (FDG)-PET and computed tomography (CT) images of 225 patients with stage IIB/III NSCLC were collected from The Cancer Imaging Archive (TCIA) database.21 All the FDG-PET/CT images were obtained prior to chemoradiotherapy. For each patient, a board-certified physician (J.C.M) with more than 10 years of experience in reading nuclear medicine scans segmented the primary tumor on the PET images. Specifically, the physician first identified the primary tumor by reviewing the PET/CT images in axial, sagittal, and coronal planes using a vendor-neutral software (MIM Encore 6.9.3; MIM Software Inc., Cleveland, OH). The physician then used an edge-detection tool provided by MIM Encore to obtain an initial boundary of the tumor. This boundary, after being examined and adjusted by the physician, was used to obtain the eventual segmentation of the primary tumor. In our evaluation study, these manual delineations were considered as a surrogate for ground truth for training and evaluating the considered AI-based segmentation method, as will be described in the next section.

2.2. Considered AI-based segmentation method

2.2.1. Network architecture

We considered a commonly used U-net-based convolutional neural network (CNN) model5,6,22 to segment the primary tumor on 3-D PET images on a per-slice basis. Specifically, given a PET image slice that contains the primary tumor, the CNN model segmented the tumor by performing voxel-wise classification (figure 1). This CNN model was partitioned into an encoder and a decoder. The encoder was designed to extract spatially local features of the input PET images through successive blocks of convolutional layers. The decoder was then designed to use these extracted features to segment the primary tumor through blocks of transposed convolutional layers. After each layer in the encoder and decoder, a leaky rectified linear unit activation function was applied. Additionally, between the block of layers in the encoder and decoder, skip connections with element-wise addition were applied to stabilize the network training.23 Further, dropout was applied in each layer to reduce network overfitting. In the final layer of the CNN model, a softmax function was applied to the output of the decoder to perform the voxel-wise tumor classification.

Figure 1.

Architecture of the considered commonly used U-net-based convolutional neural network model.

2.2.2. Network training

Of the total 225 patients, 180 were used for training the considered CNN model. During training, 2-D PET images with the corresponding surrogate ground truth (i.e., manual segmentations obtained following the procedure described in section 2.1) were input to the network. The network was then trained to minimize a cost function based on the binary cross-entropy (BCE) loss between the true and estimated segmentations, using the Adam optimization algorithm.24 Network hyperparameters were optimized via five-fold cross-validation on the training data. Data from the remaining 45 patients were reserved for evaluating the trained network, as will be described in the next section.

2.3. Evaluation of the considered AI-based method

An important factor that is known to impact the performance of AI-based methods is the choice of network depth.25 Thus, we investigated whether evaluating the considered CNN model with different network depths based on the Dice scores yields a similar interpretation to evaluation based on clinical-task performance. Specifically, we varied the depth of the CNN model by setting the paired number of blocks of layers in the encoder and decoder (section 2.2.1) to 2, 3, 4, and 5. For each choice of depth, the network was independently trained and cross-validated on images of 180 patients (section 2.2.2). The trained network was then evaluated on the 45 test patients based on both the task-agnostic metric of Dice scores and performance on quantifying the MTV/TLG of the primary tumor, as will be detailed in the next section.

2.4. Figures of Merit for evaluation

The performance of the considered CNN model was first quantified using the task-agnostic figure of merit of Dice score. As introduced in section 1, the Dice score measures the spatial overlap between the true and estimated tumor segmentations, denoted by St and Se, respectively. Denote the overlap between St and Se by St ∩ Se, with |St ∩ Se| denoting the number of voxels that are correctly classified as belonging to the tumor class. For each patient, the Dice score is given by

| (1) |

We then evaluated the CNN model on the clinical tasks of quantifying the MTV and TLG of the primary tumor. The accuracy in estimating the MTV/TLG was quantified based on absolute ensemble normalized bias (NB) between the MTV/TLG obtained with the manual segmentation (considered as ground truth) and those obtained with the segmentation yielded by the CNN model. Denote the number of patients by P. For the pth patient, denote the true and estimated MTV by Vp and , respectively. Similarly, denote the true and estimated TLG of the pth patient by Gp and , respectively. The absolute ensemble NB of the estimated MTV and TLG are given by

| (2a) |

| (2b) |

A lower value of absolute ensemble NB indicates a higher accuracy in the estimated MTV/TLG.

3. RESULTS

Figure 2(A) shows the performance of the considered CNN model with different network depths, as quantified using both the task-agnostic metric of Dice scores and the task-based figure of merit of absolute ensemble NB. Based on the Dice scores, we observe that there was no significant difference (p < 0.01) between the different network depths. However, deeper networks (with more than three paired blocks of convolutional layers) actually yielded substantially lower values of absolute ensemble NB in estimated MTV/TLG.

Figure 2.

Task-based evaluation of the impact of network depth on the performance of the considered CNN model. (A) Performance quantified using the task-agnostic Dice scores and task-based figure of merit of absolute ensemble normalized bias; (B) Violin plot showing the distributions of the Dice scores and normalized error in estimated MTV/TLG for a shallower and deeper network, respectively.

Figure 2(B) shows the distributions of the Dice scores and normalized error in estimated MTV/TLG for a shallower and a deeper network. We observe that the deeper network yielded normalized errors that were more evenly distributed around zero and less positively skewed. Consequently, this led to a lower value of absolute ensemble NB approaching zero.

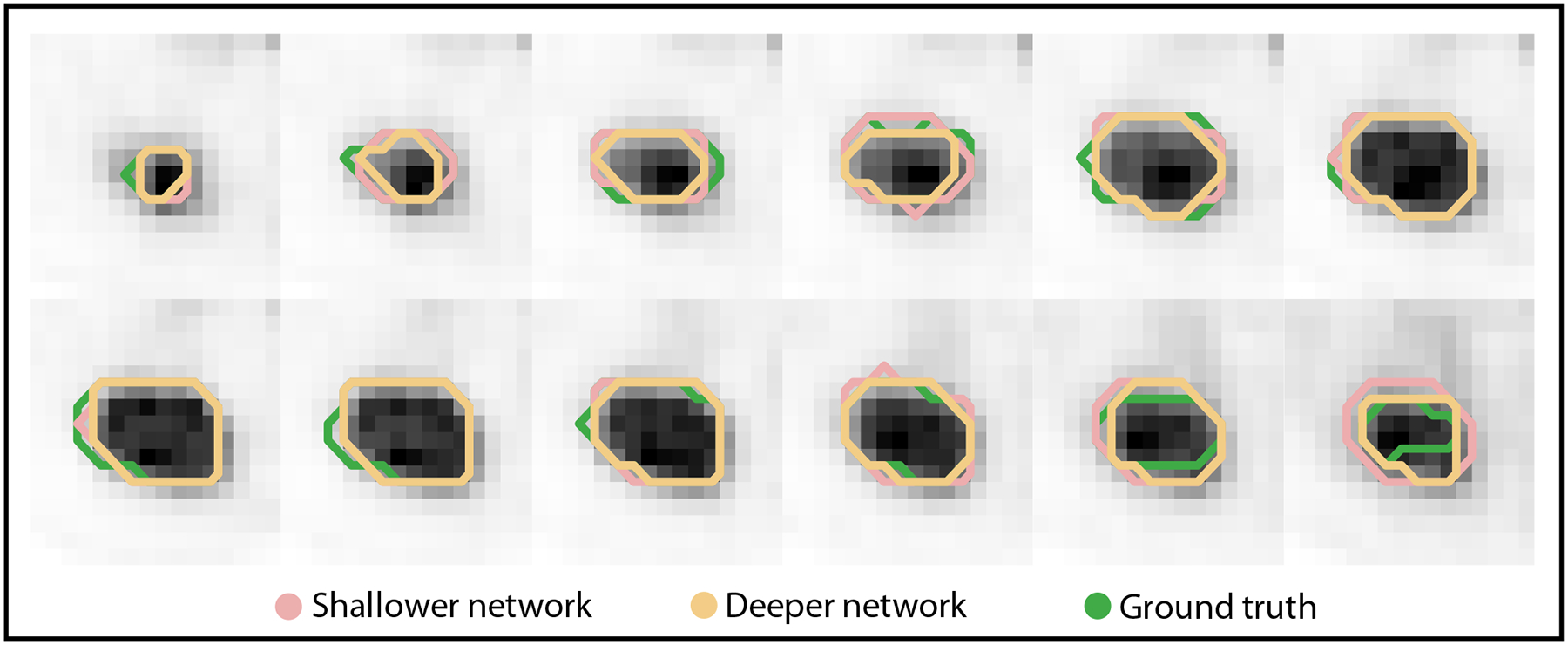

Figure 3 shows the qualitative comparison of segmentations of the primary tumor in a representative test patient as yielded by the shallower and deeper network. For this patient, the deeper network yielded very similar Dice scores compared to the shallower network (0.90 vs. 0.91). However, the deeper network yielded substantially lower normalized error in both the estimated MTV (0.02 vs. 0.11) and TLG (0.03 vs. 0.09).

Figure 3.

Segmentations of the primary tumor in a representative patient obtained with a shallower and a deeper network. The segmentations for each of the slices that contained the tumor are shown. For this patient, the Dice scores were very similar between the shallower and deeper network, even though the errors in estimating MTV/TLG were very different. We also note that visually, the segmentations are different for the shallower vs. deeper networks.

4. DISCUSSION AND CONCLUSION

AI-based segmentation methods are showing substantial promise in oncologic PET. However, these methods have typically been evaluated using metrics that are task-agnostic, such as the Dice score. Since medical images are acquired for a specified clinical task, it is important that the AI-based methods be objectively evaluated using figures of merit that directly correlate with that task. The key contribution of our study is to verify this important need by investigating whether evaluation of AI-based segmentation methods using the task-agnostic Dice scores yields similar interpretations to evaluation on the clinical tasks of quantifying the MTV/TLG of primary tumor from PET images of patients with NSCLC.

Our results from section 3 indicate that evaluation of a commonly used AI-based segmentation method using the task-agnostic Dice score could lead to findings that were discordant with evaluation on the clinical tasks of MTV/TLG quantification. Initially, we observe in figure 2(A) that deeper networks yielded very similar Dice scores compared to shallower networks. This could then lead to the inference that a deeper network did not improve the performance on segmenting the tumor. Given the high demand for computational resources when training AI-based methods, one may prefer to deploy shallower networks in clinical studies from this initial inspection. However, our results show that a deeper network actually demonstrated more accurate performance on the clinical tasks of MTV/TLG quantification by yielding substantially lower values of absolute ensemble NB. These results indicate the limited ability of the Dice score to objectively evaluate AI-based segmentation methods, thus demonstrating the importance of task-based evaluation.

Evaluation of the effect of AI-based segmentation methods on quantitative tasks requires the knowledge of true quantitative values of interest. However, such ground truth may typically be unavailable in clinical studies. We circumvented this challenge by considering the manual segmentation as defined by the physician as the ground truth. However, we recognize this as a limitation of this study. To address this limitation, no-gold-standard evaluation techniques have been developed.26–29 These techniques have demonstrated the efficacy in evaluating PET segmentation methods on clinically relevant tasks even without any knowledge of the ground truth.30–32 Thus, these techniques could provide a mechanism to perform objective task-based evaluation of segmentation methods in the absence of ground truth. Another limitation of this study is that we evaluated the considered AI-based segmentation method only on the clinical tasks of quantifying MTV/TLG. Expanding our evaluation for other tasks such as quantifying intra-tumor heterogeneity is an important future research direction.

The findings from our study further motivate the recently published Recommendations for Evaluation of AI for NuClear medicinE (RELAINCE) guideline33 proposed by the SNMMI AI task force. The task force advocated that while task-agnostic metrics such as the Dice score are valuable for assessing the initial promise of a segmentation method, it is important to further quantify the technical performance of the method on the clinical task for which imaging is performed. Our results further confirmed the important need for this task-based evaluation.

In conclusion, the conducted retrospective analysis with the ECOG-ACRIN 6668/RTOG 0235 multi-center clinical trial data shows that evaluation of a commonly used AI-based segmentation method using the task-agnostic metric of Dice scores can lead to findings that are inconsistent with task-based evaluation. Thus, the results emphasize the need for objective task-based evaluation of AI-based segmentation methods for quantitative PET.

ACKNOWLEDGEMENTS

Financial support for this work was provided by the National Institute of Biomedical Imaging and Bioengineering R01-EB031051, R01-EB031962, R56-EB028287, and R21-EB024647 (Trailblazer Award).

REFERENCES

- [1].Chen HH, Chiu N-T, Su W-C, Guo H-R, and Lee B-F, “Prognostic value of whole-body total lesion glycolysis at pretreatment FDG PET/CT in non–small cell lung cancer,” Radiology 264(2), 559–566 (2012). [DOI] [PubMed] [Google Scholar]

- [2].Ohri N, Duan F, Machtay M, Gorelick JJ, Snyder BS, Alavi A, Siegel BA, Johnson DW, Bradley JD, DeNittis A, et al. , “Pretreatment FDG-PET metrics in stage III non–small cell lung cancer: ACRIN 6668/RTOG 0235,” J. Natl. Cancer Inst 107(4) (2015). [DOI] [PMC free article] [PubMed] [Google Scholar]

- [3].Mena E, Sheikhbahaei S, Taghipour M, Jha AK, Vicente E, Xiao J, and Subramaniam RM, “18F-FDG PET/CT Metabolic tumor volume and intra-tumoral heterogeneity in pancreatic adenocarcinomas: Impact of dual-time-point and segmentation methods,” Clin. Nucl. Med 42(1), e16 (2017). [DOI] [PMC free article] [PubMed] [Google Scholar]

- [4].Lee JW and Lee SM, “Radiomics in oncological PET/CT: clinical applications,” Nucl. Med. Mol. Imaging 52(3), 170–189 (2018). [DOI] [PMC free article] [PubMed] [Google Scholar]

- [5].Zhao X, Li L, Lu W, and Tan S, “Tumor co-segmentation in PET/CT using multi-modality fully convolutional neural network,” Phys. Med. Biol 64(1), 015011 (2018). [DOI] [PMC free article] [PubMed] [Google Scholar]

- [6].Leung KH, Marashdeh W, Wray R, Ashrafinia S, Pomper MG, Rahmim A, and Jha AK, “A physics-guided modular deep-learning based automated framework for tumor segmentation in PET,” Phys. Med. Biol 65(24), 245032 (2020). [DOI] [PubMed] [Google Scholar]

- [7].Liu Z, Mhlanga JC, Laforest R, Derenoncourt P-R, Siegel BA, and Jha AK, “A Bayesian approach to tissue-fraction estimation for oncological PET segmentation,” Phys. Med. Biol 66(12), 124002 (2021). [DOI] [PMC free article] [PubMed] [Google Scholar]

- [8].Yousefirizi F, Jha AK, Brosch-Lenz J, Saboury B, and Rahmim A, “Toward high-throughput artificial intelligence-based segmentation in oncological PET imaging,” PET Clin. 16(4), 577–596 (2021). [DOI] [PubMed] [Google Scholar]

- [9].Barrett HH, Denny J, Wagner RF, and Myers KJ, “Objective assessment of image quality. II. Fisher information, Fourier crosstalk, and figures of merit for task performance,” JOSA A 12(5), 834–852 (1995). [DOI] [PubMed] [Google Scholar]

- [10].Barrett HH, Abbey CK, and Clarkson E, “Objective assessment of image quality. III. ROC metrics, ideal observers, and likelihood-generating functions,” JOSA A 15(6), 1520–1535 (1998). [DOI] [PubMed] [Google Scholar]

- [11].Barrett HH, Myers KJ, Hoeschen C, Kupinski MA, and Little MP, “Task-based measures of image quality and their relation to radiation dose and patient risk,” Phys. Med. Biol 60(2), R1 (2015). [DOI] [PMC free article] [PubMed] [Google Scholar]

- [12].Jha AK, Myers KJ, Obuchowski NA, Liu Z, Rahman MA, Saboury B, Rahmim A, and Siegel BA, “Objective task-based evaluation of artificial intelligence-based medical imaging methods: Framework, strategies, and role of the physician,” PET Clin. 16(4), 493–511 (2021). [DOI] [PubMed] [Google Scholar]

- [13].Yu Z, Rahman MA, Laforest R, Schindler TH, Gropler RJ, Wahl RL, Siegel BA, and Jha AK, “Need for objective task-based evaluation of deep learning-based denoising methods: A study in the context of myocardial perfusion SPECT,” Med. Phys, Conditionally accepted (2023). [DOI] [PMC free article] [PubMed] [Google Scholar]

- [14].Badal A, Cha KH, Divel SE, Graff CG, Zeng R, and Badano A, “Virtual clinical trial for task-based evaluation of a deep learning synthetic mammography algorithm,” in [Medical Imaging 2019: Physics of Medical Imaging], 10948, 164–173, SPIE (2019). [Google Scholar]

- [15].Li K, Zhou W, Li H, and Anastasio MA, “Assessing the impact of deep neural network-based image denoising on binary signal detection tasks,” IEEE Trans. Med. Imaging 40(9), 2295–2305 (2021). [DOI] [PMC free article] [PubMed] [Google Scholar]

- [16].Prabhat K, Zeng R, Farhangi MM, and Myers KJ, “Deep neural networks-based denoising models for CT imaging and their efficacy,” in [Medical Imaging 2021: Physics of Medical Imaging], 11595, 105–117, SPIE (2021). [Google Scholar]

- [17].Liu Z, Moon HS, Li Z, Laforest R, Perlmutter JS, Norris SA, and Jha AK, “A tissue-fraction estimation-based segmentation method for quantitative dopamine transporter SPECT,” Med. Phys 49(8), 5121–5137 (2022). [DOI] [PMC free article] [PubMed] [Google Scholar]

- [18].Foster B, Bagci U, Mansoor A, Xu Z, and Mollura DJ, “A review on segmentation of positron emission tomography images,” Comput. Biol. Med 50, 76–96 (2014). [DOI] [PMC free article] [PubMed] [Google Scholar]

- [19].Machtay M, Duan F, Siegel BA, Snyder BS, Gorelick JJ, Reddin JS, Munden R, Johnson DW, Wilf LH, DeNittis A, et al. , “Prediction of survival by [18F] fluorodeoxyglucose positron emission tomography in patients with locally advanced non–small-cell lung cancer undergoing definitive chemoradiation therapy: results of the ACRIN 6668/RTOG 0235 trial,” J. Clin. Oncol 31(30), 3823 (2013). [DOI] [PMC free article] [PubMed] [Google Scholar]

- [20].Kinahan P, Muzi M, Bialecki B, Herman B, and Coombs L, “Data from the ACRIN 6668 Trial NSCLC-FDG-PET,” Cancer Imaging Arch 10 (2019). [Google Scholar]

- [21].Clark K, Vendt B, Smith K, Freymann J, Kirby J, Koppel P, Moore S, Phillips S, Maffitt D, Pringle M, et al. , “The Cancer Imaging Archive (TCIA): maintaining and operating a public information repository,” J. Digit. Imaging 26, 1045–1057 (2013). [DOI] [PMC free article] [PubMed] [Google Scholar]

- [22].Blanc-Durand P, Van Der Gucht A, Schaefer N, Itti E, and Prior JO, “Automatic lesion detection and segmentation of 18F-FET PET in gliomas: a full 3D U-Net convolutional neural network study,” PLoS One 13(4), e0195798 (2018). [DOI] [PMC free article] [PubMed] [Google Scholar]

- [23].Mao X, Shen C, and Yang Y-B, “Image restoration using very deep convolutional encoder-decoder networks with symmetric skip connections,” Adv. Neural Inf. Process. Syst 29 (2016). [Google Scholar]

- [24].Kingma DP and Ba J, “Adam: A method for stochastic optimization,” arXiv preprint arXiv:1412.6980 (2014). [Google Scholar]

- [25].Sun S, Chen W, Wang L, Liu X, and Liu T-Y, “On the depth of deep neural networks: A theoretical view,” in [Proceedings of the AAAI Conference on Artificial Intelligence], 30(1) (2016). [Google Scholar]

- [26].Kupinski MA, Hoppin JW, Clarkson E, Barrett HH, and Kastis GA, “Estimation in medical imaging without a gold standard,” Acad. Radiol 9(3), 290–297 (2002). [DOI] [PMC free article] [PubMed] [Google Scholar]

- [27].Hoppin JW, Kupinski MA, Kastis GA, Clarkson E, and Barrett HH, “Objective comparison of quantitative imaging modalities without the use of a gold standard,” IEEE Trans. Med. Imaging 21(5), 441–449 (2002). [DOI] [PMC free article] [PubMed] [Google Scholar]

- [28].Jha AK, Caffo B, and Frey EC, “A no-gold-standard technique for objective assessment of quantitative nuclear-medicine imaging methods,” Phys. Med. Biol 61(7), 2780 (2016). [DOI] [PMC free article] [PubMed] [Google Scholar]

- [29].Liu Z, Li Z, Mhlanga JC, Siegel BA, and Jha AK, “No-gold-standard evaluation of quantitative imaging methods in the presence of correlated noise,” in [Medical Imaging 2022: Image Perception, Observer Performance, and Technology Assessment], 12035, SPIE (2022). [DOI] [PMC free article] [PubMed] [Google Scholar]

- [30].Jha AK, Mena E, Caffo B, Ashrafinia S, Rahmim A, Frey E, and Subramaniam RM, “Practical no-gold-standard evaluation framework for quantitative imaging methods: application to lesion segmentation in positron emission tomography,” J. Med. Imaging 4(1), 011011–011011 (2017). [DOI] [PMC free article] [PubMed] [Google Scholar]

- [31].Liu J, Liu Z, Moon HS, Mhlanga J, and Jha A, “A no-gold-standard technique for objective evaluation of quantitative nuclear-medicine imaging methods in the presence of correlated noise,” J. Nucl. Med 61(supplement 1), 523–523 (2020). [Google Scholar]

- [32].Zhu Y, Yousefirizi F, Liu Z, Klyuzhin I, Rahmim A, and Jha A, “Comparing clinical evaluation of PET segmentation methods with reference-based metrics and no-gold-standard evaluation technique,” J. Nucl. Med 62(supplement 1), 1430–1430 (2021).33608426 [Google Scholar]

- [33].Jha AK, Bradshaw TJ, Buvat I, Hatt M, Prabhat K, Liu C, Obuchowski NF, Saboury B, Slomka PJ, Sunderland JJ, et al. , “Nuclear medicine and artificial intelligence: best practices for evaluation (the RELAINCE guidelines),” J. Nucl. Med 63(9), 1288–1299 (2022). [DOI] [PMC free article] [PubMed] [Google Scholar]