Moving very large files, especially VirtualBox virtual machines or other VM formats, between a Windows host and OneDrive can be a problem, either because of limits configured on the Azure cloud side or because of other restrictions set by your company's domain administrator. So if you have to migrate from an old Windows 10 laptop to a newer, faster notebook with Windows 11 and you still want to keep and move your old large files, this short and trivial article explains how.

The topic is easy and most novice sysadmins have probably already bumped into something like this, but I still found it useful to mention Git for Windows, as it is really handy, so I wrote this small article.

In my case the huge files were experimental virtual machine images of 40, 80 or more gigabytes, which I needed to somehow migrate to the freshly installed Windows laptop through OneDrive. Of course, the most straightforward thing I tried was to simply add the file to the OneDrive storage (via the OneDrive tool's setup interface). However, this failed, due to OneDrive file format security limitations, an antivirus solution configured to filter out large file copies, or even a prohibition on putting any kind of virtual machine images or ISOs straight into the cloud.

With files this big comes the question:

How do you copy the virtual machines from your old laptop to the cloud when you cannot use an external SSD or a USB flash drive, because the domain policy on your Windows allows only reading from externally connected drives, not writing to them?

Here are a few sample approaches, either from the command line (useful if you have to repeat the process, or script it and deploy it to multiple hosts) or, for a single host, with an archiver tool:

1. Using the split command from the Git for Windows (Bash) MINGW64 shell

Download Git for Windows from https://git-scm.com/download and install it; you will get the MINGW64 Bash for Windows executable.

Run it either by invoking the bash command from the command line, or by opening the Windows Run prompt (Windows button + R) and typing the full path to the executable:

C:\Program Files\Git\git-bash.exe

Use the bundled split program to cut the file into pieces, for example of 800 megabytes each:

# split MyVeryLargeFileVM.vdi -b 800m

To split the VirtualBox .vdi file into pieces of, let's say, 5 gigabytes (note the uppercase G, which is the unit suffix GNU split documents):

# split MyVeryLargeFile.vdi -b 5G

The output pieces are named as with a normal UNIX / GNU split command, so the 5 GB pieces will be called:

xaa

xab

xac

xad
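Before splitting, it can help to estimate how many pieces you will end up uploading. A minimal sketch of that arithmetic, assuming both sizes are given in the same unit (the helper name pieces_needed is just an illustration, not part of split):

```shell
#!/usr/bin/env bash
# Estimate how many pieces split will produce for a given file size.
# Both arguments must use the same unit (here: gigabytes).
# pieces_needed is a hypothetical helper name.
pieces_needed() {
    local total=$1 chunk=$2
    # Ceiling division: a partial last piece still counts as one piece.
    echo $(( (total + chunk - 1) / chunk ))
}

pieces_needed 80 5    # 80 GB image in 5 GB pieces -> prints 16
pieces_needed 42 5    # the last piece holds only 2 GB -> prints 9
```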

If you want more meaningful names for the split files, you can set a prefix for the generated pieces and make the suffixes 5 digits long:

# split MyVeryLargeFile.vdi MyVeryLargeFileVM-parts_ -b 5G -d -a 5

- the -d flag uses numerical suffixes (instead of the default aa, ab, ac, etc.),

- and the option -a 5 makes the suffixes 5 digits long, so the pieces are named MyVeryLargeFileVM-parts_00000, MyVeryLargeFileVM-parts_00001 and so on.
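Once the pieces have been copied through the cloud, they still have to be glued back together on the new laptop. A minimal sketch of the whole round trip, assuming sha256sum is available in the same Bash shell (it is part of GNU coreutils); the function names and the "-parts_" naming convention are illustrative only:

```shell
#!/usr/bin/env bash
# Split a file into numbered pieces, then rejoin and verify them.
# split_file / join_file are hypothetical helper names.

split_file() {
    local src=$1 size=$2
    # -d: numeric suffixes, -a 5: five-digit suffixes (00000, 00001, ...)
    split -b "$size" -d -a 5 "$src" "${src}-parts_"
    # Record a checksum so the copy can be verified after reassembly.
    sha256sum "$src" > "${src}.sha256"
}

join_file() {
    local dst=$1
    # The shell expands the glob in sorted order, which matches the
    # zero-padded suffixes, so the pieces are concatenated in sequence.
    cat "${dst}-parts_"* > "$dst"
    # Fails (non-zero exit) if the rebuilt file differs from the original.
    sha256sum -c "${dst}.sha256"
}

# On the old laptop:  split_file MyVeryLargeFile.vdi 5G
# On the new laptop:  join_file MyVeryLargeFile.vdi
```

Run join_file from the directory that holds the pieces and the .sha256 file, using the same file name that was passed to split_file.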

2. Split large files by archiving them with the WinRAR (shareware) tool

If you already have WinRAR installed and you don't want to bother with too much typing on the command line, you can use good old WinRAR as a file splitter/joiner as well.

To split a file into smaller files, select "Store" as the compression method and enter the desired value (in bytes) into the "Split to volumes" box.

This way you get split files named filename.part1.rar, filename.part2.rar, etc.

3. Split files with 7-Zip (FreeWare)

Assuming you have 7-Zip installed on the PC, you can archive the big file into smaller pieces from the 7-Zip interface menus.

Or cut the single file into multiple volumes directly from Windows Explorer, by selecting the file and using the drop-down (context) menus.
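7-Zip also ships a command-line program, which fits the scripted approach from section 1. A minimal sketch, assuming the 7z executable is reachable on your PATH (on Windows it usually lives in the 7-Zip install folder and may need to be added); the function name is illustrative:

```shell
#!/usr/bin/env bash
# Sketch: split a file into 7-Zip volumes without compressing it.
# Assumes 7z is on PATH; split_with_7z is a hypothetical helper name.
split_with_7z() {
    local src=$1 volume_size=$2
    # a       add files to an archive
    # -mx=0   compression level 0, i.e. store only
    # -v5g    volume size, with b / k / m / g suffixes
    7z a -mx=0 "-v${volume_size}" "${src}.7z" "$src"
}

# Usage:
#   split_with_7z MyVeryLargeFile.vdi 5g
#   produces MyVeryLargeFile.vdi.7z.001, MyVeryLargeFile.vdi.7z.002, ...
# On the new machine, extract by pointing 7z at the first volume:
#   7z x MyVeryLargeFile.vdi.7z.001
```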

Summing up: what did we learn?

What we learned is how to cut large files into multiple consecutive pieces for easy copying across the network, using split from Git for Windows or an archiver, followed by a manual copy and paste of the pieces to OneDrive / Dropbox / pCloud or Google Drive.

There are plenty of other approaches. Besides GnuWin (GNU tools for Windows) or Cygwin, there are GUI tools such as GSplit and many other archiver tools available for Windows. Another option is to use one of the PowerShell or Batch scripts written to split both binary and text files. But I'll stop here, as I believe this is pretty much enough for most basic needs.

Report haproxy node switch script useful for Zabbix or other monitoring

Tuesday, June 9th, 2020

For those who administer a corosync-clustered haproxy and need to build monitoring for the case where the main configured haproxy node in the cluster changes, I've developed a small script to be integrated with zabbix-agent, which reports to a central Zabbix server via a Zabbix proxy.

The script is very simple. It assumes the DC1 variable is the default haproxy node and that DC2 and DC3 are 2 backup nodes. The script uses crm_mon, which is not installed by default on every server, so if you'll be using it you'll have to install it first; the script can, however, easily be adapted to use the pcs command instead.

Below is the bash shell script:
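The original script is not reproduced in this copy of the post, so what follows is only a rough sketch of the idea and not the author's code. It assumes the active node is the one named on the "Current DC:" line of `crm_mon -1`; if your haproxy follows a cluster resource such as a virtual IP instead, match the line of that resource. The node names are placeholders, and the function names are made up for illustration.

```shell
#!/usr/bin/env bash
# Sketch only, NOT the original script. Node names are placeholders.
DC1="haproxy-node1"   # default active node
DC2="haproxy-node2"   # first backup node
DC3="haproxy-node3"   # second backup node

# Read crm_mon text on stdin and print the node named on the
# "Current DC:" line (works with or without a leading " * ").
current_dc() {
    sed -n 's/.*Current DC:[[:space:]]*\([^[:space:]]*\).*/\1/p' | head -n 1
}

# Map a node name to a number that a Zabbix item can store
# and a trigger can compare against.
node_to_code() {
    case "$1" in
        "$DC1") echo 1 ;;
        "$DC2") echo 2 ;;
        "$DC3") echo 3 ;;
        *)      echo 0 ;;   # unknown node, or no output from crm_mon
    esac
}

# Intended use on a cluster node (needs crm_mon and a sudoers rule):
#   node_to_code "$(sudo /usr/sbin/crm_mon -1 | current_dc)"
```

Returning a number rather than a host name keeps the Zabbix item numeric, so a trigger can simply fire when the value is not 1.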

To hook it into Zabbix monitoring, it can be configured via a UserParameter entry that calls the script.

The way I configured it in Zabbix is as so:

1. Create the userpameter_active_node.conf

The script below is for a 3-node haproxy cluster

Once pasted, press CTRL + D to save the file

The slightly improved version of the script for 2 nodes is like so:

The haproxy_active_DC_zabbix.sh script, with a few more comments as explanation, is available here
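For step 1, the UserParameter file itself is a single line that maps the active.dc key to the script. A minimal sketch of writing it, where the helper name and both example paths (the agent include directory and the script location) are assumptions to adjust to your own layout:

```shell
#!/usr/bin/env bash
# Write a UserParameter line that maps the active.dc key to the check script.
# write_userparameter is a hypothetical helper; both paths are assumptions.
write_userparameter() {
    local conf_file=$1 script_path=$2
    # Format expected by zabbix-agent: UserParameter=<key>,<command>
    printf 'UserParameter=active.dc,%s\n' "$script_path" > "$conf_file"
}

# Example (as root on the monitored node):
#   write_userparameter /etc/zabbix/zabbix_agentd.d/userpameter_active_node.conf \
#       /usr/local/bin/haproxy_active_DC_zabbix.sh
#   systemctl restart zabbix-agent
```

The agent has to be restarted before it picks up a new UserParameter file.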

2. Configure access for /usr/sbin/crm_mon for zabbix user in sudoers
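The exact sudoers rule is not shown in this copy of the post. As a minimal sketch, assuming the agent runs as the zabbix user and only needs to run crm_mon as root without a password, a rule could be generated like this (the helper name and the drop-in file name are illustrative):

```shell
#!/usr/bin/env bash
# Emit a sudoers rule letting one user run one command as root
# without a password. sudoers_rule is a hypothetical helper name.
sudoers_rule() {
    local user=$1 command_path=$2
    printf '%s ALL=(root) NOPASSWD: %s\n' "$user" "$command_path"
}

sudoers_rule zabbix /usr/sbin/crm_mon
# prints: zabbix ALL=(root) NOPASSWD: /usr/sbin/crm_mon

# Install it as a drop-in and let visudo check the syntax:
#   sudoers_rule zabbix /usr/sbin/crm_mon | sudo tee /etc/sudoers.d/zabbix_crm_mon
#   sudo chmod 0440 /etc/sudoers.d/zabbix_crm_mon
#   sudo visudo -cf /etc/sudoers.d/zabbix_crm_mon
```

Limiting the rule to the single crm_mon binary keeps the zabbix user from gaining any other root access.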

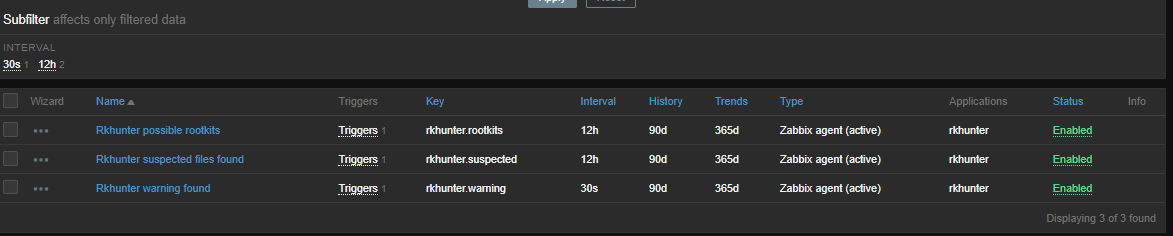

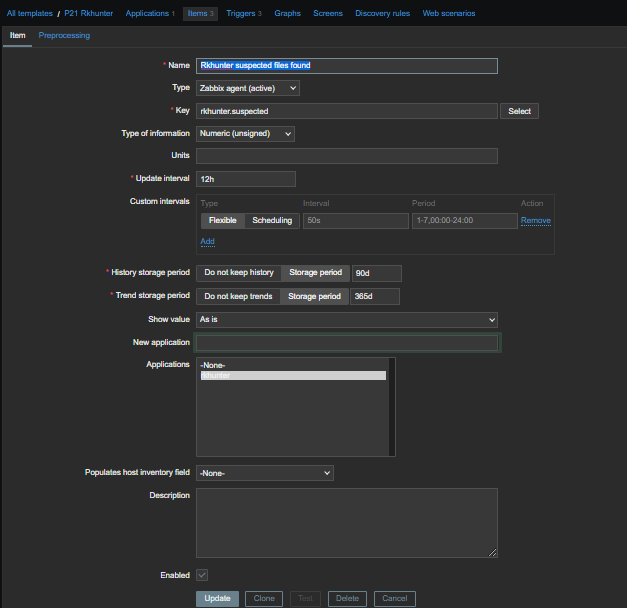

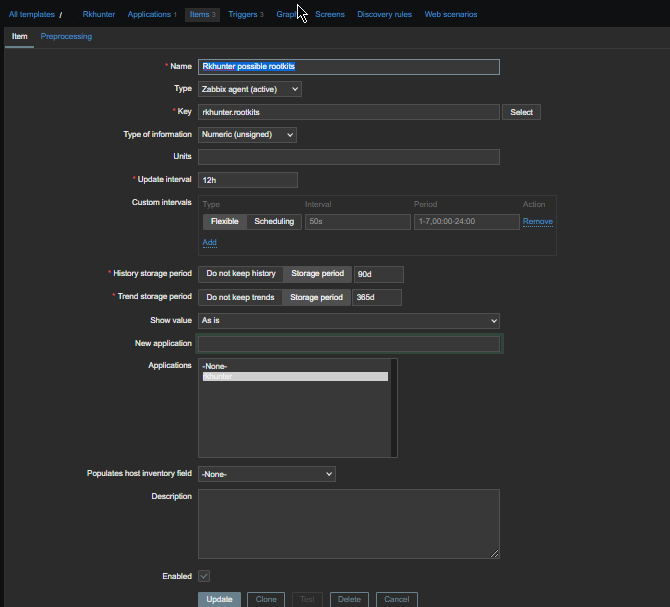

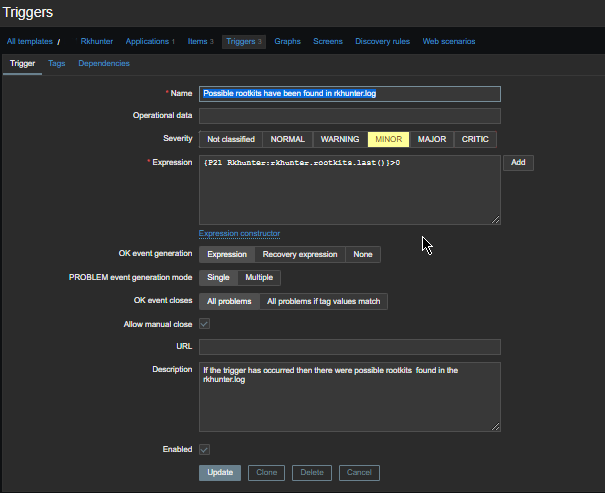

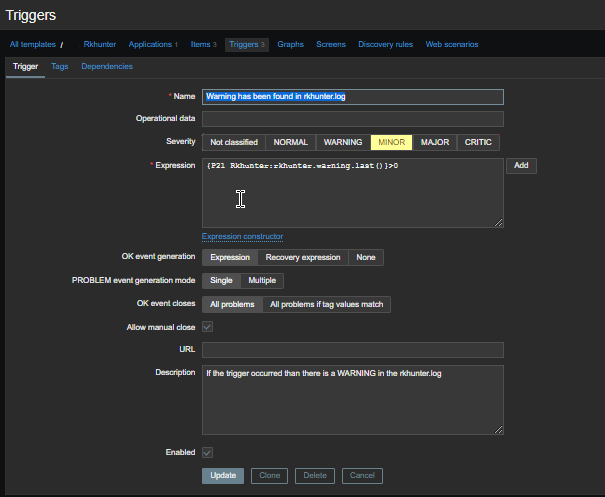

3. Configure an Item and a Trigger in Zabbix for the active.dc key
