Review of Data Management Maturity Models

- 1. Review of Data Management Maturity Models Alan McSweeney

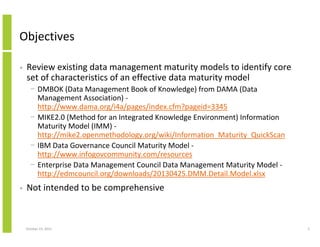

- 2. Objectives • Review existing data management maturity models to identify a core set of characteristics of an effective data maturity model − DMBOK (Data Management Body of Knowledge) from DAMA (Data Management Association) http://www.dama.org/i4a/pages/index.cfm?pageid=3345 − MIKE2.0 (Method for an Integrated Knowledge Environment) Information Maturity Model (IMM) http://mike2.openmethodology.org/wiki/Information_Maturity_QuickScan − IBM Data Governance Council Maturity Model http://www.infogovcommunity.com/resources − Enterprise Data Management Council Data Management Maturity Model http://edmcouncil.org/downloads/20130425.DMM.Detail.Model.xlsx • Not intended to be comprehensive October 23, 2013 2

- 3. Maturity Models (Attempt To) Measure Maturity Of Processes And Their Implementation and Operation • Processes breathe life into the organisation • Effective processes enable the organisation to operate efficiently • Good processes enable efficiency and scalability • Processes must be effectively and pervasively implemented • Processes should be optimising, always seeking improvement where possible

- 4. Basis for Maturity Models • Greater process maturity should mean greater business benefit(s) − Reduced cost − Greater efficiency − Reduced risk

- 5. Proliferation of Maturity Models • Growth in informal and ad hoc maturity models • Many lack rigour and detail • Many lack detailed validation to justify their process structure • Not evidence based • Many lack the detailed assessment structure needed to validate maturity levels • Concept of a maturity model is becoming devalued through overuse and wanton borrowing of concepts from ISO/IEC 15504 without putting in the hard work

- 6. Issues With Maturity Models • How do you know you are at a given level? • How do you objectively quantify the maturity level scoring? • What are the business benefits of achieving a given maturity level? • What are the costs of achieving a given maturity level? • What work is needed to increase maturity? • Is the increment between maturity levels the same? • What is the cost of operationalising processes? • How do you measure process operation to ensure maturity is being maintained? • Are the costs justified? • What is the real value of process maturity?

- 7. ISO/IEC 15504 – Original Maturity Model - Structure • Part 1 Concepts and Introductory Guide • Part 2 A Reference Model for Processes and Process Capability • Part 3 Performing an Assessment • Part 4 Guide to Performing Assessments • Part 5 An Assessment Model and Indicator Guidance • Part 6 Guide to Qualification of Assessors • Part 7 Guide for Use in Process Improvement • Part 8 Guide for Determining Supplier Process Capability • Part 9 Vocabulary

- 8. ISO/IEC 15504 – Original Maturity Model • Originally based on Software Process Improvement and Capability Determination (SPICE) • Detailed and rigorously defined framework for software process improvement • Validated • Defined and detailed assessment framework

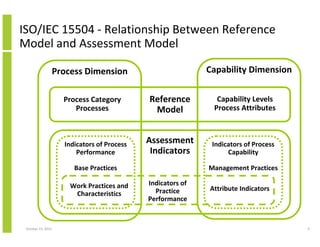

- 9. ISO/IEC 15504 - Relationship Between Reference Model and Assessment Model • Reference Model − Process Dimension: Process Category, Processes − Capability Dimension: Capability Levels, Process Attributes • Assessment Indicators − Indicators of Process Performance: Base Practices, Work Practices and Characteristics − Indicators of Process Capability: Management Practices, Indicators of Practice Performance, Attribute Indicators

- 10. ISO/IEC 15504 - Relationship Between Reference Model and Assessment Model • Parallel process reference model and assessment model • Correspondence between reference model and assessment model for process categories, processes, process purposes, process capability levels and process attributes

- 11. ISO/IEC 15504 - Indicator and Process Attribute Relationships • Process attribute ratings are based on evidence of process performance and evidence of process capability • Evidence of process performance is provided by indicators of process performance − Consist of base practices − Assessed by work product characteristics • Evidence of process capability is provided by indicators of process capability − Consist of management practices − Assessed by practice performance characteristics and by resources and infrastructure characteristics

- 12. ISO/IEC 15504 - Indicator and Process Attribute Relationships • Two types of indicator − Indicators of process performance • Relate to base practices defined for the process dimension − Indicators of process capability • Relate to management practices defined for the capability dimension • Indicators are attributes whose existence confirms that practices are being performed • Collect evidence of indicators during assessments

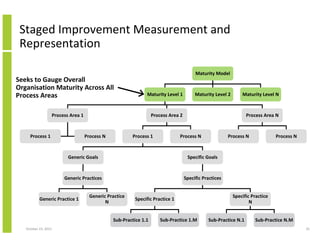

- 13. Structure of Maturity Model • Maturity Model contains Maturity Level 1 to Maturity Level N • Each maturity level contains Process Area 1 to Process Area N • Each process area contains Process 1 to Process N • Each process has Generic Goals and Specific Goals − Generic Goals are met through Generic Practice 1 to Generic Practice N − Specific Goals are met through Specific Practice 1 to Specific Practice N • Each practice breaks down into sub-practices, Sub-Practice 1.1 to Sub-Practice N.M

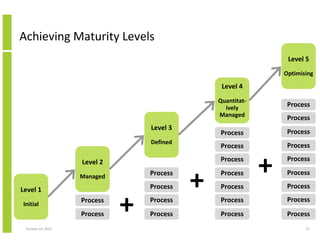

- 14. Structure of Maturity Model • Set of maturity levels on an ascending scale − 5 - Optimising process − 4 - Predictable process − 3 - Established process − 2 - Managed process − 1 - Initial process • Each maturity level has a number of process areas/categories/groupings − Maturity is about embedding processes within an organisation • Each process area has a number of processes • Each process has generic and specific goals and practices − Specific goals describe the unique features that must be present to satisfy the process area − Generic goals apply to multiple process areas − Generic practices are applicable to multiple processes and represent the activities needed to manage a process and improve its capability to perform − Specific practices are activities that contribute to the achievement of the specific goals of a process area

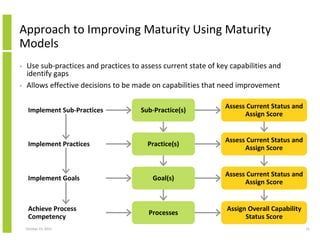

- 15. Approach to Improving Maturity Using Maturity Models • Use sub-practices and practices to assess current state of key capabilities and identify gaps • Allows effective decisions to be made on capabilities that need improvement • Sub-Practice(s): Assess Current Status and Assign Score, then Implement Sub-Practices • Practice(s): Assess Current Status and Assign Score, then Implement Practices • Goal(s): Assess Current Status and Assign Score, then Implement Goals • Processes: Assign Overall Capability Status Score, then Achieve Process Competency
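
The bottom-up scoring on this slide could be sketched as follows. This is only an illustration: the 0 to 5 scale, the use of a simple average at each level, and all class, process and practice names are assumptions, not something any of the reviewed models prescribes.

```python
from dataclasses import dataclass, field
from statistics import mean
from typing import List, Optional


@dataclass
class Practice:
    name: str
    # Scores assigned to each sub-practice during assessment (0-5 scale assumed).
    sub_practice_scores: List[float] = field(default_factory=list)

    def score(self) -> float:
        # Assumption: a practice scores the plain average of its sub-practices.
        return mean(self.sub_practice_scores) if self.sub_practice_scores else 0.0


@dataclass
class Goal:
    name: str
    practices: List[Practice] = field(default_factory=list)

    def score(self) -> float:
        return mean(p.score() for p in self.practices) if self.practices else 0.0


@dataclass
class Process:
    name: str
    goals: List[Goal] = field(default_factory=list)

    def score(self) -> float:
        # Overall capability status score for the process.
        return mean(g.score() for g in self.goals) if self.goals else 0.0

    def weakest_goal(self) -> Optional[Goal]:
        # The lowest-scoring goal is the first candidate for improvement.
        return min(self.goals, key=lambda g: g.score(), default=None)


# Hypothetical assessment data, for illustration only.
process = Process("Example process", [
    Goal("Goal A", [Practice("Practice A1", [2.0, 3.0]),
                    Practice("Practice A2", [1.0, 1.0])]),
    Goal("Goal B", [Practice("Practice B1", [4.0, 4.0])]),
])
print(process.score())              # 2.875
print(process.weakest_goal().name)  # Goal A
```

A weighted average or a "weakest link" minimum would be equally defensible roll-up rules; the choice changes the score, which is one reason objective quantification is hard.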

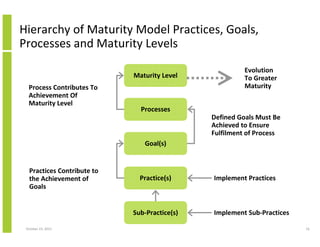

- 16. Hierarchy of Maturity Model Practices, Goals, Processes and Maturity Levels • Sub-Practice(s): Implement Sub-Practices • Practice(s): Implement Practices − Practices Contribute to the Achievement of Goals • Goal(s): Defined Goals Must Be Achieved to Ensure Fulfilment of Process • Processes: Process Contributes To Achievement Of Maturity Level • Maturity Level: Evolution To Greater Maturity

- 17. Achieving a Maturity Level Improvement • Diagram: three successive maturity levels, each reached through the chain Sub-Practice → Practice → Goal → Process → Maturity Level

- 18. Maturity Levels • Maturity levels define a path for progressively improving the processes associated with what is being measured

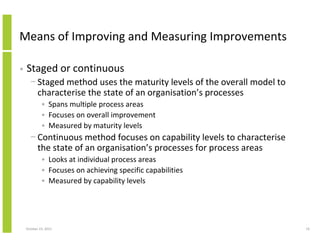

- 19. Means of Improving and Measuring Improvements • Staged or continuous − Staged method uses the maturity levels of the overall model to characterise the state of an organisation’s processes • Spans multiple process areas • Focuses on overall improvement • Measured by maturity levels − Continuous method focuses on capability levels to characterise the state of an organisation’s processes for process areas • Looks at individual process areas • Focuses on achieving specific capabilities • Measured by capability levels

- 20. Staged and Continuous Improvements

  | Level | Continuous Improvement Capability Levels | Staged Improvement Maturity Levels |
  |---|---|---|
  | Level 0 | Incomplete | |
  | Level 1 | Performed | Initial |
  | Level 2 | Managed | Managed |
  | Level 3 | Defined | Defined |
  | Level 4 | | Quantitatively Managed |
  | Level 5 | | Optimising |

- 21. Continuous Improvement Capability Levels

  | Level | Capability Levels | Key Characteristics |
  |---|---|---|
  | Level 0 | Incomplete | Not performed or only partially performed; Specific goals of the process area not being satisfied; Process not embedded in the organisation |
  | Level 1 | Performed | Process achieves the required work; Specific goals of the process area are satisfied |
  | Level 2 | Managed | Planned and implemented according to policy; Operation is monitored, controlled and reviewed; Evaluated for adherence to process documentation; Those performing the process have required training, skills, resources and responsibilities to generate controlled deliverables |
  | Level 3 | Defined | Process consistency maintained through specific process descriptions and procedures being customised from set of common standard processes using customisation standards to suit given requirements; Defined and documented in detail – roles, responsibilities, measures, inputs, outputs, entry and exit criteria; Implementation and operational feedback compiled in process repository; Proactive process measurement and management; Process interrelationships defined |

- 22. Achieving Capability Levels For Process Areas • Level 0 Incomplete • Level 1 Performed − Processes Are Performed • Level 2 Managed − Policies Exist For Processes − Processes Are Planned And Monitored • Level 3 Defined − Common Standards Exist That Are Customised Ensuring Consistency
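
A minimal sketch of how a capability level might be derived for a single process area under the continuous method. The three yes/no checks are a simplification assumed here for illustration (a real assessment weighs evidence against many indicators), and the function and parameter names are hypothetical.

```python
from enum import IntEnum


class CapabilityLevel(IntEnum):
    INCOMPLETE = 0
    PERFORMED = 1
    MANAGED = 2
    DEFINED = 3


def capability_level(performed: bool,
                     planned_and_monitored: bool,
                     standardised: bool) -> CapabilityLevel:
    """Return the highest level whose criteria, and all lower ones, are met."""
    if not performed:
        return CapabilityLevel.INCOMPLETE
    if not planned_and_monitored:
        return CapabilityLevel.PERFORMED
    if not standardised:
        return CapabilityLevel.MANAGED
    return CapabilityLevel.DEFINED


# A process area that is performed and governed by policy, but not yet
# built on customised common standards.
print(capability_level(True, True, False).name)  # MANAGED
```

The checks are cumulative: a process area with common standards that is not actually performed still rates as Incomplete.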

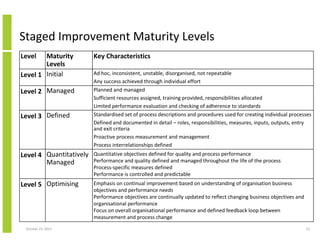

- 23. Staged Improvement Maturity Levels

  | Level | Maturity Levels | Key Characteristics |
  |---|---|---|
  | Level 1 | Initial | Ad hoc, inconsistent, unstable, disorganised, not repeatable; Any success achieved through individual effort |
  | Level 2 | Managed | Planned and managed; Sufficient resources assigned, training provided, responsibilities allocated; Limited performance evaluation and checking of adherence to standards |
  | Level 3 | Defined | Standardised set of process descriptions and procedures used for creating individual processes; Defined and documented in detail – roles, responsibilities, measures, inputs, outputs, entry and exit criteria; Proactive process measurement and management; Process interrelationships defined |
  | Level 4 | Quantitatively Managed | Quantitative objectives defined for quality and process performance; Performance and quality defined and managed throughout the life of the process; Process-specific measures defined; Performance is controlled and predictable |
  | Level 5 | Optimising | Emphasis on continual improvement based on understanding of organisation business objectives and performance needs; Performance objectives are continually updated to reflect changing business objectives and organisational performance; Focus on overall organisational performance and defined feedback loop between measurement and process change |

- 24. Achieving Maturity Levels • Level 1 Initial • Level 2 Managed − Disciplined Approach To Processes • Level 3 Defined − Common Standards Exist That Are Customised Ensuring Consistency • Level 4 Quantitatively Managed − Standard Approach To Measurement − Processes Are Controlled and Predictable • Level 5 Optimising − Continual Self-Improvement − Process Link to Overall Organisation Objectives

- 25. Staged Improvement Measurement and Representation • Maturity Model Seeks to Gauge Overall Organisation Maturity Across All Process Areas • Diagram: Maturity Model → Maturity Level 1 to N → Process Area 1 to N → Process 1 to N → Generic Goals and Specific Goals → Generic Practice 1 to N and Specific Practice 1 to N → Sub-Practice 1.1 to N.M

- 26. Maturity Model • Maturity Level 1 • Maturity Level 2: Process 2.1, Process 2.2, Process 2.3, Process 2.4 • Maturity Level 3: Process 3.1, Process 3.2, Process 3.3 • Maturity Level 4: Process 4.1, Process 4.2, Process 4.3, Process 4.4 • Maturity Level 5: Process 5.1, Process 5.2 • To be at Maturity Level N means that all processes in previous maturity levels have been implemented
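
The staged rule on this slide can be sketched as a small function. It assumes that reaching level N requires every process assigned to levels up to and including N, and that Level 1 has no required processes; the function name is hypothetical and the process names are the slide's own placeholders.

```python
from typing import Dict, Set


def staged_maturity_level(processes_by_level: Dict[int, Set[str]],
                          implemented: Set[str]) -> int:
    """Highest level for which all processes at that level and below are implemented."""
    level = 1  # Level 1 (Initial) requires no processes.
    for candidate in sorted(processes_by_level):
        if processes_by_level[candidate] <= implemented:  # subset test
            level = candidate
        else:
            break  # A gap at this level blocks every higher level.
    return level


MODEL = {
    2: {"Process 2.1", "Process 2.2", "Process 2.3", "Process 2.4"},
    3: {"Process 3.1", "Process 3.2", "Process 3.3"},
    4: {"Process 4.1", "Process 4.2", "Process 4.3", "Process 4.4"},
    5: {"Process 5.1", "Process 5.2"},
}

# All Level 2 processes, two of three at Level 3, and one at Level 4.
implemented = MODEL[2] | {"Process 3.1", "Process 3.2", "Process 4.1"}
print(staged_maturity_level(MODEL, implemented))  # 2
```

Work already done at Levels 3 and 4 earns no credit until Level 3 is complete, which is the main practical difference from the continuous method.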

- 27. Achieving Maturity Levels • Diagram: each level adds further processes to those of the level below − Level 1 Initial − Level 2 Managed − Level 3 Defined − Level 4 Quantitatively Managed − Level 5 Optimising

- 28. Achieving Maturity Levels • What Are The Real Benefits of Achieving a Higher Maturity Level? • What Is The Real Cost of Achieving a Higher Maturity Level? • What Is The Real Cost of Maintaining The Higher Maturity Level? • Diagram: each level adds further processes to those of the level below − Level 1 Initial − Level 2 Managed − Level 3 Defined − Level 4 Quantitatively Managed − Level 5 Optimising

- 29. Continuous Improvement Measurement and Representation • Seeks to Gauge The Condition Of One Or More Individual Process Areas • Diagram: Maturity Model → Maturity Level 1 to N → Process Area 1 to N → Process 1 to N → Generic Goals and Specific Goals → Generic Practice 1 to N and Specific Practice 1 to N

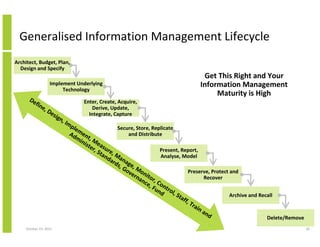

- 30. Generalised Information Management Lifecycle • Get This Right and Your Information Management Maturity is High • Lifecycle stages − Architect, Budget, Plan, Design and Specify − Implement Underlying Technology − Enter, Create, Acquire, Derive, Update, Integrate, Capture − Secure, Store, Replicate and Distribute − Present, Report, Analyse, Model − Preserve, Protect and Recover − Archive and Recall − Delete/Remove • Spanning all stages: Define, Design, Implement, Measure, Manage, Monitor, Control, Staff, Train and Administer, Standards, Governance, Fund

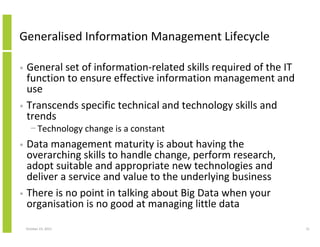

- 31. Generalised Information Management Lifecycle • General set of information-related skills required of the IT function to ensure effective information management and use • Transcends specific technical and technology skills and trends − Technology change is a constant • Data management maturity is about having the overarching skills to handle change, perform research, adopt suitable and appropriate new technologies and deliver a service and value to the underlying business • There is no point in talking about Big Data when your organisation is no good at managing little data

- 32. Generalised Information Management Lifecycle • What Processes Are Needed To Implement Effectively the Stages in the Information Lifecycle? • Lifecycle stages − Architect, Budget, Plan, Design and Specify − Implement Underlying Technology − Enter, Create, Acquire, Derive, Update, Integrate, Capture − Secure, Store, Replicate and Distribute − Present, Report, Analyse, Model − Preserve, Protect and Recover − Archive and Recall − Delete/Remove • Spanning all stages: Define, Design, Implement, Measure, Manage, Monitor, Control, Staff, Train and Administer, Standards, Governance, Fund

- 33. Dimensions of Information Management Lifecycle • Information Type Dimension − Operational Data − Master and Reference Data − Analytic Data − Unstructured Data • Lifecycle Dimension − Architect, Budget, Plan, Design and Specify − Implement Underlying Technology − Enter, Create, Acquire, Derive, Update, Integrate, Capture − Secure, Store, Replicate and Distribute − Present, Report, Analyse, Model − Preserve, Protect and Recover − Archive and Recall − Delete/Remove • Spanning both dimensions: Define, Design, Implement, Measure, Manage, Monitor, Control, Staff, Train and Administer, Standards, Governance, Fund

- 34. Dimensions of Information Management Lifecycle • Information lifecycle management needs to span different types of data that are used and managed differently and have different requirements − Operational Data – associated with operational/real-time applications − Master and Reference Data – maintaining system of record or reference for enterprise master data used commonly across the organisation − Analytic Data – data warehouse/business intelligence/analysis-oriented applications − Unstructured Data – documents and similar information

- 35. Linking Generalised Information Management Lifecycle to Assessment of Information Maturity • How well do you implement information management? • Where are the gaps and weaknesses? • Where do you need to improve? • Where are your structures and policies sufficient for your needs?
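
One way to turn these questions into something checkable is to score every combination of lifecycle stage and information type and list the cells that fall short. The sketch below assumes a 0 to 5 score per cell and a target of 3; both, along with the function name and the sample scores, are illustrative assumptions. The stage and type names come from the lifecycle slides.

```python
from itertools import product
from typing import Dict, List, Tuple

LIFECYCLE_STAGES = [
    "Architect, Budget, Plan, Design and Specify",
    "Implement Underlying Technology",
    "Enter, Create, Acquire, Derive, Update, Integrate, Capture",
    "Secure, Store, Replicate and Distribute",
    "Present, Report, Analyse, Model",
    "Preserve, Protect and Recover",
    "Archive and Recall",
    "Delete/Remove",
]

INFORMATION_TYPES = [
    "Operational Data",
    "Master and Reference Data",
    "Analytic Data",
    "Unstructured Data",
]

Cell = Tuple[str, str]


def find_gaps(scores: Dict[Cell, int], threshold: int = 3) -> List[Cell]:
    """Return (stage, type) cells scoring below threshold; unassessed cells count as 0."""
    return [cell for cell in product(LIFECYCLE_STAGES, INFORMATION_TYPES)
            if scores.get(cell, 0) < threshold]


# Hypothetical assessment: everything adequate except archiving of documents.
scores = {cell: 3 for cell in product(LIFECYCLE_STAGES, INFORMATION_TYPES)}
scores[("Archive and Recall", "Unstructured Data")] = 1

gaps = find_gaps(scores)
print(gaps)  # [('Archive and Recall', 'Unstructured Data')]
```

Treating unassessed cells as gaps is deliberate: an empty assessment reports all 32 cells, so nothing is assumed to be fine by default.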

- 36. Dimensions of Data Maturity Models • MIKE2.0 Information Maturity Model (IMM) − People/Organisation − Policy − Technology − Compliance − Measurement − Process/Practice • IBM Data Governance Council Maturity Model − Organisational Structures & Awareness − Stewardship − Policy − Value Creation − Data Risk Management & Compliance − Information Security & Privacy − Data Architecture − Data Quality Management − Classification & Metadata − Information Lifecycle Management − Audit Information, Logging & Reporting • DAMA DMBOK − Data Governance − Data Architecture Management − Data Development − Data Operations Management − Data Security Management − Reference and Master Data Management − Data Warehousing and Business Intelligence Management − Document and Content Management − Metadata Management − Data Quality Management • Enterprise Data Management Council Data Management Maturity Model − Data Management Goals − Corporate Culture − Governance Model − Data Management Funding − Data Requirements Lifecycle − Standards and Procedures − Data Sourcing − Architectural Framework − Platform and Integration − Data Quality Framework − Data Quality Assurance

- 37. Data Maturity Models • All very different • All contain gaps – none is complete • None links to an information management lifecycle
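
As a rough illustration of how different the four models are, the sketch below counts the dimension names they share. The dimension names are those listed for each model in this deck; exact name matching is a crude assumption (similarly named dimensions such as "Data Governance" and "Governance Model" are treated as different), and the function name is hypothetical.

```python
from collections import defaultdict
from typing import Dict, List, Set

MODEL_DIMENSIONS: Dict[str, List[str]] = {
    "MIKE2.0 IMM": [
        "People/Organisation", "Policy", "Technology", "Compliance",
        "Measurement", "Process/Practice",
    ],
    "IBM Data Governance Council": [
        "Organisational Structures & Awareness", "Stewardship", "Policy",
        "Value Creation", "Data Risk Management & Compliance",
        "Information Security & Privacy", "Data Architecture",
        "Data Quality Management", "Classification & Metadata",
        "Information Lifecycle Management",
        "Audit Information, Logging & Reporting",
    ],
    "DAMA DMBOK": [
        "Data Governance", "Data Architecture Management", "Data Development",
        "Data Operations Management", "Data Security Management",
        "Reference and Master Data Management",
        "Data Warehousing and Business Intelligence Management",
        "Document and Content Management", "Metadata Management",
        "Data Quality Management",
    ],
    "EDM Council DMM": [
        "Data Management Goals", "Corporate Culture", "Governance Model",
        "Data Management Funding", "Data Requirements Lifecycle",
        "Standards and Procedures", "Data Sourcing", "Architectural Framework",
        "Platform and Integration", "Data Quality Framework",
        "Data Quality Assurance",
    ],
}


def shared_dimensions(models: Dict[str, List[str]]) -> Dict[str, Set[str]]:
    """Map each dimension name used by more than one model to those models."""
    owners: Dict[str, Set[str]] = defaultdict(set)
    for model, dimensions in models.items():
        for dimension in dimensions:
            owners[dimension].add(model)
    return {name: used_by for name, used_by in owners.items() if len(used_by) > 1}


shared = shared_dimensions(MODEL_DIMENSIONS)
print(sorted(shared))  # ['Data Quality Management', 'Policy']
```

Only two of the 36 distinct dimension names appear in more than one model, and none appears in all four.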

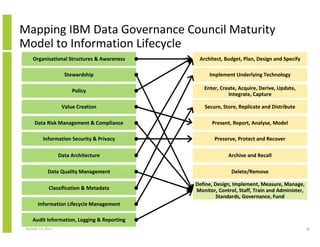

- 38. Mapping IBM Data Governance Council Maturity Model to Information Lifecycle • Model categories − Organisational Structures & Awareness − Stewardship − Policy − Value Creation − Data Risk Management & Compliance − Information Security & Privacy − Data Architecture − Data Quality Management − Classification & Metadata − Information Lifecycle Management − Audit Information, Logging & Reporting • Information lifecycle stages − Architect, Budget, Plan, Design and Specify − Implement Underlying Technology − Enter, Create, Acquire, Derive, Update, Integrate, Capture − Secure, Store, Replicate and Distribute − Present, Report, Analyse, Model − Preserve, Protect and Recover − Archive and Recall − Delete/Remove − Define, Design, Implement, Measure, Manage, Monitor, Control, Staff, Train and Administer, Standards, Governance, Fund

- 39. IBM Data Governance Council Maturity Model– Capability Areas Organisational Stewardship Structures & Awareness Process Maturity Policy Organisational Process Awareness Value Creation Data Risk Information Management & Security & Compliance Privacy Data Architecture Assets Business Process Maturity Data Integration Accountability Roles & & Responsibility Structures Roles & Metrics Responsibilities Resource Commitment Measurement Standards & Disciplines Quality Communication Value Creation Processes Metrics & Reporting Reporting Responsibility Regulations, standards, and policies Accountability Data asset and risk classification Risk Management Management buy-in Framework Incident Ownership & Response responsibility Certification Training and accountability Policies & Standards Tools Design requirements Process and technology Access Control Identity Requirements Integration Metrics Risk Status Characteristic Organisations Data Models & Metadata Management Analytics Data Quality Management Classification & Information Metadata Lifecycle Management Process Maturity Semantic Capabilities Content Process Maturity Organisational Content Awareness Audit Information, Logging & Reporting Quality Security Technology & Infrastructure Business Value Organisational Reporting Awareness Consistency (Format & Semantics) Business Value Ownership (Roles & Responsibilities) Collection Automation Reporting Automation Evaluation & Measurement Remediation & Reporting October 23, 2013 39

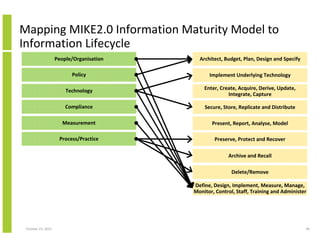

- 40. Mapping MIKE2.0 Information Maturity Model to Information Lifecycle • Model categories − People/Organisation − Policy − Technology − Compliance − Measurement − Process/Practice • Information lifecycle stages − Architect, Budget, Plan, Design and Specify − Implement Underlying Technology − Enter, Create, Acquire, Derive, Update, Integrate, Capture − Secure, Store, Replicate and Distribute − Present, Report, Analyse, Model − Preserve, Protect and Recover − Archive and Recall − Delete/Remove − Define, Design, Implement, Measure, Manage, Monitor, Control, Staff, Training and Administer

- 41. MIKE2.0 Information Maturity Model – Capability Areas People/ Organisation Policy Technology Compliance Measurement Process/Practice Audits Benchmarking Common Data Model Communication Plan B2B Data Integration Cleansing Audits Metadata Management Audits Benchmarking Common Data Services Data Integration (ETL & EAI) Data Ownership Data Quality Metrics Common Data Model Data Quality Metrics Data Quality Metrics Dashboard (Tracking / Trending) Data Analysis Common Data Services Data Analysis Data Analysis Security Profiling / Measurement Common Data Model Metadata Management Communication Plan Data Quality Strategy Data Capture Issue Identification Cleansing Data Capture Data Standardisation Service Level Agreements B2B Data Integration Data Ownership Executive Sponsorship Data Integration (ETL & EAI) Data Quality Metrics Data Quality Metrics Issue Identification Data Standardisation Communication Plan Dashboard (Tracking / Trending) Data Analysis Data Subject Area Coverage Data Quality Strategy Master Data ManagementData Stewardship Data Standardisation Platform Standardisation Data Validation Data Validation Privacy Master Data Management Executive Sponsorship Profiling / Measurement Metadata Management Master Data ManagementRoot Cause Analysis Platform Standardisation Privacy Security Profiling / Measurement Security Security October 23, 2013 Cleansing Dashboard (Tracking / Trending) Data Analysis Data Capture Data Integration (ETL & EAI) Data Ownership Data Quality Metrics Data Standardisation Data Stewardship Executive Sponsorship Issue Identification Master Data Management Metadata Management Privacy Profiling / Measurement 41

- 42. Mapping DAMA DMBOK to Information Lifecycle • Model categories − Data Governance − Data Architecture Management − Data Development − Data Operations Management − Data Security Management − Reference and Master Data Management − Data Warehousing and Business Intelligence Management − Document and Content Management − Metadata Management − Data Quality Management • Information lifecycle stages − Architect, Budget, Plan, Design and Specify − Implement Underlying Technology − Enter, Create, Acquire, Derive, Update, Integrate, Capture − Secure, Store, Replicate and Distribute − Present, Report, Analyse, Model − Preserve, Protect and Recover − Archive and Recall − Delete/Remove − Define, Design, Implement, Measure, Manage, Monitor, Control, Staff, Training and Administer

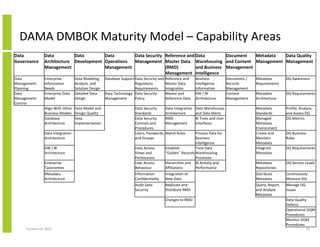

- 43. DAMA DMBOK Maturity Model – Capability Areas Data Governance Document Metadata Data Quality Data Data Data Data Security Reference and Data Architecture Development Operations Management Master Data Warehousing and Content Management Management and Business Management Management Management (RMD) Management Intelligence Data Management Planning Data Management Control Enterprise Information Needs Enterprise Data Model Data Modeling, Analysis, and Solution Design Detailed Data Design Align With Other Business Models Database Architecture Data Model and Design Quality Data Implementation Data Integration Architecture Database Support Data Security and Regulatory Requirements Data Technology Data Security Policy Management Reference and Master Data Integration Master and Reference Data Data Security Data Integration Standards Architecture RMD Data Security Management Controls and Procedures Users, Passwords, Match Rules and Groups Business Intelligence Information DW / BI Architecture Data Warehouses and Data Marts BI Tools and User Interfaces Documents / Records Management Content Management Metadata Requirements DQ Awareness Metadata Architecture DQ Requirements Metadata Standards Managed Metadata Environment Create and Maintain Metadata Integrate Metadata Profile, Analyse, and Assess DQ DQ Metrics DQ Business Rules Enterprise Taxonomies Data Access Views and Permissions User Access Behaviour Process Data for Business Intelligence Tune Data Establish “Golden” Records Warehousing Processes Hierarchies and BI Activity and Affiliations Performance Metadata Repositories DQ Service Levels Metadata Architecture Information Confidentiality Integration of New Data Distribute Metadata Continuously Measure DQ Audit Data Security Replicate and Distribute RMD Query, Report, and Analyse Metadata Manage DQ Issues DW / BI Architecture Changes to RMD October 23, 2013 DQ Requirements Data Quality Defects Operational DQM Procedures Monitor DQM Procedures 43

- 44. Mapping Enterprise Data Management Council Data Management Maturity Model to Information Lifecycle • Model categories − Data Management Goals − Corporate Culture − Governance Model − Data Management Funding − Data Requirements Lifecycle − Standards and Procedures − Data Sourcing − Architectural Framework − Platform and Integration − Data Quality Framework − Data Quality Assurance • Information lifecycle stages − Architect, Budget, Plan, Design and Specify − Implement Underlying Technology − Enter, Create, Acquire, Derive, Update, Integrate, Capture − Secure, Store, Replicate and Distribute − Present, Report, Analyse, Model − Preserve, Protect and Recover − Archive and Recall − Delete/Remove − Define, Design, Implement, Measure, Manage, Monitor, Control, Staff, Training and Administer

- 45. EDM Council Maturity Model – Capability Areas • Data Management Goals − DM Objectives − DM Priorities − Scope of DM Program • Corporate Culture − Alignment − Communication Strategy • Governance Model − Governance Structure − Organisational Model − Oversight − Governance Implementation − Human Capital Requirements − Measurement • Data Management Funding − Total Cost of Ownership − Business Case − Funding Model • Data Requirements Lifecycle − Data Requirements Definition − Operational Impact − Data Lifecycle Management • Standards and Procedures − Standards Areas − Standards Promulgation − Business Process and Data Flows − Data Dependencies Lifecycle − Ontology and Business Semantics − Data Change Management • Data Sourcing − Sourcing Requirements − Procurement & Provider Management • Architectural Framework − Architectural Standards − Architectural Approach • Platform and Integration − DM Platform − Application Integration − Release Management − Historical Data • Data Quality Framework − Data Quality Strategy Development − Data Quality Measurement and Analysis • Data Quality Assurance − Data Profiling − Data Quality Assessment − Data Quality for Integration − Data Cleansing

- 46. Differences in Data Maturity Models • Substantial differences in data maturity models indicate a lack of consensus about what comprises information management maturity • There is a need for a consistent approach, perhaps linked to an information lifecycle, to ground any assessment of maturity in the actual processes needed to manage information effectively