Manage transfers

This document shows how to manage existing data transfer configurations.

You can also manually trigger an existing transfer, also known as starting a backfill run.

View your transfers

View your existing transfer configurations by viewing information about each transfer, listing all existing transfers, and viewing transfer run history or log messages.

Required roles

To get the permissions that you need to view transfer details, ask your administrator to grant you the BigQuery User (roles/bigquery.user) IAM role on the project.

For more information about granting roles, see Manage access to projects, folders, and organizations.

You might also be able to get the required permissions through custom roles or other predefined roles.

Additionally, to view log messages through Google Cloud console, you must have permissions to view Cloud Logging data. The Logs Viewer role (roles/logging.viewer) gives you read-only access to all features of Logging. For more information about the Identity and Access Management (IAM) permissions and roles that apply to cloud logs data, see the Cloud Logging access control guide.

For more information about IAM roles in BigQuery Data Transfer Service, see Access control.

Get transfer details

After you create a transfer, you can get information about the transfer's configuration. The configuration includes the values you supplied when you created the transfer, as well as other important information such as resource names.

To get information about a transfer configuration:

Console

Go to the Data transfers page.

Select the transfer for which you want to get the details.

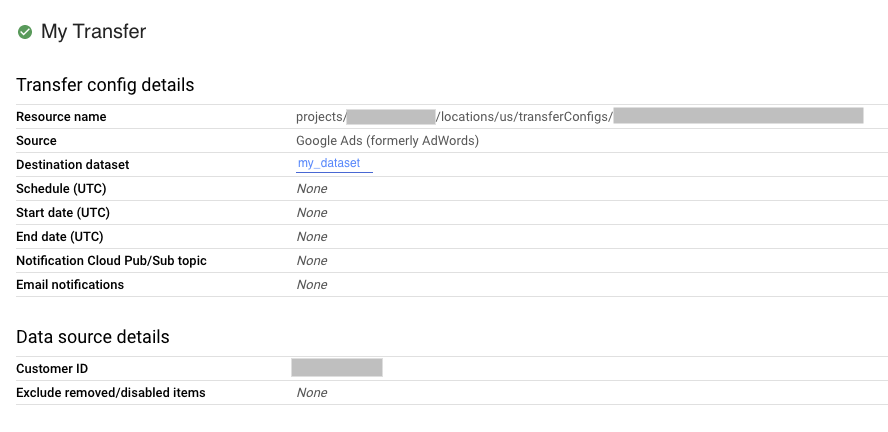

To see the transfer configuration and the data source details, click Configuration on the Transfer details page. The following example shows the configuration properties for a Google Ads transfer:

bq

Enter the bq show command and provide the transfer configuration's resource name. The --format flag can be used to control the output format.

bq show \
  --format=prettyjson \
  --transfer_config resource_name

Replace resource_name with the transfer's resource name (also referred to as the transfer configuration). If you do not know the transfer's resource name, find the resource name with:

bq ls --transfer_config --transfer_location=location

For example, enter the following command to display the transfer configuration for projects/myproject/locations/us/transferConfigs/1234a123-1234-1a23-1be9-12ab3c456de7:

bq show \
  --format=prettyjson \
  --transfer_config projects/myproject/locations/us/transferConfigs/1234a123-1234-1a23-1be9-12ab3c456de7
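When scripting around these commands, it can help to split a resource name into its parts. The following is a minimal sketch that assumes the projects/.../locations/.../transferConfigs/... layout used in the examples on this page; the function and class names are hypothetical and not part of any Google library.

```python
import re
from typing import NamedTuple


class TransferConfigName(NamedTuple):
    """Components of a transfer configuration resource name."""
    project: str
    location: str
    config_id: str


# Matches the location-qualified form shown in the examples on this page.
_NAME_PATTERN = re.compile(
    r"^projects/(?P<project>[^/]+)"
    r"/locations/(?P<location>[^/]+)"
    r"/transferConfigs/(?P<config_id>[^/]+)$"
)


def parse_transfer_config_name(resource_name: str) -> TransferConfigName:
    """Split a resource name into project, location, and configuration ID."""
    match = _NAME_PATTERN.match(resource_name)
    if match is None:
        raise ValueError(
            f"not a transfer configuration resource name: {resource_name!r}"
        )
    return TransferConfigName(**match.groupdict())


parts = parse_transfer_config_name(
    "projects/myproject/locations/us/transferConfigs/"
    "1234a123-1234-1a23-1be9-12ab3c456de7"
)
print(parts.project, parts.location, parts.config_id)
```

Validating the name before passing it to bq show gives an early, readable error when a run name or a bare ID is supplied by mistake.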

API

Use the projects.locations.transferConfigs.get method and supply the transfer configuration using the name parameter.

Java

Before trying this sample, follow the Java setup instructions in the BigQuery quickstart using client libraries. For more information, see the BigQuery Java API reference documentation.

To authenticate to BigQuery, set up Application Default Credentials. For more information, see Set up authentication for client libraries.

List transfer configurations

To list all existing transfer configurations in a project:

Console

In the Google Cloud console, go to the Data transfers page.

If there are any transfer configurations in the project, a list of the transfer configurations appears on the data transfers list.

bq

To list all transfer configurations for a project by location, enter the bq ls command and supply the --transfer_location and --transfer_config flags. You can also supply the --project_id flag to specify a particular project. If --project_id isn't specified, the default project is used. The --format flag can be used to control the output format.

To list transfer configurations for particular data sources, supply the --filter flag.

To view a particular number of transfer configurations in paginated format, supply the --max_results flag to specify the number of transfers. The command returns a page token that you supply using the --page_token flag to see the next n configurations. If --max_results is omitted, at most 1000 configurations are returned, and --max_results does not accept values greater than 1000. If your project has more than 1000 configurations, use --max_results and --page_token to iterate through them all.

bq ls \
  --transfer_config \
  --transfer_location=location \
  --project_id=project_id \
  --max_results=integer \
  --filter=dataSourceIds:data_sources

Replace the following:

- location is the location of the transfer configurations. The location is specified when you create a transfer.

- project_id is your project ID.

- integer is the number of results to show per page.

- data_sources is one or more of the following:

  - amazon_s3 - Amazon S3 data transfer
  - azure_blob_storage - Azure Blob Storage data transfer
  - dcm_dt - Campaign Manager data transfer
  - google_cloud_storage - Cloud Storage data transfer
  - cross_region_copy - Dataset Copy
  - dfp_dt - Google Ad Manager data transfer
  - adwords - Google Ads data transfer
  - google_ads - Google Ads data transfer (preview)
  - merchant_center - Google Merchant Center data transfer
  - play - Google Play data transfer
  - scheduled_query - Scheduled queries data transfer
  - doubleclick_search - Search Ads 360 data transfer
  - youtube_channel - YouTube Channel data transfer
  - youtube_content_owner - YouTube Content Owner data transfer
  - redshift - Amazon Redshift migration
  - on_premises - Teradata migration

Examples:

Enter the following command to display all transfer configurations in the US for your default project. The output is controlled using the --format flag.

bq ls \
  --format=prettyjson \
  --transfer_config \
  --transfer_location=us

Enter the following command to display all transfer configurations in the US for project ID myproject.

bq ls \
  --transfer_config \
  --transfer_location=us \
  --project_id=myproject

Enter the following command to list the 3 most recent transfer configurations.

bq ls \
  --transfer_config \
  --transfer_location=us \
  --project_id=myproject \
  --max_results=3

The command returns a next page token. Copy the page token and supply it in the bq ls command to see the next 3 results.

bq ls \
  --transfer_config \
  --transfer_location=us \
  --project_id=myproject \
  --max_results=3 \
  --page_token=AB1CdEfg_hIJKL
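The page-token pattern described above can be sketched in a few lines. This is an illustration only: fake_fetch_page is a hypothetical in-memory stand-in for whatever call returns one page of results plus a next-page token, and iterate_all is a made-up helper name. The 1000-item ceiling mirrors the --max_results limit stated earlier in this section.

```python
from typing import Callable, Iterator, List, Optional, Tuple

# One page of results plus the token for the next page (None when finished).
Page = Tuple[List[str], Optional[str]]


def iterate_all(
    fetch_page: Callable[[int, Optional[str]], Page],
    max_results: int = 1000,
) -> Iterator[str]:
    """Yield every item by following page tokens until none is returned."""
    if not 1 <= max_results <= 1000:
        raise ValueError("max_results must be between 1 and 1000")
    token: Optional[str] = None
    while True:
        items, token = fetch_page(max_results, token)
        yield from items
        if not token:
            break


def fake_fetch_page(max_results: int, page_token: Optional[str]) -> Page:
    """Hypothetical stand-in for a paginated list call over 7 fake configs."""
    data = [f"config-{i}" for i in range(7)]
    start = int(page_token) if page_token else 0
    end = start + max_results
    next_token = str(end) if end < len(data) else None
    return data[start:end], next_token


for name in iterate_all(fake_fetch_page, max_results=3):
    print(name)
```

The loop stops only when no token comes back, so a short final page is handled the same way as a full one.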

Enter the following command to list Ads and Campaign Manager transfer configurations for project ID myproject.

bq ls \
  --transfer_config \
  --transfer_location=us \
  --project_id=myproject \
  --filter=dataSourceIds:dcm_dt,google_ads
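If you generate these commands from a script, a small builder can catch a misspelled data source ID before the command runs. The sketch below only assembles an argument list using the flags documented in this section; build_list_command is a hypothetical helper, and the set of IDs is copied from the data source list earlier in this section.

```python
from typing import Iterable, List, Optional

# Data source IDs copied from the list earlier in this section.
KNOWN_DATA_SOURCES = frozenset({
    "amazon_s3", "azure_blob_storage", "dcm_dt", "google_cloud_storage",
    "cross_region_copy", "dfp_dt", "adwords", "google_ads",
    "merchant_center", "play", "scheduled_query", "doubleclick_search",
    "youtube_channel", "youtube_content_owner", "redshift", "on_premises",
})


def build_list_command(
    location: str,
    data_sources: Iterable[str] = (),
    project_id: Optional[str] = None,
    max_results: Optional[int] = None,
) -> List[str]:
    """Assemble the argument list for listing transfer configurations."""
    sources = list(data_sources)
    unknown = [s for s in sources if s not in KNOWN_DATA_SOURCES]
    if unknown:
        raise ValueError(f"unknown data source IDs: {unknown}")
    if max_results is not None and not 1 <= max_results <= 1000:
        raise ValueError("max_results must be between 1 and 1000")

    args = ["bq", "ls", "--transfer_config", f"--transfer_location={location}"]
    if project_id:
        args.append(f"--project_id={project_id}")
    if max_results is not None:
        args.append(f"--max_results={max_results}")
    if sources:
        args.append("--filter=dataSourceIds:" + ",".join(sources))
    return args


print(build_list_command("us", ["dcm_dt", "google_ads"], project_id="myproject"))
```

Returning a list rather than a single string means the result can be passed to subprocess.run without shell quoting concerns.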

API

Use the projects.locations.transferConfigs.list method and supply the project ID using the parent parameter.

Java

Before trying this sample, follow the Java setup instructions in the BigQuery quickstart using client libraries. For more information, see the BigQuery Java API reference documentation.

To authenticate to BigQuery, set up Application Default Credentials. For more information, see Set up authentication for client libraries.

Python

Before trying this sample, follow the Python setup instructions in the BigQuery quickstart using client libraries. For more information, see the BigQuery Python API reference documentation.

To authenticate to BigQuery, set up Application Default Credentials. For more information, see Set up authentication for client libraries.

View transfer run history

As your scheduled transfers are run, a run history is kept for each transfer configuration that includes successful transfer runs and transfer runs that fail. Transfer runs more than 90 days old are automatically deleted from the run history.

To view the run history for a transfer configuration:

Console

In the Google Cloud console, go to the Data transfers page.

Click on the transfer in the data transfers list.

You will be on the RUN HISTORY page for the selected transfer.

bq

To list transfer runs for a particular transfer configuration, enter the bq ls command and supply the --transfer_run flag. You can also supply the --project_id flag to specify a particular project. If resource_name doesn't contain project information, the --project_id value is used. If --project_id isn't specified, the default project is used. The --format flag can be used to control the output format.

To view a particular number of transfer runs, supply the --max_results flag. The command returns a page token that you supply using the --page_token flag to see the next n runs.

To list transfer runs based on run state, supply the --filter flag.

bq ls \
  --transfer_run \
  --max_results=integer \
  --transfer_location=location \
  --project_id=project_id \
  --filter=states:state, ... \
  resource_name

Replace the following:

- integer is the number of results to return.

- location is the location of the transfer configurations. The location is specified when you create a transfer.

- project_id is your project ID.

- state, ... is one of the following or a comma-separated list:

  - SUCCEEDED
  - FAILED
  - PENDING
  - RUNNING
  - CANCELLED

- resource_name is the transfer's resource name (also referred to as the transfer configuration). If you do not know the transfer's resource name, find the resource name with: bq ls --transfer_config --transfer_location=location

Examples:

Enter the following command to display the 3 latest runs for transfer configuration projects/myproject/locations/us/transferConfigs/1234a123-1234-1a23-1be9-12ab3c456de7. The output is controlled using the --format flag.

bq ls \
  --format=prettyjson \
  --transfer_run \
  --max_results=3 \
  --transfer_location=us \
  projects/myproject/locations/us/transferConfigs/1234a123-1234-1a23-1be9-12ab3c456de7

The command returns a next page token. Copy the page token and supply it in the bq ls command to see the next 3 results.

bq ls \
  --format=prettyjson \
  --transfer_run \
  --max_results=3 \
  --page_token=AB1CdEfg_hIJKL \
  --transfer_location=us \
  projects/myproject/locations/us/transferConfigs/1234a123-1234-1a23-1be9-12ab3c456de7

Enter the following command to display all failed runs for transfer configuration projects/myproject/locations/us/transferConfigs/1234a123-1234-1a23-1be9-12ab3c456de7.

bq ls \
  --format=prettyjson \
  --transfer_run \
  --filter=states:FAILED \
  --transfer_location=us \
  projects/myproject/locations/us/transferConfigs/1234a123-1234-1a23-1be9-12ab3c456de7
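To summarize a run history, you could tally runs by state from saved command output. The sketch below assumes the --format=prettyjson output is a JSON array of run objects that each carry a state field holding one of the state values listed in this section; that output shape is an assumption to verify against your own results, and the sample data is invented for illustration.

```python
import json
from collections import Counter

# Invented sample standing in for saved prettyjson output (shape is assumed).
SAMPLE_OUTPUT = """
[
  {"name": "example-run-1", "state": "SUCCEEDED"},
  {"name": "example-run-2", "state": "FAILED"},
  {"name": "example-run-3", "state": "SUCCEEDED"}
]
"""


def count_runs_by_state(prettyjson_output: str) -> Counter:
    """Tally transfer runs by their state field."""
    runs = json.loads(prettyjson_output)
    # Runs without a state field are grouped under UNKNOWN instead of failing.
    return Counter(run.get("state", "UNKNOWN") for run in runs)


counts = count_runs_by_state(SAMPLE_OUTPUT)
for state, total in sorted(counts.items()):
    print(f"{state}: {total}")
```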

API

Use the projects.locations.transferConfigs.runs.list method and specify the transfer configuration's resource name using the parent parameter.

Java

Before trying this sample, follow the Java setup instructions in the BigQuery quickstart using client libraries. For more information, see the BigQuery Java API reference documentation.

To authenticate to BigQuery, set up Application Default Credentials. For more information, see Set up authentication for client libraries.

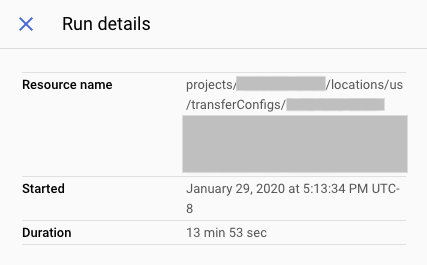

View transfer run details and log messages

When a transfer run appears in the run history, you can view the run details including log messages, warnings and errors, the run name, and the start and end time.

To view transfer run details:

Console

In the Google Cloud console, go to the Data transfers page.

Click on the transfer in the data transfers list.

You will be on the RUN HISTORY page for the selected transfer.

Click on an individual run of the transfer, and the Run details panel will open for that run of the transfer.

In the Run details, note any error messages. This information is needed if you contact Cloud Customer Care. The run details also include log messages and warnings.

bq

To view transfer run details, enter the bq show command and provide the transfer run's Run Name using the --transfer_run flag. The --format flag can be used to control the output format.

bq show \
  --format=prettyjson \
  --transfer_run run_name

Replace run_name with the transfer run's Run Name. You can retrieve the Run Name by using the bq ls command.

Example:

Enter the following command to display details for transfer run projects/myproject/locations/us/transferConfigs/1234a123-1234-1a23-1be9-12ab3c456de7/runs/1a2b345c-0000-1234-5a67-89de1f12345g.

bq show \
  --format=prettyjson \
  --transfer_run \
  projects/myproject/locations/us/transferConfigs/1234a123-1234-1a23-1be9-12ab3c456de7/runs/1a2b345c-0000-1234-5a67-89de1f12345g
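As the example shows, a Run Name is the transfer configuration's resource name followed by /runs/ and a run ID. A minimal sketch for recovering both parts, assuming that layout holds for your run names (split_run_name is a hypothetical helper):

```python
from typing import Tuple


def split_run_name(run_name: str) -> Tuple[str, str]:
    """Return (transfer configuration resource name, run ID) for a Run Name."""
    config_name, separator, run_id = run_name.rpartition("/runs/")
    # rpartition returns empty strings when "/runs/" is absent.
    if not separator or not config_name or not run_id or "/" in run_id:
        raise ValueError(f"not a transfer run name: {run_name!r}")
    return config_name, run_id


config_name, run_id = split_run_name(
    "projects/myproject/locations/us/transferConfigs/"
    "1234a123-1234-1a23-1be9-12ab3c456de7/runs/"
    "1a2b345c-0000-1234-5a67-89de1f12345g"
)
print(config_name)
print(run_id)
```

This is handy when a log line gives you a Run Name and you need the parent configuration for a follow-up bq show --transfer_config call.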

To view transfer log messages for a transfer run, enter the bq ls command with the --transfer_log flag. You can filter log messages by type using the --message_type flag.

To view a particular number of log messages, supply the --max_results flag. The command returns a page token that you supply using the --page_token flag to see the next n messages.

bq ls \
  --transfer_log \
  --max_results=integer \
  --message_type=messageTypes:message_type \
  run_name

Replace the following:

- integer is the number of log messages to return.

- message_type is the type of log message to view (a single value or a comma-separated list):

  - INFO
  - WARNING
  - ERROR

- run_name is the transfer run's Run Name. You can retrieve the Run Name using the bq ls command.

Examples:

Enter the following command to view the first 2 log messages for transfer run projects/myproject/locations/us/transferConfigs/1234a123-1234-1a23-1be9-12ab3c456de7/runs/1a2b345c-0000-1234-5a67-89de1f12345g.

bq ls \
  --transfer_log \
  --max_results=2 \
  projects/myproject/locations/us/transferConfigs/1234a123-1234-1a23-1be9-12ab3c456de7/runs/1a2b345c-0000-1234-5a67-89de1f12345g

The command returns a next page token. Copy the page token and supply it in the bq ls command to see the next 2 results.

bq ls \
  --transfer_log \
  --max_results=2 \
  --page_token=AB1CdEfg_hIJKL \
  projects/myproject/locations/us/transferConfigs/1234a123-1234-1a23-1be9-12ab3c456de7/runs/1a2b345c-0000-1234-5a67-89de1f12345g

Enter the following command to view only error messages for transfer run projects/myproject/locations/us/transferConfigs/1234a123-1234-1a23-1be9-12ab3c456de7/runs/1a2b345c-0000-1234-5a67-89de1f12345g.

bq ls \
  --transfer_log \
  --message_type=messageTypes:ERROR \
  projects/myproject/locations/us/transferConfigs/1234a123-1234-1a23-1be9-12ab3c456de7/runs/1a2b345c-0000-1234-5a67-89de1f12345g

API

Use the projects.transferConfigs.runs.transferLogs.list method and supply the transfer run's Run Name using the parent parameter.

Java

Before trying this sample, follow the Java setup instructions in the BigQuery quickstart using client libraries. For more information, see the BigQuery Java API reference documentation.

To authenticate to BigQuery, set up Application Default Credentials. For more information, see Set up authentication for client libraries.

Modify your transfers

You can modify existing transfers by editing information on the transfer configuration, updating a user's credentials attached to a transfer configuration, and disabling or deleting a transfer.

Required roles

To get the permissions that you need to modify transfers,

ask your administrator to grant you the

BigQuery Admin (roles/bigquery.admin) IAM role on the project.

For more information about granting roles, see Manage access to projects, folders, and organizations.

You might also be able to get the required permissions through custom roles or other predefined roles.

Update a transfer

After you create a transfer configuration, you can edit the following fields:

- Destination dataset

- Display name

- Any of the parameters specified for the specific transfer type

- Run notification settings

- Service account

You cannot edit the source of a transfer once a transfer is created.

To update a transfer:

Console

In the Google Cloud console, go to the Data transfers page.

Click on the transfer in the data transfers list.

Click EDIT to update the transfer configuration.

bq

Enter the bq update command, provide the transfer configuration's resource name using the --transfer_config flag, and supply the --display_name, --params, --refresh_window_days, --schedule, or --target_dataset flags. You can optionally supply a --destination_kms_key flag for scheduled queries or Cloud Storage transfers.

bq update \
  --display_name='NAME' \
  --params='PARAMETERS' \
  --refresh_window_days=INTEGER \
  --schedule='SCHEDULE' \
  --target_dataset=DATASET_ID \
  --destination_kms_key="DESTINATION_KEY" \
  --transfer_config \
  --service_account_name=SERVICE_ACCOUNT \
  RESOURCE_NAME

Replace the following:

- NAME: the display name for the transfer configuration.

- PARAMETERS: the parameters for the transfer configuration in JSON format. For example: --params='{"param1":"param_value1"}'. The following parameters are editable:

  - Campaign Manager: bucket and network_id
  - Google Ad Manager: bucket and network_code
  - Google Ads: customer_id
  - Google Merchant Center: merchant_id
  - Google Play: bucket and table_suffix
  - Scheduled Query: destination_table_kms_key, destination_table_name_template, partitioning_field, partitioning_type, query, and write_disposition
  - Search Ads 360: advertiser_id, agency_id, custom_floodlight_variables, include_removed_entities, and table_filter
  - YouTube Channel: table_suffix
  - YouTube Content Owner: content_owner_id and table_suffix

- INTEGER: a value from 0 to 30. For information on setting the refresh window, see the documentation for your transfer type.

- SCHEDULE: a recurring schedule, such as --schedule="every 3 hours". For a description of the schedule syntax, see Formatting the schedule.

- DATASET_ID: the target dataset for the transfer configuration.

- DESTINATION_KEY: the Cloud KMS key resource ID, for example, projects/project_name/locations/us/keyRings/key_ring_name/cryptoKeys/key_name. CMEK is only available for scheduled queries or Cloud Storage transfers.

- SERVICE_ACCOUNT: specify a service account to use with this transfer.

- RESOURCE_NAME: the transfer's resource name (also referred to as the transfer configuration). If you don't know the transfer's resource name, find the resource name with: bq ls --transfer_config --transfer_location=location

Examples:

The following command updates the display name, target dataset, refresh window, and parameters for Google Ads transfer projects/myproject/locations/us/transferConfigs/1234a123-1234-1a23-1be9-12ab3c456de7:

bq update \
  --display_name='My changed transfer' \
  --params='{"customer_id":"123-123-5678"}' \
  --refresh_window_days=3 \
  --target_dataset=mydataset2 \
  --transfer_config \
  projects/myproject/locations/us/transferConfigs/1234a123-1234-1a23-1be9-12ab3c456de7

The following command updates the parameters and schedule for Scheduled Query transfer projects/myproject/locations/us/transferConfigs/5678z567-5678-5z67-5yx9-56zy3c866vw9:

bq update \
  --params='{"destination_table_name_template":"test", "write_disposition":"APPEND"}' \
  --schedule="every 24 hours" \
  --transfer_config \
  projects/myproject/locations/us/transferConfigs/5678z567-5678-5z67-5yx9-56zy3c866vw9
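Hand-writing the JSON for --params is easy to get wrong once values contain quotes or spaces. One way to avoid that, sketched here under the assumption that you launch bq from a script, is to serialize the parameters with json.dumps and let shlex handle shell quoting. build_update_command is a hypothetical helper; it uses only flags described in this section.

```python
import json
import shlex
from typing import Dict, List, Optional


def build_update_command(
    resource_name: str,
    params: Optional[Dict[str, str]] = None,
    display_name: Optional[str] = None,
    schedule: Optional[str] = None,
) -> List[str]:
    """Assemble a bq update argument list for a transfer configuration."""
    args = ["bq", "update"]
    if display_name is not None:
        args.append(f"--display_name={display_name}")
    if params:
        # Compact separators keep the JSON free of incidental whitespace.
        args.append("--params=" + json.dumps(params, separators=(",", ":")))
    if schedule is not None:
        args.append(f"--schedule={schedule}")
    args += ["--transfer_config", resource_name]
    return args


command = build_update_command(
    "projects/myproject/locations/us/transferConfigs/"
    "1234a123-1234-1a23-1be9-12ab3c456de7",
    params={"customer_id": "123-123-5678"},
    display_name="My changed transfer",
)
# shlex.join quotes each argument so the line can be pasted into a shell.
print(shlex.join(command))
```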

API

Use the projects.transferConfigs.patch method and supply the transfer's resource name using the transferConfig.name parameter. If you do not know the transfer's resource name, find the resource name with:

bq ls --transfer_config --transfer_location=location

You can also call the projects.locations.transferConfigs.list method and supply the project ID using the parent parameter to list all transfers.

Java

Before trying this sample, follow the Java setup instructions in the BigQuery quickstart using client libraries. For more information, see the BigQuery Java API reference documentation.

To authenticate to BigQuery, set up Application Default Credentials. For more information, see Set up authentication for client libraries.

Python

Before trying this sample, follow the Python setup instructions in the BigQuery quickstart using client libraries. For more information, see the BigQuery Python API reference documentation.

To authenticate to BigQuery, set up Application Default Credentials. For more information, see Set up authentication for client libraries.

Update credentials

A transfer uses the credentials of the user that created it. If you need to change the user attached to a transfer configuration, you can update the transfer's credentials. This is useful if the user who created the transfer is no longer with your organization.

To update the credentials for a transfer:

Console

In the Google Cloud console, sign in as the user you want to transfer ownership to.

Navigate to the Data transfers page.

Click the transfer in the data transfers list.

Click the MORE menu, and then select Refresh credentials.

Click Allow to give the BigQuery Data Transfer Service permission to view your reporting data and to access and manage the data in BigQuery.

bq

Enter the bq update command, provide the transfer configuration's resource name using the --transfer_config flag, and supply the --update_credentials flag.

bq update \
  --update_credentials=boolean \
  --transfer_config \
  resource_name

Replace the following:

- boolean is a boolean value indicating whether the credentials should be updated for the transfer configuration.

- resource_name is the transfer's resource name (also referred to as the transfer configuration). If you do not know the transfer's resource name, find the resource name with: bq ls --transfer_config --transfer_location=location

Examples:

Enter the following command to update the credentials for Google Ads transfer projects/myproject/locations/us/transferConfigs/1234a123-1234-1a23-1be9-12ab3c456de7.

bq update \
  --update_credentials=true \
  --transfer_config \
  projects/myproject/locations/us/transferConfigs/1234a123-1234-1a23-1be9-12ab3c456de7

API

Use the projects.transferConfigs.patch method and supply the authorizationCode and updateMask parameters.

Java

Before trying this sample, follow the Java setup instructions in the BigQuery quickstart using client libraries. For more information, see the BigQuery Java API reference documentation.

To authenticate to BigQuery, set up Application Default Credentials. For more information, see Set up authentication for client libraries.

Python

Before trying this sample, follow the Python setup instructions in the BigQuery quickstart using client libraries. For more information, see the BigQuery Python API reference documentation.

To authenticate to BigQuery, set up Application Default Credentials. For more information, see Set up authentication for client libraries.

Disable a transfer

When you disable a transfer, disabled is added to the transfer name. When the transfer is disabled, no new transfer runs are scheduled, and no new backfills are allowed. Any transfer runs in progress are completed.

Disabling a transfer does not remove any data already transferred to BigQuery. Data previously transferred incurs standard BigQuery storage costs until you delete the dataset or delete the tables.

To disable a transfer:

Console

In the Google Cloud console, go to the BigQuery page.

Click Transfers.

On the Transfers page, click on the transfer in the list that you want to disable.

Click on DISABLE. To re-enable the transfer, click on ENABLE.

bq

Disabling a transfer is not supported by the CLI.

API

Use the projects.locations.transferConfigs.patch method and set disabled to true in the projects.locations.transferConfig resource.
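As a rough illustration of what such a patch request carries, the sketch below builds the pieces for a disable or re-enable call without sending anything. It assumes the request names the configuration, limits the update to the disabled field through an update mask, and sends a JSON body with that one field. build_disable_patch and the dictionary keys are hypothetical names chosen for this example, not part of any client library.

```python
import json
from typing import Dict


def build_disable_patch(resource_name: str, disabled: bool = True) -> Dict[str, str]:
    """Describe a patch that sets only the disabled field of a transfer config."""
    return {
        # The transfer configuration to patch.
        "name": resource_name,
        # Restrict the update to one field so other settings stay untouched.
        "update_mask": "disabled",
        # JSON body carrying the new value.
        "body": json.dumps({"disabled": disabled}),
    }


request = build_disable_patch(
    "projects/myproject/locations/us/transferConfigs/"
    "1234a123-1234-1a23-1be9-12ab3c456de7"
)
print(request["update_mask"], request["body"])
```

Passing disabled=False produces the matching request to re-enable the transfer.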

Java

Before trying this sample, follow the Java setup instructions in the BigQuery quickstart using client libraries. For more information, see the BigQuery Java API reference documentation.

To authenticate to BigQuery, set up Application Default Credentials. For more information, see Set up authentication for client libraries.

To re-enable the transfer:

Before trying this sample, follow the Java setup instructions in the BigQuery quickstart using client libraries. For more information, see the BigQuery Java API reference documentation.

To authenticate to BigQuery, set up Application Default Credentials. For more information, see Set up authentication for client libraries.

Delete a transfer

When a transfer is deleted, no new transfer runs are scheduled. Any transfer runs in progress are stopped.

Deleting a transfer does not remove any data already transferred to BigQuery. Data previously transferred incurs standard BigQuery storage costs until you delete the dataset or delete the tables.

To delete a transfer:

Console

In the Google Cloud console, go to the BigQuery page.

Click Transfers.

On the Transfers page, click on the transfer in the list that you want to delete.

Click on DELETE. As a safety measure you will need to type the word "delete" into a box to confirm your intention.

bq

Enter the bq rm command and provide the transfer configuration's resource name. You can use the -f flag to delete a transfer config without confirmation.

bq rm \
  -f \
  --transfer_config \
  resource_name

Where:

- resource_name is the transfer's resource name (also referred to as the transfer configuration). If you do not know the transfer's resource name, issue the bq ls --transfer_config --transfer_location=location command to list all transfers.

For example, enter the following command to delete transfer configuration projects/myproject/locations/us/transferConfigs/1234a123-1234-1a23-1be9-12ab3c456de7.

bq rm \
  --transfer_config \
  projects/myproject/locations/us/transferConfigs/1234a123-1234-1a23-1be9-12ab3c456de7

API

Use the projects.locations.transferConfigs.delete method and supply the resource you're deleting using the name parameter.

Java

Before trying this sample, follow the Java setup instructions in the BigQuery quickstart using client libraries. For more information, see the BigQuery Java API reference documentation.

To authenticate to BigQuery, set up Application Default Credentials. For more information, see Set up authentication for client libraries.

Python

Before trying this sample, follow the Python setup instructions in the BigQuery quickstart using client libraries. For more information, see the BigQuery Python API reference documentation.

To authenticate to BigQuery, set up Application Default Credentials. For more information, see Set up authentication for client libraries.

Manually trigger a transfer

You can manually trigger a transfer, also called a backfill run, to load additional data files outside of your automatically scheduled transfers. With data sources that support runtime parameters, you can also manually trigger a transfer by specifying a date or a time range to load past data from.

You can manually initiate data backfills at any time. In addition to source limits, the BigQuery Data Transfer Service supports a maximum of 180 days per backfill request. Simultaneous backfill requests are not supported.
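Because a single backfill request covers at most 180 days and simultaneous requests are not supported, a longer range has to be split into consecutive windows and submitted one after another. The following is a minimal sketch of the splitting step only. split_backfill_range is a hypothetical helper, and how the service treats window boundaries (inclusive or exclusive) is not covered here, so check for overlap or gaps against your data source's behavior.

```python
from datetime import datetime, timedelta, timezone
from typing import List, Tuple

# Maximum span of one backfill request, as stated above.
MAX_BACKFILL = timedelta(days=180)


def split_backfill_range(
    start: datetime,
    end: datetime,
    max_span: timedelta = MAX_BACKFILL,
) -> List[Tuple[datetime, datetime]]:
    """Split [start, end) into consecutive windows no longer than max_span."""
    if start >= end:
        raise ValueError("start must be earlier than end")
    windows = []
    cursor = start
    while cursor < end:
        window_end = min(cursor + max_span, end)
        windows.append((cursor, window_end))
        cursor = window_end
    return windows


windows = split_backfill_range(
    datetime(2022, 1, 1, tzinfo=timezone.utc),
    datetime(2023, 1, 1, tzinfo=timezone.utc),
)
# Submit these one at a time, waiting for each request to finish.
for window_start, window_end in windows:
    print(window_start.isoformat(), "->", window_end.isoformat())
```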

For information on how much data is available for backfill, see the transfer guide for your data source.

Required roles

To get the permissions that you need to modify transfers,

ask your administrator to grant you the

BigQuery Admin (roles/bigquery.admin) IAM role on the project.

For more information about granting roles, see Manage access to projects, folders, and organizations.

You might also be able to get the required permissions through custom roles or other predefined roles.

Manually trigger a transfer or backfill

You can manually trigger a transfer or backfill run with the following methods:

- Select your transfer using the Google Cloud console, and then click Run transfer now or Schedule backfill.

- Run the bq mk --transfer_run command with the bq command-line tool.

- Call the projects.locations.transferConfigs.startManualRuns API method.

For detailed instructions about each method, select the corresponding tab:

Console

In the Google Cloud console, go to the Data transfers page.

Select your transfer from the list.

Click Run transfer now or Schedule backfill. Only one option is available depending on the type of transfer configuration.

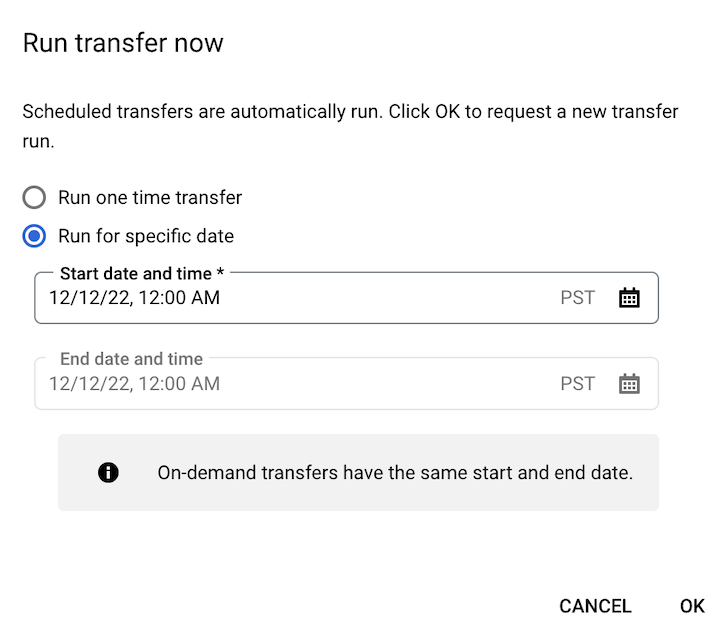

If you clicked Run transfer now, select Run one time transfer or Run for specific date as applicable. If you selected Run for specific date, select a specific date and time:

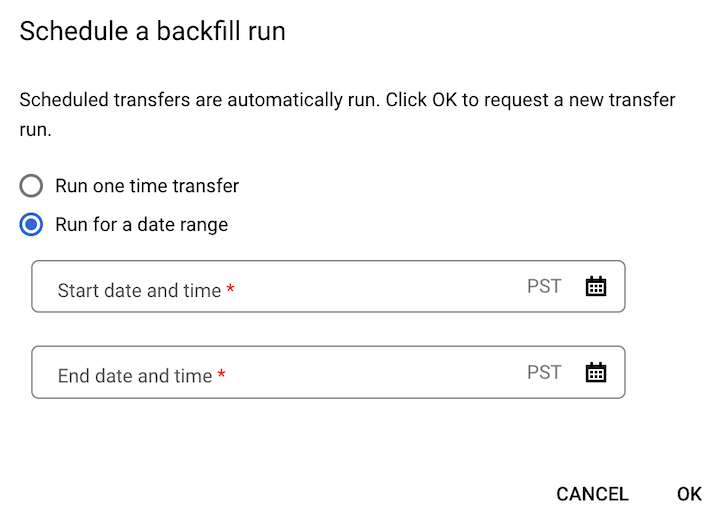

If you clicked Schedule backfill, select Run one time transfer or Run for a date range as applicable. If you selected Run for a date range, select a start and end date and time:

Click OK.

bq

To manually start a transfer run, enter the bq mk command with the --transfer_run flag:

bq mk \
  --transfer_run \
  --run_time='RUN_TIME' \
  RESOURCE_NAME

Replace the following:

- RUN_TIME is a timestamp that specifies the date of a past transfer. Use timestamps that end in Z or contain a valid time zone offset, for example, 2022-08-19T12:11:35.00Z or 2022-05-25T00:00:00+00:00.

  - If your transfer does not have a runtime parameter, or you just want to trigger a transfer now without specifying a past transfer, input your current time in this field.

- RESOURCE_NAME is the resource name listed on your transfer configuration, for example, projects/myproject/locations/us/transferConfigs/1234a123-1234-1a23-1be9-12ab3c456de7.

  - To find the resource name of a transfer configuration, see Get information about transfers.

  - The resource name uses the Relative resource name format.

To manually start a transfer run for a range of dates, enter the bq mk command with the --transfer_run flag along with a date range:

bq mk \
  --transfer_run \
  --start_time='START_TIME' \
  --end_time='END_TIME' \
  RESOURCE_NAME

Replace the following:

- START_TIME and END_TIME are timestamps that end in Z or contain a valid time zone offset. These values specify the time range containing the previous transfer runs that you want to backfill from, for example, 2022-08-19T12:11:35.00Z or 2022-05-25T00:00:00+00:00.

- RESOURCE_NAME is the resource name listed on your transfer configuration, for example, projects/myproject/locations/us/transferConfigs/1234a123-1234-1a23-1be9-12ab3c456de7.

  - To find the resource name of a transfer configuration, see Get information about transfers.

  - The resource name uses the Relative resource name format.
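The timestamp flags expect values that end in Z or carry a time zone offset. A small sketch for producing the Z form from a Python datetime is shown here; to_run_time is a hypothetical helper, and it rejects naive datetimes so that a missing time zone is never silently assumed. Its output omits fractional seconds, unlike the 2022-08-19T12:11:35.00Z example above.

```python
from datetime import datetime, timedelta, timezone


def to_run_time(moment: datetime) -> str:
    """Format an aware datetime as a UTC timestamp ending in Z."""
    if moment.tzinfo is None or moment.utcoffset() is None:
        raise ValueError("datetime must include a time zone")
    # Convert to UTC first so the trailing Z is accurate.
    return moment.astimezone(timezone.utc).strftime("%Y-%m-%dT%H:%M:%SZ")


# A time given with a +02:00 offset is converted to UTC before formatting.
local = datetime(2022, 8, 19, 14, 11, 35, tzinfo=timezone(timedelta(hours=2)))
print(to_run_time(local))

# Use the current time to trigger a run now.
print(to_run_time(datetime.now(timezone.utc)))
```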

API

To manually start a transfer run, use the projects.locations.transferConfigs.startManualRuns method and provide the transfer configuration resource name using the parent parameter. To find the resource name of a transfer configuration, see Get information about transfers.

"requestedRunTime": "RUN_TIME"

Replace the following:

- RUN_TIME is a timestamp that specifies the date of a past transfer. Use timestamps that end in Z or contain a valid time zone offset, for example, 2022-08-19T12:11:35.00Z or 2022-05-25T00:00:00+00:00.

  - If your transfer does not have a runtime parameter, or you just want to trigger a transfer now without specifying a past transfer, input your current time in this field.

To manually start a transfer run for a range of dates, provide a date range:

"requestedTimeRange": {
  "startTime": "START_TIME",
  "endTime": "END_TIME"
}

Replace the following:

- START_TIME and END_TIME are timestamps that end in Z or contain a valid time zone offset. These values specify the time range containing the previous transfer runs that you want to backfill from, for example, 2022-08-19T12:11:35.00Z or 2022-05-25T00:00:00+00:00.
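The two request shapes above are alternatives: a request carries either a single requested run time or a time range. A minimal sketch that builds the JSON body and rejects ambiguous input follows. build_manual_run_body is a hypothetical helper; only the field names requestedRunTime, requestedTimeRange, startTime, and endTime come from this page.

```python
import json
from typing import Optional


def build_manual_run_body(
    run_time: Optional[str] = None,
    start_time: Optional[str] = None,
    end_time: Optional[str] = None,
) -> str:
    """Build a startManualRuns body with a run time or a time range."""
    has_range = start_time is not None or end_time is not None
    if run_time is not None and has_range:
        raise ValueError("supply either run_time or a time range, not both")
    if run_time is not None:
        body = {"requestedRunTime": run_time}
    elif start_time is not None and end_time is not None:
        body = {
            "requestedTimeRange": {
                "startTime": start_time,
                "endTime": end_time,
            }
        }
    else:
        raise ValueError("supply run_time, or both start_time and end_time")
    return json.dumps(body, indent=2)


print(build_manual_run_body(run_time="2022-08-19T12:11:35.00Z"))
print(build_manual_run_body(
    start_time="2022-05-25T00:00:00+00:00",
    end_time="2022-08-19T12:11:35.00Z",
))
```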

Java

Before trying this sample, follow the Java setup instructions in the BigQuery quickstart using client libraries. For more information, see the BigQuery Java API reference documentation.

To authenticate to BigQuery, set up Application Default Credentials. For more information, see Set up authentication for client libraries.

Python

Before trying this sample, follow the Python setup instructions in the BigQuery quickstart using client libraries. For more information, see the BigQuery Python API reference documentation.

To authenticate to BigQuery, set up Application Default Credentials. For more information, see Set up authentication for client libraries.

Logging and monitoring

The BigQuery Data Transfer Service exports logs and metrics to Cloud Monitoring and Cloud Logging that provide observability into your transfers. You can use Monitoring to set up dashboards to monitor transfers, evaluate transfer run performance, and view error messages to troubleshoot transfer failures. You can use Logging to view logs related to a transfer run or a transfer configuration.

You can also view audit logs that are available to the BigQuery Data Transfer Service for transfer activity and data access logs.