This project is a port of Andrej Karpathy's llm.c to Mojo, currently in beta. Visit llm.c for a detailed explanation of the original project.

Please note that this repository has not yet been updated to Mojo 24.5 because of an issue in the stable release: parallelize won't work with local variables. The issue is fixed in the nightly release but not in stable Mojo 24.5.

- train_gpt2_basic.mojo: A basic port of train_gpt2.c to Mojo that does not leverage Mojo's performance features. Beyond the initial commit, we will not update train_gpt2_basic, except for necessary bug fixes.

- train_gpt2.mojo: An enhanced version that uses Mojo's performance features, such as vectorization and parallelization. Work in progress.
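The two optimizations named above can be illustrated outside Mojo. The Python sketch below is not the project's code. It mimics the shape of a matmul forward pass in the style of llm.c (weights stored as OC rows of length C). The inner dot product is split into fixed-width chunks plus a scalar tail, which is the loop structure vectorization produces. Independent output rows are handed to a pool of workers, which is the idea behind parallelization. SIMD_WIDTH and the helper names are hypothetical, and pure Python gains no real speed from either trick (the GIL serializes the threads); the point is only the decomposition.

```python
from concurrent.futures import ThreadPoolExecutor

SIMD_WIDTH = 8  # hypothetical vector width chosen for illustration


def dot_chunked(a, b, width=SIMD_WIDTH):
    """Dot product computed in fixed-width chunks plus a scalar tail."""
    n = len(a)
    main = n - n % width  # largest multiple of `width` that fits in n
    acc = 0.0
    # Main loop: each iteration handles one full "vector" of `width` elements.
    for i in range(0, main, width):
        acc += sum(x * y for x, y in zip(a[i:i + width], b[i:i + width]))
    # Tail loop: leftover elements that do not fill a whole vector.
    for i in range(main, n):
        acc += a[i] * b[i]
    return acc


def matmul_forward(inp, weight, bias, workers=4):
    """out[r][o] = bias[o] + dot(inp[r], weight[o]).

    inp: list of rows of length C; weight: OC rows of length C; bias: OC values.
    Output rows do not depend on each other, so they are computed in parallel.
    """
    def row(r):
        return [bias[o] + dot_chunked(inp[r], weight[o]) for o in range(len(weight))]

    with ThreadPoolExecutor(max_workers=workers) as pool:
        # pool.map preserves input order, so row r of the result is output row r.
        return list(pool.map(row, range(len(inp))))
```

For example, matmul_forward([[1, 2, 3], [4, 5, 6]], [[1, 0, 1], [0, 1, 0]], [0.5, -1.0]) returns [[4.5, 1.0], [10.5, 4.0]].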

Before using llm.🔥 for the first time, please run the following preparatory commands:

pip install -r requirements

python prepro_tinyshakespeare.py

python train_gpt2.py

- Ensure that the Magic command line tool is installed by following the Modular Docs, then activate the mojo-24-4 environment and run the training script:

magic shell -e mojo-24-4

mojo train_gpt2.mojo

For a more detailed step-by-step guide, including additional setup details and options, please refer to our detailed usage instructions.

An initial version for the nightly release of Mojo 24.5 is now available for testing:

magic shell -e nightly

mojo train_gpt2_nightly.mojo

Please note that nightly releases are often subject to breaking changes, so you may encounter issues when running train_gpt2_nightly.mojo.

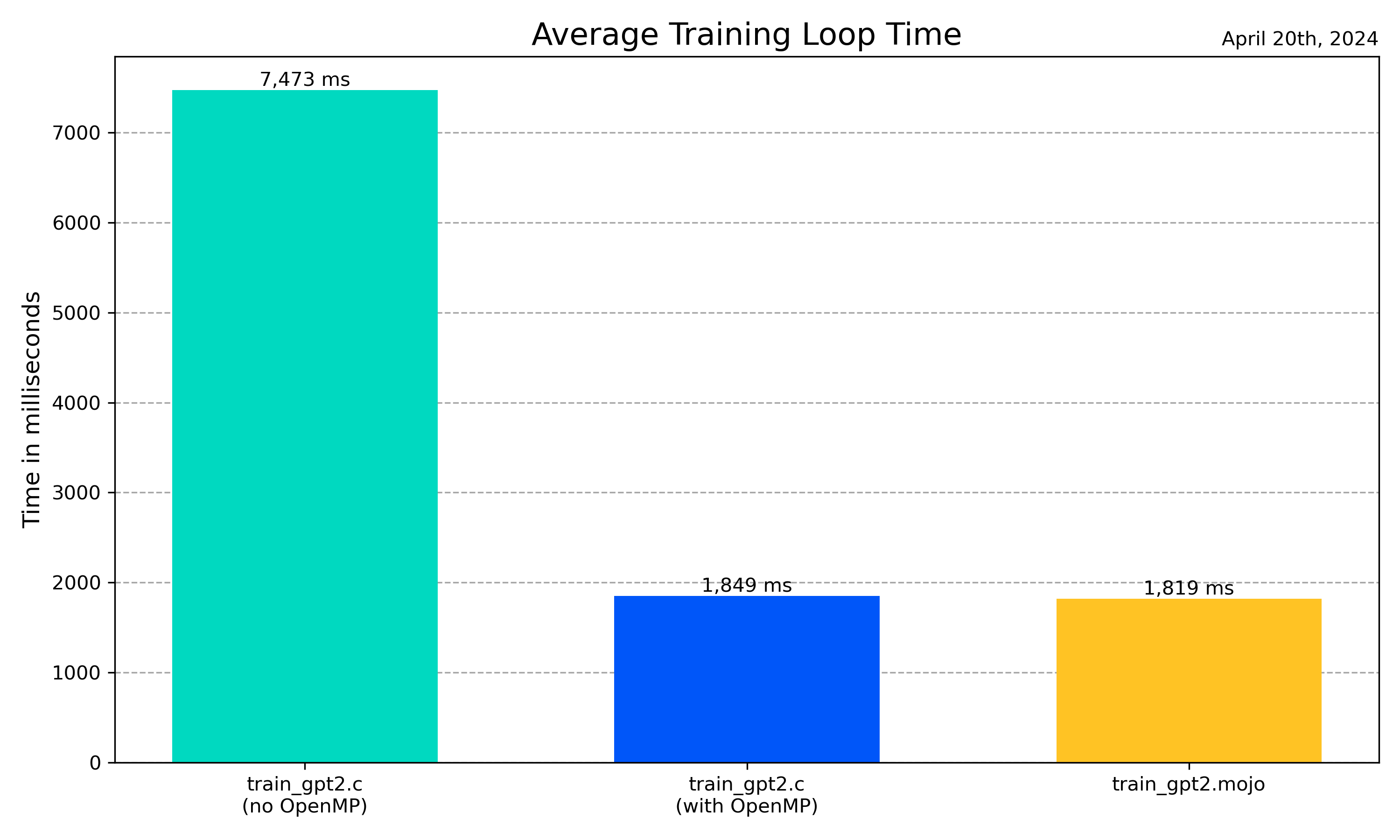

Basic benchmark results (M2 MacBook Pro):

- Below are the average training loop times observed across the various implementations. Please note that these results are intended to provide a general comparison rather than precise, repeatable metrics.
- We are running the OpenMP-enabled train_gpt2.c with 64 threads (OMP_NUM_THREADS=64 ./train_gpt2).

| Implementation | Average training loop time |
|---|---|
| train_gpt2.mojo | 1819 ms |
| train_gpt2.c (with OpenMP) | 1849 ms |
| train_gpt2.c (no OpenMP) | 7473 ms |
| train_gpt2_basic.mojo | 54509 ms |
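To put the table in perspective, the relative times can be computed directly from the averages above. A minimal sketch; the helper name relative_times is ours, for illustration only:

```python
# Average training loop times (ms) from the benchmark table (M2 MacBook Pro).
TIMES_MS = {
    "train_gpt2.mojo": 1819,
    "train_gpt2.c (with OpenMP)": 1849,
    "train_gpt2.c (no OpenMP)": 7473,
    "train_gpt2_basic.mojo": 54509,
}


def relative_times(times_ms, baseline):
    """Divide every time by the baseline's time; 4.0 means 4x slower than the baseline."""
    base = times_ms[baseline]
    return {name: ms / base for name, ms in times_ms.items()}


if __name__ == "__main__":
    for name, ratio in relative_times(TIMES_MS, "train_gpt2.mojo").items():
        print(f"{name:<28} {TIMES_MS[name]:>6} ms  {ratio:5.2f}x")
```

Relative to train_gpt2.mojo, the OpenMP build of train_gpt2.c takes about 1.02x as long per loop, the single-threaded C build about 4.11x, and train_gpt2_basic.mojo about 29.97x.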

We ported test_gpt2.c from the original repository to Mojo to validate our port's functionality. For instructions on running this test and interpreting its results, please see our guide here.
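The test validates the port by comparing its outputs against reference values. A minimal sketch of such a comparison in Python, assuming a fixed absolute tolerance. The name check_tensor echoes the check in llm.c's test_gpt2.c, but this signature, the 1e-2 tolerance, and the example values are illustrative assumptions, not the project's test code:

```python
def check_tensor(actual, expected, label, tol=1e-2):
    """Return True if every element of `actual` is within `tol` of `expected`.

    Hypothetical helper: an absolute-tolerance comparison against reference
    values; the default tolerance is an illustrative choice.
    """
    if len(actual) != len(expected):
        raise ValueError(f"{label}: length mismatch {len(actual)} vs {len(expected)}")
    # Largest element-wise deviation from the reference values.
    max_diff = max((abs(a - e) for a, e in zip(actual, expected)), default=0.0)
    ok = max_diff <= tol
    print(f"{label}: max abs diff {max_diff:.3g} -> {'OK' if ok else 'NOT OK'}")
    return ok
```

A tolerance rather than exact equality is used because floating-point results can differ slightly between implementations that sum in a different order, for example after vectorizing or parallelizing a loop.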

At this stage, there are no plans for further development of this app beyond keeping up with the latest Mojo versions. It primarily serves as a proof of concept, showing that Mojo can implement C-like applications with comparable speed and low-level control. That said, I’m always open to new ideas or collaboration opportunities, so feel free to reach out.

- 2024.09.27
  - Experimental Mojo 24.5 nightly version
- 2024.09.24
  - Switch to the Magic package management tool by Modular
- 2024.06.07
  - Update to Mojo 24.4
- 2024.05.04
  - Update to Mojo 24.3
  - Sync with upstream llm.c changes
- 2024.04.20
  - Further optimization (utilizing unroll_factor of vectorize)
- 2024.04.19
  - test_gpt2.mojo added
- 2024.04.18
  - Upgraded project status to Beta
  - Further optimizations of train_gpt2.mojo
- 2024.04.16
  - Vectorize parameter update
- 2024.04.15
  - Tokenizer added - train_gpt2.c update
- 2024.04.14
  - Bug fix in attention_backward
- 2024.04.13
  - Initial repository setup and first commit

License: MIT