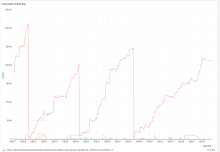

Wikimedia Commons currently has a large backlog of (I believe) refreshLinks jobs, as a result of some edits (and now-processed edit requests) to highly-used CC license templates: see edit, edit, edit, edit, edit and request, request, request, request. Together, these should result in a large percentage of Commons’ files being re-rendered, each having one templatelinks row removed from the database (CC T343131).

Currently, the number of links to Template:SDC statement has value is going down only rather slowly. (This is the number as counted by search, which lags somewhat behind the “real” number because it depends on a further job, but it should be a decent approximation.) @Nikki estimated that the jobs would take some 10 years to complete at the current rate. Can we increase the rate at which these jobs are run? Discussion in #wikimedia-tech suggests that this should be possible in changeprop-jobqueue.
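For context, a completion estimate of this kind can be reproduced by linear extrapolation from two samples of the search count. This is a minimal sketch that assumes the count drops at a constant rate. The function name `estimate_completion` and the sample numbers are hypothetical and are not real measurements from Commons.

```python
from datetime import datetime, timedelta
from typing import Optional


def estimate_completion(
    t0: datetime, count0: int, t1: datetime, count1: int
) -> Optional[timedelta]:
    """Extrapolate how long until the link count reaches zero.

    Hypothetical model: assumes the count keeps falling at the same
    constant rate observed between the two samples. Returns None if the
    count is not decreasing (no finite estimate).
    """
    elapsed = (t1 - t0).total_seconds()
    drop = count0 - count1
    if elapsed <= 0 or drop <= 0:
        return None
    # remaining_time = remaining_count / rate, with rate = drop / elapsed
    return timedelta(seconds=count1 * elapsed / drop)


if __name__ == "__main__":
    # Hypothetical samples taken one day apart (not real data).
    remaining = estimate_completion(
        datetime(2023, 8, 1), 80_000_000, datetime(2023, 8, 2), 79_980_000
    )
    if remaining is not None:
        print(f"{remaining.days} days (~{remaining.days / 365.25:.1f} years)")
```

With these made-up numbers (20,000 links removed per day), the remaining 79,980,000 links would take 3999 days, i.e. about 11 years, which shows how a modest per-day rate against a very large backlog yields multi-year estimates.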