Preprint

Article

The Methods of Determining Temporal Direction Based on Asymmetric Information of Optical Disk for Optimal Fovea Detection

A peer-reviewed article of this preprint also exists.

This version is not peer-reviewed

Submitted:

25 July 2023

Posted:

27 July 2023

Abstract

Accurate localization of the fovea in fundus images is essential for diagnosing retinal diseases. Existing methods often require extensive data and complex processes to achieve high accuracy, posing challenges for practical implementation. In this paper, we propose an effective and efficient approach for fovea detection using simple image processing operations and a geometric approach based on the optical disc's position. A key contribution of this study is the successful determination of the temporal direction by leveraging readable asymmetries related to the optical disc and its surroundings. We discuss three methods based on asymmetry conditions, including blood vessel distribution, cup disc inclination, and optic disc location ratio, for detecting the temporal direction. This enables precise determination of the optimal foveal region of interest (ROI). Through this optimized fovea region, fovea detection is achieved using straightforward morphological and image processing operations. Extensive testing on popular datasets (DRIVE, DiaretDB1, and Messidor) demonstrates outstanding accuracy of 99.04% and a rapid execution time of 0.251 seconds per image. The utilization of asymmetrical conditions for temporal direction detection provides a significant advantage, offering high accuracy and efficiency while competing with existing methods.

Keywords:

Subject: Computer Science and Mathematics - Computer Science

1. Introduction

Diabetic macular edema (DME) is a manifestation of diabetic retinopathy, a condition that can lead to vision loss and blindness [1,2]. Consequently, regular eye screenings, which can be conveniently conducted with computer assistance, are strongly recommended to reduce the associated risks [3]. These screenings play a vital role in monitoring disease progression and identifying any concerning lesions. Of particular importance is the accurate detection of essential anatomical structures such as the optic disk (OD), blood vessels, and fovea. The fovea, situated at the center of the macula, serves as a reference point for assessing the severity of DME, particularly when hard exudates are present in the retina [1]. Measuring the distance between the hard exudates and the fovea is crucial for evaluating the severity of DME and necessitates precise detection methods [4]. Additionally, the computational efficiency of the computer-assisted diagnosis techniques significantly affects the speed of the overall process [5].

Detecting the fovea in a retinal image has proven to be a challenging task due to its unique characteristics. The macula, which encompasses the fovea, appears as a darker region in the retinal image. However, precisely identifying the fovea within this area is complicated because it lacks clear boundaries, making it challenging to distinguish it from the surrounding background. Furthermore, certain retinal images exhibit uneven illumination, further complicating the detection of the fovea.

Several approaches have been employed to detect the fovea in retinal images. Deep learning has recently been applied as a cutting-edge method for this task, and Convolutional Neural Network (CNN)-based approaches have achieved good results in object detection, including fovea detection [6,8]. Al-Bander et al. [6] used a multistage deep learning approach to detect the optic disk and fovea in retinal images, while Sedai et al. [7] proposed an image segmentation framework that operates in two stages. In addition, Hasan [8] utilized DR-Net, an end-to-end encoder-decoder network, for fovea detection. Similar to traditional methods, several deep learning methods have also used retinal structures, such as the blood vessels and optic disc (OD), as boundaries to ascertain the location of the fovea. For instance, Song [9] customized the Bilateral-Vision-Transformer to integrate blood vessel information for improved fovea detection.

It is important to note that the deep learning approach requires a large amount of computation, especially during training, as well as a large quantity of data to produce a good detection model [10,11]. The existing methods are typically employed on datasets comprising a substantial number of images. In addition, the black-box nature of the computation makes these models difficult to develop [12,13].

On the other hand, the conventional approach is an alternative to identify the fovea in a limited amount of data. This approach relies on two conditions: the intensity characteristics of the macula and its geometric location. Syed [14] employed the mean intensity to detect the fovea area. Regarding fovea detection, the common techniques involve template matching and Gaussian functions, utilizing the intensity attributes specific to the foveal area, as exemplified in [15]. Additionally, in [16], the incorporation of the average histogram intensity as a template matching feature has been explored for fovea detection. Furthermore, [17] implemented feature extraction techniques to locate the center of the fovea. In order to enhance effectiveness, other authors employ geometric principles that are seamlessly integrated with existing algorithms.

In terms of the geometric approach for fovea detection, various studies have focused on the fovea's location relative to other anatomical structures. Tobin [18] and Niemeijer [19] utilized the main blood vessels in the retina as a reference point for detecting the fovea area. Similarly, Medhi [20] achieved comparable results by using the main blood vessels to identify the fovea region of interest (ROI). Other investigations have utilized the optic disc (OD) to locate the fovea area [16,17]. Chalakkal [16] employed connected component analysis to define the fovea in relation to the OD, while still considering the role of blood vessels, particularly in determining the foveal ROI. Additionally, aside from the OD's significance as a reference point, other researchers have emphasized that the localization of the fovea is influenced by the direction of search required. For instance, Zheng [17] and Romero-oraá [21] suggested that the fovea is likely positioned in the temporal direction of the OD. The results obtained in this approach can compete with the results from the deep learning approach. However, achieving these outcomes involves a complex step that adversely affects execution time. Therefore, the quest for a more streamlined solution poses a challenge.

In the retinal fundus image, the optic disc and blood vessels exhibit varying pixel densities, resulting in an observable asymmetry. This asymmetry is evident by examining the position of these anatomical structures relative to others or by comparing them to the overall retinal image. Exploiting this characteristic helps identify the temporal direction, thereby pinpointing the precise location of the fovea. This study presents a geometric approach for fovea localization, leveraging the positioning of the OD and temporal direction. The proposed method aims to streamline the determination of the temporal direction by utilizing the asymmetrical conditions in the OD-related image. The study's contributions are as follows:

- Introducing a feature extraction technique that relies on asymmetries associated with the OD and its surrounding area.

- Presenting a method for determining the temporal direction, which proves highly beneficial for foveal ROI detection.

- Enhancing the effectiveness of fovea detection through the utilization of the temporal direction for foveal ROI determination.

The remainder of this paper is organized as follows. Section 2 describes the materials and methods, including the datasets, the tools, and a detailed description of the proposed method. Section 3 presents the results, Section 4 discusses them, and Section 5 concludes the paper.

2. Materials and Methods

2.1. Materials

2.1.1. Dataset

This study utilized retinal image data from three publicly available datasets: Digital Retinal Images for Vessel Extraction (DRIVE), DiaretDB1, and Messidor. The DRIVE dataset comprises 40 retinal fundus images with a resolution of 768×584 pixels and three color channels (red, green, and blue). These images were captured with a field of view (FOV) of 45° [22]. DiaretDB1 consists of 89 color retinal images with a FOV of 50° and dimensions of 1500×1152 pixels [23]. The Messidor dataset contains 1200 retinal images, each with a FOV of 45°, at three resolutions: 1440×960, 2240×1488, and 2304×1536 pixels [24].

2.1.2. Environment

A computer with an Intel® Core™ i5-10400 CPU @ 2.90 GHz and 16 GB of RAM, running MATLAB 2018b, was used to conduct this study.

2.2. Methods

The macula, characterized by a circular area of low intensity on the retinal image [25], houses the fovea at its center. The fovea is positioned 2.5 diameters away from the optic disk in the temporal direction [26] and slightly below the optic disk [27]. This study aimed to precisely determine the location of the fovea. To achieve this, a geometry-based approach utilizing the optic disk as a reference point was employed. The methodology consists of several steps as shown in Figure 1. Firstly, a pre-processing stage was conducted to normalize the retinal image. Subsequently, the OD localization process was executed. The temporal direction was established by leveraging the asymmetry associated with the OD in retinal images. Finally, the fovea's location was determined within the foveal ROI. The following section provides a detailed explanation of the procedure undertaken in this study.

2.2.1. Pre-processing

The retinal images captured by the fundus camera vary in size and lighting intensity. A robust recognition method must account for these variations, and one way to do so is to ensure that the images share similar characteristics. We therefore pre-process each dataset to ensure uniformity by standardizing the image size, normalizing luminance levels, and detecting the field of view (FOV) of each retinal image.

- Resizing: The retinal images in different datasets vary in size and proportions, depending on the conditions during image capture. In this study, we aim to establish consistency by resizing the images. To maintain the original shape of objects within each retinal image, we preserve the proportions of the image during resizing. The initial result is achieved by setting the height of each image to 564 pixels, while adjusting the width according to the original aspect ratio. The resized image is denoted as in our study.

- Illumination normalization: A thresholding approach was employed to detect the OD and fovea. However, uneven illumination, as illustrated in Figure 2(a), negatively affected the detection accuracy. To address this issue, a normalization step was performed prior to the detection process. First, the resized images were subjected to contrast enhancement using Contrast Limited Adaptive Histogram Equalization (CLAHE), producing an enhanced image denoted as . This enhancement used the green layer, which offers the best contrast (Figure 2(b)). Intensity normalization was then conducted following the approach described in a previous study [27]. The resulting normalized image, denoted as , is illustrated in Figure 2(c).

- FOV detection: The FOV represents the visible area of the retina captured in an image. The size of the FOV plays a crucial role in estimating the dimensions of retinal objects, particularly the OD. This is particularly valuable in addressing variations in the proportion of retinal area displayed across different datasets. By defining the area, the search location for objects on the retina can be constrained. Additionally, the FOV size is utilized to estimate the location of the fovea through a geometric approach. Segmentation of the FOV area was accomplished by thresholding the grayscale image at 0.2 times its Otsu threshold. The segmented FOV is illustrated in Figure 2(d).
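The resizing and FOV segmentation steps above can be sketched in plain Python as follows. This is a minimal illustration under stated assumptions, not the authors' MATLAB code: the function names `resized_shape`, `otsu_threshold`, and `fov_mask` are hypothetical, the grayscale image is assumed to be a 2-D list of 8-bit integers, and pixels strictly brighter than 0.2 times the Otsu threshold are assumed to belong to the FOV.

```python
def resized_shape(height, width, target_height=564):
    """Return (new_height, new_width) that keeps the original aspect ratio."""
    scale = target_height / height
    return target_height, round(width * scale)


def otsu_threshold(gray):
    """Otsu threshold of a 2-D list of integer intensities in 0..255."""
    hist = [0] * 256
    for row in gray:
        for value in row:
            hist[value] += 1
    total = sum(hist)
    weighted_total = sum(level * count for level, count in enumerate(hist))

    best_level, best_variance = 0, -1.0
    count_low, sum_low = 0, 0
    for level in range(256):
        count_low += hist[level]
        sum_low += level * hist[level]
        count_high = total - count_low
        if count_low == 0:
            continue  # no pixels in the dark class yet
        if count_high == 0:
            break  # no pixels left for the bright class
        mean_low = sum_low / count_low
        mean_high = (weighted_total - sum_low) / count_high
        # Between-class variance (up to a constant factor).
        variance = count_low * count_high * (mean_low - mean_high) ** 2
        if variance > best_variance:
            best_level, best_variance = level, variance
    return best_level


def fov_mask(gray, factor=0.2):
    """Assumption: pixels brighter than factor * Otsu threshold are in the FOV."""
    threshold = factor * otsu_threshold(gray)
    return [[value > threshold for value in row] for row in gray]
```

Scaling the Otsu threshold down keeps dim retinal regions inside the mask while still excluding the near-black camera border.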

2.2.2. Optic Disk Localization

The OD is a distinctive anatomical structure within retinal images, known for its high intensity compared to other features. Due to its prominent characteristics, OD localization is relatively straightforward. It is worth noting that the OD location is often associated with the position of the fovea [27,28]. In this study, the OD serves as a reference point for determining the center of the fovea ROI. Additionally, certain features, such as blood vessels and bright areas on the cup disk, are leveraged to determine the temporal direction. To detect the OD, a thresholding method based on [29] was applied to the pre-processed images . Equation (1) was employed to obtain the OD candidate areas, which are shown in Figure 3(b).

where is the threshold value used, which is 0.85 from the maximum value of .
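As an illustration of this thresholding step, the following sketch keeps only pixels brighter than 0.85 times the image maximum. The function name `od_candidates` is hypothetical, and the strict inequality is an assumption, since the original form of Equation (1) is not reproduced here.

```python
def od_candidates(image, fraction=0.85):
    """Binary mask of pixels brighter than fraction * max(image).

    `image` is a 2-D list of intensities. The strict '>' comparison is an
    assumption made for this sketch.
    """
    peak = max(max(row) for row in image)
    threshold = fraction * peak
    return [[1 if value > threshold else 0 for value in row] for row in image]
```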

Occasionally, bright regions with high luminance, similar in appearance to the area around the OD, are found at the edge of the FOV. The luminance normalization conducted during pre-processing does not adequately correct these areas, which can result in the misidentification of the optic disc candidate region. Therefore, this study introduced FOV edge detection as an additional step to mitigate such effects. To cover a wider band, the edges were subjected to morphological dilation using a disk-shaped structuring element with a radius of 50 pixels. The resulting edge area is denoted as (Figure 3(c)). Equation (2) was employed to obtain the refined optic disc candidate area [29]:

The center point of the blob within (Figure 3(e)) is estimated to correspond to the cup disk area of the optic disc. Based on this center point, the subsequent step involves cropping the OD ROI to one-quarter the size of the image. The resulting OD ROI is illustrated in Figure 3(f).

Furthermore, optic disc segmentation is performed through several additional steps. Initially, contrast enhancement is applied using CLAHE with a clip limit of 0.03 and multiple tiles [2]. This process specifically focuses on the red channel, which is least affected by blood vessels, as indicated by Zheng [17]. Subsequently, a morphological opening operation is employed to remove blood vessels in the region, utilizing a disk-shaped structuring element with a radius of 20 pixels.

The OD obtained through Otsu thresholding is then refined using morphological closing and opening operations with disk-shaped structuring elements of sizes 10 and 15, respectively. The largest blob is selected to determine the OD, and the result is obtained by cropping the area according to the bounding box, as depicted in Figure 3(g). Notably, the center of this area serves as a reference for determining the foveal ROI. It is important to mention that the diameter of the optic disc () is not calculated directly from this obtained area. Instead, is computed as , where w represents the width of the FOV.

2.2.3. Temporal Direction Determination

In this paper, our objective is to utilize the OD appearance in retinal images to detect the fovea and determine the temporal direction. The OD, being present in the retinal image, provides crucial information for locating the fovea, which is typically positioned in the temporal direction relative to the OD. Moreover, the OD itself can act as an indicator of the temporal direction.

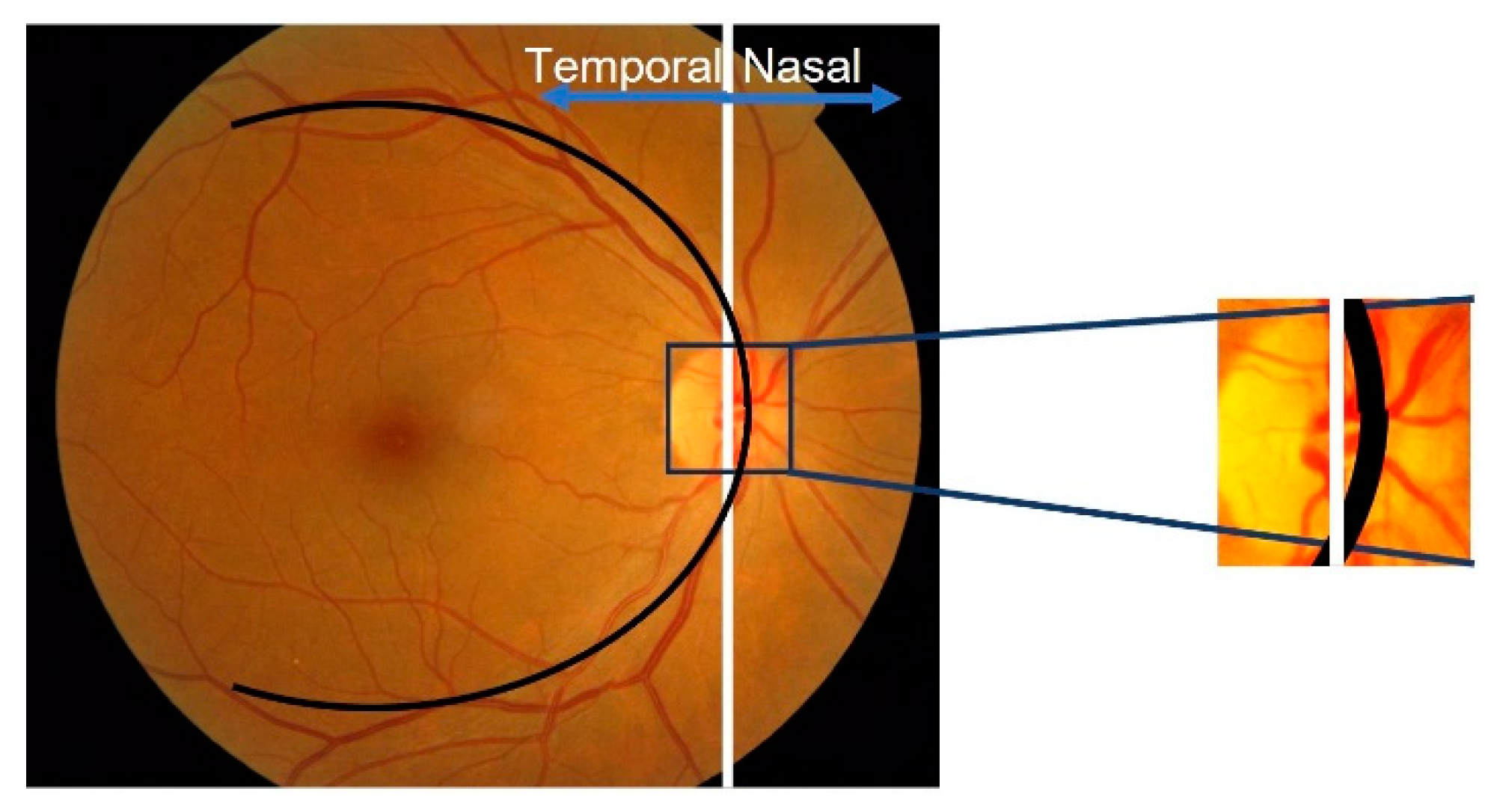

To achieve this, we concentrate on exploiting the observed asymmetry in the OD's appearance (Figure 4), including the brightest area of cup disk and major blood vessels, as distinctive features for identifying the temporal direction in interlaced retinal images. We propose three distinct methods that make use of these existing asymmetrical conditions for temporal direction determination:

- The convergence pattern of blood vessels in the OD: We examine the convergence pattern of blood vessels within the OD. The direction of convergence can provide valuable clues regarding the temporal direction.

- The location of the brightest area of cup disk in the OD: We analyze the OD to identify the cup disk with the highest brightness. By assessing its position within the OD, we can infer the temporal direction.

- The location of the OD in the retinal image: We investigate the position of the OD within the retinal image. Its relative location can offer insights into the temporal direction.

These methods aim to leverage the inherent asymmetry of the OD and its associated features to improve the accuracy and efficiency of determining the temporal direction in retinal images. By employing these approaches, we anticipate significant advancements in retinal imaging analysis.

Convergence pattern of blood vessels in the OD

The blood vessels in the retina exhibit a parabolic shape and converge on the OD, resulting in an asymmetry where the blood vessels cluster predominantly on one side of the OD, as illustrated in Figure 5. This inherent asymmetry was leveraged in our proposed method to determine the temporal direction. The comparison of the number of blood vessel pixels on both sides was performed, taking into account their tendency to cluster on one side of the optic disc. To facilitate this comparison, we focused on the green layer of the retinal image, as it offers better contrast compared to the other two layers.

Once the optic disc was detected, its area was cropped to identify the side corresponding to the temporal direction. As a first step, contrast was enhanced adaptively using the CLAHE method, which improved the visibility of the blood vessels against the background.

Next, the blood vessels within the OD are extracted using a bottom-hat morphological operation and adaptive binarization with Otsu's method. This involves utilizing a 'disk'-shaped structuring element of size 5 pixels. The result is an image called IOV. However, it is observed that the blood vessels in the IOV still retain vertical and horizontal orientations, which can introduce false information. To address this, the orientation direction of the blood vessels is selectively considered.

Comparisons between the left and right sides of the OD were performed using vertically oriented vessel pixels. To facilitate the comparison process, it is essential to optimize the vertically oriented blood vessels and eliminate the horizontally oriented ones prior to the analysis of the left and right sides of the OD. This is accomplished through the sequential application of morphological operations, specifically opening and closing operations. By performing these operations consecutively, the vertically oriented blood vessels are enhanced, while the horizontally oriented ones are attenuated. The resulting image, denoted as , is obtained using rectangular structuring elements with dimensions of 6×3 and 10×3.
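To illustrate why a tall, narrow structuring element favors vertically oriented vessels, the sketch below implements a binary morphological opening with a rectangular element in plain Python. It is an illustration only: the function name `binary_opening_rect` is hypothetical, the 6×3 element is assumed to mean 6 rows by 3 columns, and the closing step that the method also applies is omitted.

```python
def binary_opening_rect(mask, se_rows, se_cols):
    """Binary opening of `mask` (2-D list of 0/1) with a se_rows x se_cols rectangle.

    The opening is the union of every placement of the rectangle that fits
    entirely inside the foreground, so structures thinner than the rectangle
    in either direction are removed.
    """
    rows, cols = len(mask), len(mask[0])
    opened = [[0] * cols for _ in range(rows)]
    for top in range(rows - se_rows + 1):
        for left in range(cols - se_cols + 1):
            window_is_full = all(
                mask[top + i][left + j]
                for i in range(se_rows)
                for j in range(se_cols)
            )
            if window_is_full:
                for i in range(se_rows):
                    for j in range(se_cols):
                        opened[top + i][left + j] = 1
    return opened
```

With a 6×3 element, a vessel segment must span at least 6 rows to survive, so short horizontal segments are suppressed while long vertical ones are preserved.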

Suppose is the number of white pixels on the left side of the image and is the number of white pixels on the right side . The following rules were adopted when determining the temporal direction:

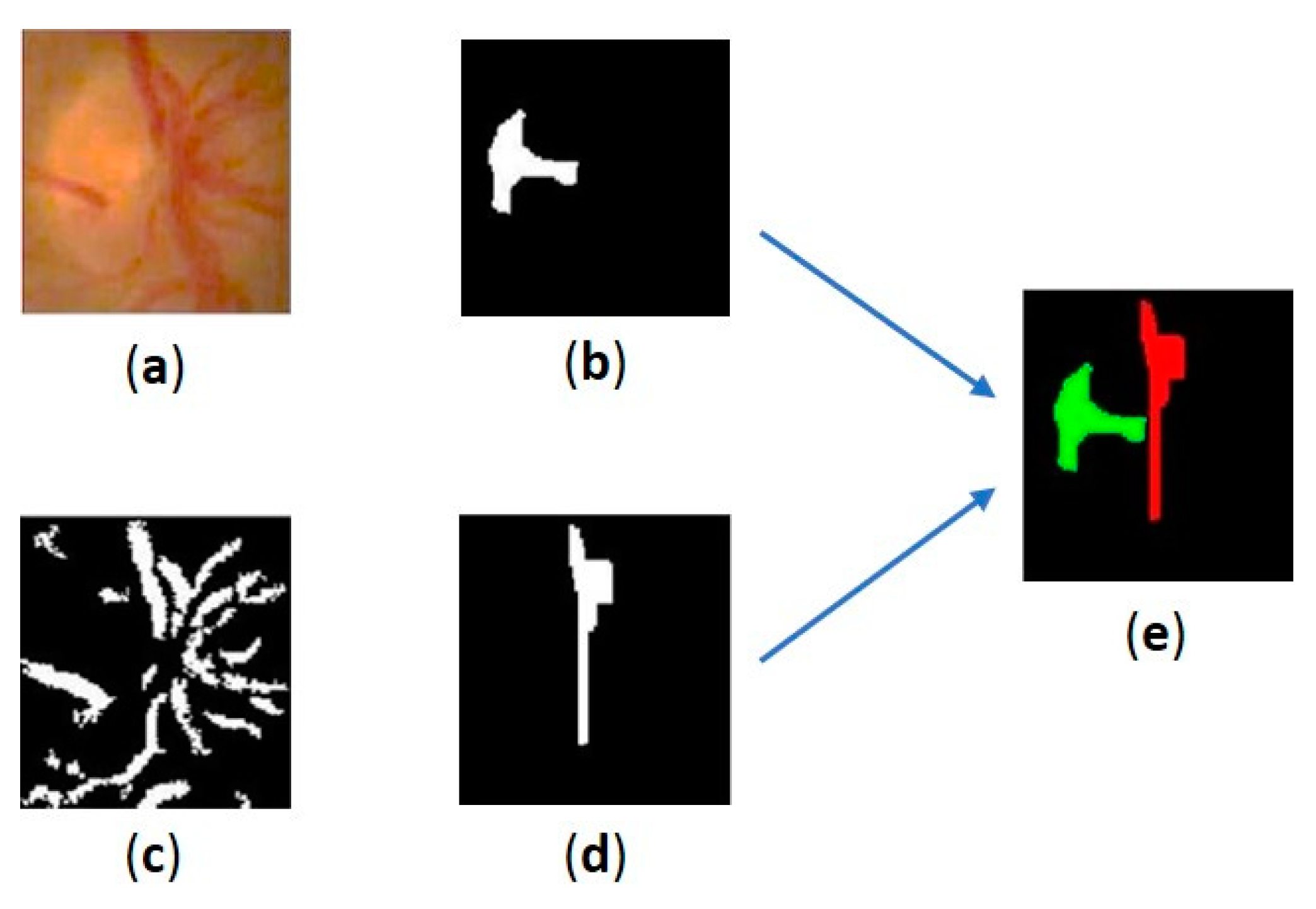
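The left/right comparison can be sketched as below. The decision rule itself is given in the figure above and is not reproduced in the text, so the mapping used here is an assumption: the temporal side is taken to be the half with fewer vessel pixels, and the `temporal_has_fewer` flag (hypothetical, like the function name) allows the opposite convention.

```python
def temporal_direction_from_vessels(vessel_mask, temporal_has_fewer=True):
    """Compare white-pixel counts in the left and right halves of the OD crop.

    `vessel_mask` is a 2-D list of 0/1 values. For an odd width the middle
    column is ignored. Assumption: the temporal side has fewer vessel pixels;
    ties are resolved as "RIGHT" under that convention.
    """
    width = len(vessel_mask[0])
    half = width // 2
    left = sum(sum(row[:half]) for row in vessel_mask)
    right = sum(sum(row[width - half:]) for row in vessel_mask)
    sparse_side = "LEFT" if left < right else "RIGHT"
    if temporal_has_fewer:
        return sparse_side
    return "RIGHT" if sparse_side == "LEFT" else "LEFT"
```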

Location of the brightest area of cup disk in the OD

The OD serves as the origin for blood vessels in the retina and exhibits a cup disk structure. The brightest area within the OD corresponds to the cup disk, partially obscured by blood vessels on one side. The remaining cup disk area is situated on the temporal side of the OD. By comparing the position of the cup disk area with that of the vertically oriented blood vessels, we can determine the temporal direction of the retinal image. This is achieved by analyzing the center coordinates of the cup disk area and the vertical vessel center coordinates, which serve as defining features for the temporal direction.

To detect the temporal direction, the OD area must first be segmented as shown in Figure 6(a). Initially, we identify the visible cup disk area and a curve representing the vertical vessels within the OD. To simplify the process, we focus on the brightest part of the cup disk, avoiding the need for precise segmentation. The segmentation is performed on the brightest portion of the cup disk by applying a binary operation with a threshold of 80% of the maximum pixel value within the OD area. This binary operation is conducted on the green layer, which offers optimal contrast. Subsequently, a closing operation is applied to refine the bright area. A circular structuring element (SE) with a diameter of 10 pixels is used for this purpose. The segmentation results are depicted in Figure 6(b). The midpoint coordinates of the bright cup disk area (, ) are then used as reference values for determining the temporal direction.
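A minimal sketch of the bright-area segmentation and midpoint computation is given below. The function name `bright_region_center` is hypothetical, the green-channel OD crop is assumed to be a 2-D list of non-negative intensities, and the closing operation described above is omitted for brevity.

```python
def bright_region_center(green, fraction=0.8):
    """Centroid (x, y) of pixels at or above fraction * max(green).

    Assumption: the midpoint of the bright cup disk area is taken as the
    mean coordinate of the thresholded pixels.
    """
    peak = max(max(row) for row in green)
    threshold = fraction * peak
    xs, ys = [], []
    for y, row in enumerate(green):
        for x, value in enumerate(row):
            if value >= threshold:
                xs.append(x)
                ys.append(y)
    return sum(xs) / len(xs), sum(ys) / len(ys)
```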

The curve representing the vertical vessels in the OD is extracted using a combination of morphological operations. Initially, the contrast of the OD image is enhanced using CLAHE, and the blood vessels are then emphasized using a bottom-hat operation with a circular SE with a radius of 5 pixels. Figure 6(c) shows the extracted blood vessels in the OD. The lines representing the vertical vessels are obtained through two successive combinations of opening and closing operations with vertically oriented rectangular SEs. The first combination employs an SE of size 6×3 for opening and 10×3 for closing. The second combination employs SEs of size 13×1 and 50×15. The resulting curve is illustrated in Figure 6(d). Finally, the coordinates of the blood vessel center (, ) are extracted from the last obtained blob. An illustration of the relative location of the cup disk's brightest area to the curve representing the vertical vessels in the OD is shown in Figure 6(e).

Once the coordinates of the cup disk center and the vertical blood vessel center within the OD are determined, the temporal location on the retinal image can be established. In general, the temporal area on the retinal image is determined based on the location of the cup disk with respect to the vertical vessels, as shown in Figure 7. To simplify the search process, this study compares the x-axis values of each coordinate point. The temporal area is defined according to the following rules:

If {the cup disk area is on the left of the vertical vessels in the OD}

temporal direction = LEFT

Else {the cup disk area is on the right of the vertical vessels in the OD}

temporal direction = RIGHT
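Expressed as code, the rule reduces to a comparison of two abscissas. The sketch below is illustrative; the function and parameter names are hypothetical, and a tie is assigned to "RIGHT" because it falls into the Else branch.

```python
def temporal_direction_from_cup(cup_x, vessel_x):
    """Temporal direction from the x-coordinates of the bright cup disk
    center (cup_x) and the vertical vessel center (vessel_x)."""
    if cup_x < vessel_x:
        return "LEFT"   # cup disk area lies to the left of the vertical vessels
    return "RIGHT"      # cup disk area lies to the right (or at the same x)
```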

Location of the OD in the retinal image

In diabetic retinopathy examination, it is essential for the retinal image used in screening to have a visible macular condition at the center. This recommendation is supported by The Health Technology Board for Scotland [30,31]. In this context, the OD is situated at the edges of the retinal area, both on the left and right sides. This indicates that the temporal direction can be determined by comparing its location with the center coordinates of the retinal image.

It has been observed that when the OD is on the right side of the image, the abscissa of its center is greater than that of the retinal image center, and the temporal direction points to the left. Conversely, when the OD is on the left side, the abscissa of its center is smaller than that of the image center, and the temporal direction points to the right, as depicted in Figure 8.

The temporal direction is determined with the following rule:

where w denotes the retinal image width, and is the abscissa value of the OD center obtained during OD detection.

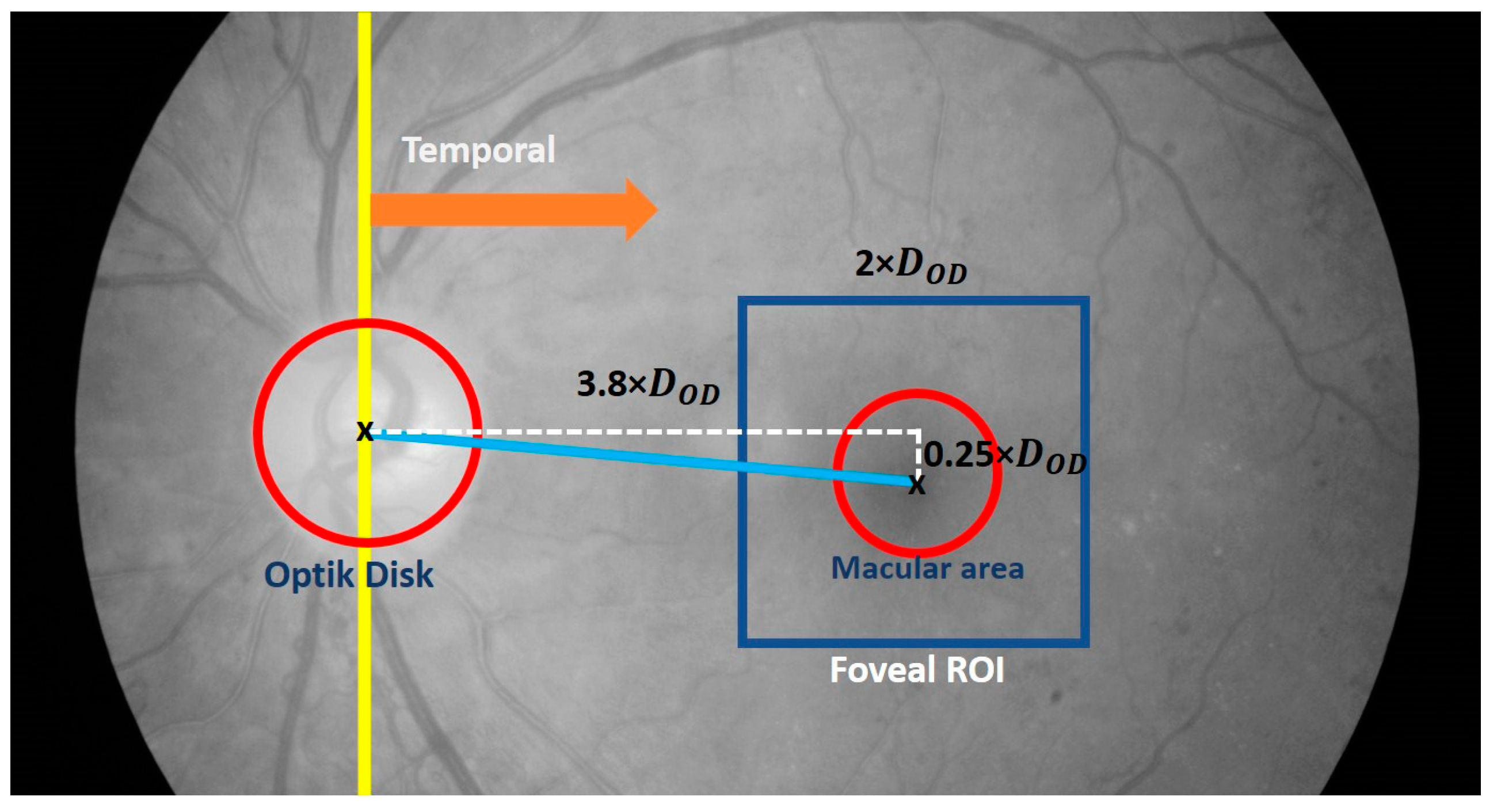
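The rule described in this subsection can be sketched as follows, assuming the image center lies at half the image width. The function name is hypothetical, and the handling of an OD exactly at the center (assigned to "RIGHT" here) is an assumption.

```python
def temporal_direction_from_od_position(od_x, image_width):
    """Temporal direction from the OD center abscissa and the image width."""
    if od_x > image_width / 2:
        return "LEFT"   # OD on the right half, so the fovea lies to its left
    return "RIGHT"      # OD on the left half (or exactly centered, by assumption)
```

For example, in a 742-pixel-wide image an OD centered at x = 600 gives LEFT, while one centered at x = 150 gives RIGHT.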

2.2.4. The Fovea Detection

According to established medical definitions, the macula, with the fovea at its center, is situated in the temporal area, and the fovea lies approximately 2.5 OD diameters from the OD. This implies that the search for the fovea should be restricted to an area consistent with this definition. In the detection process, a geometric approach is employed, with the OD location serving as a reference. The effectiveness of the proposed method relies on accurately determining the size and location of the ROI corresponding to the fovea.

To initiate the detection process and minimize errors, the determination of the foveal ROI occurs after obtaining the OD location and temporal direction. This allows for the limitation of the detection area and reduces the likelihood of errors. Moreover, the location is obtained by utilizing the OD as a reference based on the gathered information. Figure 9 visually depicts the construction of the foveal ROI, which takes the form of a square with dimensions of 2 times the OD diameter (2×), where represents the OD diameter. This size is chosen to account for potential inaccuracies in the ROI location, aiming to minimize errors. The selection of the multiplier parameter is performed through experimentation with three estimated values: 2.0, 2.25, and 2.5.

Furthermore, the center location of the fovea ROI is determined using Equations (3) and (4).

where represents the coordinates of the foveal ROI center and are the coordinates of the OD center location.
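The construction of the foveal ROI can be sketched as below. Because Equations (3) and (4) are not reproduced here, the geometry is an assumption based on the surrounding text: the ROI center is placed 2.5 OD diameters from the OD center along the temporal direction, and the ROI is a square with a side of 2 OD diameters. The function name and the `vertical_offset` parameter (default 0) are hypothetical.

```python
def foveal_roi(od_center, od_diameter, direction,
               distance_factor=2.5, size_factor=2.0, vertical_offset=0.0):
    """Return ((center_x, center_y), (left, top, right, bottom)) of the ROI.

    Assumptions: horizontal shift of distance_factor * od_diameter toward the
    temporal side; square side of size_factor * od_diameter; no clipping to
    the image bounds.
    """
    od_x, od_y = od_center
    shift = distance_factor * od_diameter
    center_x = od_x - shift if direction == "LEFT" else od_x + shift
    center_y = od_y + vertical_offset
    half_side = size_factor * od_diameter / 2
    box = (center_x - half_side, center_y - half_side,
           center_x + half_side, center_y + half_side)
    return (center_x, center_y), box
```

Exposing the two factors as parameters makes it easy to repeat the kind of experiment mentioned above with the values 2.0, 2.25, and 2.5.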

The subsequent step involves detecting the fovea within the foveal ROI. This process utilizes a combination of thresholding techniques and morphological operations. Initially, the contrast within the foveal ROI (Figure 10(a)) is enhanced using CLAHE with a clip limit of 1. This results in an improved foveal ROI image with enhanced contrast (Figure 10(b)), revealing darker areas and clearer edges. To facilitate the recognition process, the resulting image is negated, generating the complement of the image () as shown in Figure 10(c).

The thresholding process is then applied to obtain a binary foveal image () by utilizing an adaptive threshold value of 77% of the maximum intensity of . The result of the thresholding process is shown in Figure 10(d). It should be noted that I_mb may still contain some degree of noise. To mitigate this, a cleaning and repair procedure is conducted using morphological dilation and erosion operations, as described in Equation (5):

where is the obtained macular image, is the morphological erosion operation with a disc-shaped structuring element of size 15, and is the morphological dilation operation with a disc-shaped structuring element of size 5. The fovea is then extracted from the area containing (Figure 10(e)).
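The thresholding part of this step can be sketched as follows. This is a simplified illustration: CLAHE and the morphological cleaning of Equation (5) are omitted, the ROI is assumed to be a 2-D list of 8-bit intensities, and taking the centroid of the thresholded pixels as the fovea location is an assumption. The function name `detect_fovea` is hypothetical.

```python
def detect_fovea(roi, fraction=0.77, max_level=255):
    """Estimate the fovea (x, y) inside a foveal ROI.

    Steps: complement the image so the dark fovea becomes bright, keep pixels
    at or above fraction * max(complement), and return their centroid.
    """
    complement = [[max_level - value for value in row] for row in roi]
    peak = max(max(row) for row in complement)
    threshold = fraction * peak
    xs, ys = [], []
    for y, row in enumerate(complement):
        for x, value in enumerate(row):
            if value >= threshold:
                xs.append(x)
                ys.append(y)
    return sum(xs) / len(xs), sum(ys) / len(ys)
```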

3. Results

The proposed method was evaluated on three datasets, namely DRIVE, DiaretDB1, and Messidor. Of the DRIVE images, 35 were used, because 5 images in this dataset do not show the foveal area, following [17]. Similarly, 1136 Messidor images were used, in line with [32]. For DiaretDB1, all images were considered in the evaluation. The obtained results were compared with the ground truth under the supervision of an ophthalmologist.

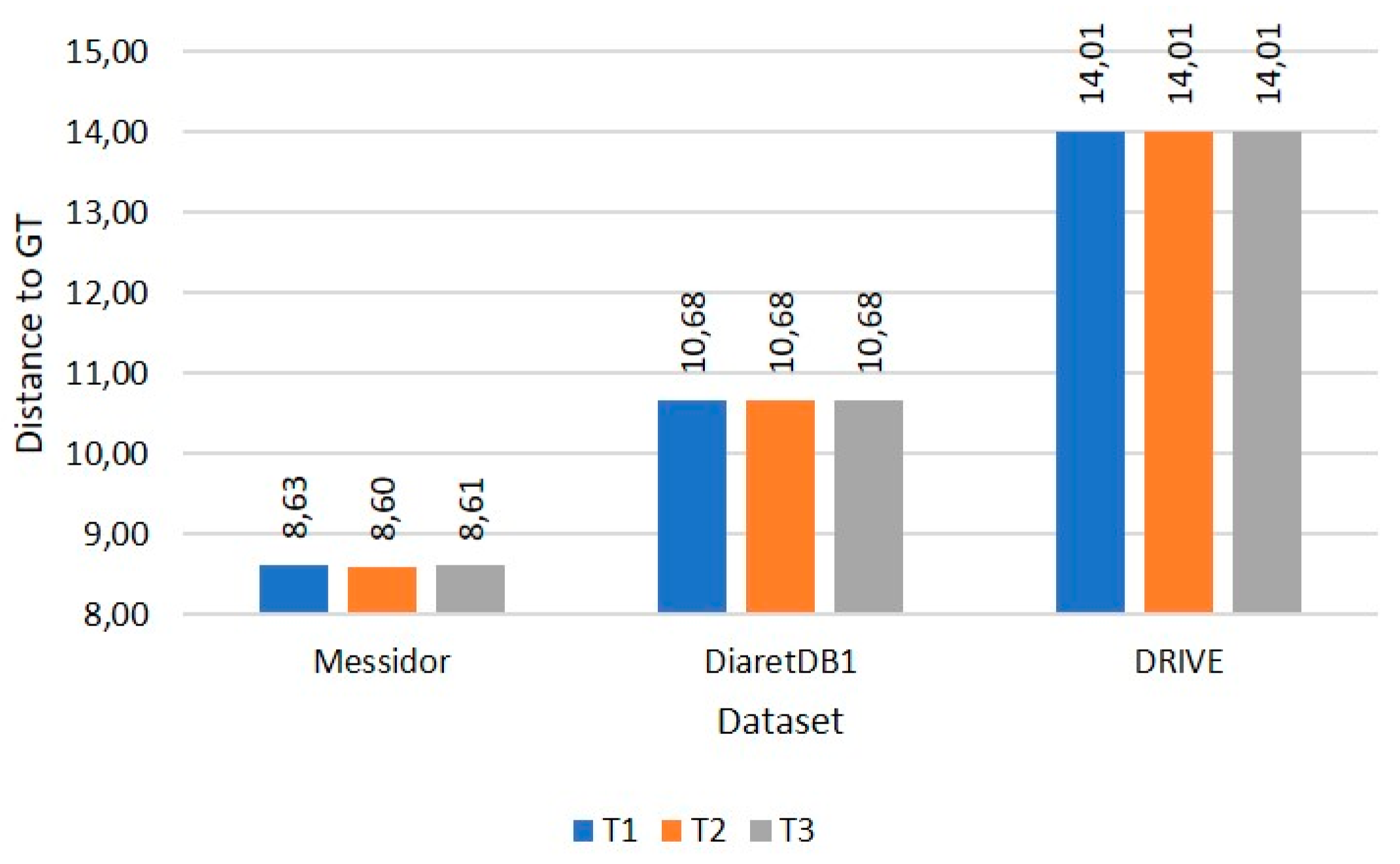

The OD detection test achieved an accuracy of 100% on the DRIVE dataset, 98.88% on DiaretDB1, and 98.59% on Messidor. Table 1 provides an overview of the accuracy rates for determining the temporal direction using three methods: T1, T2, and T3. T1 compares the blood vessel pixels on both sides of the OD, T2 relies on the location of the cup disk area relative to the vertical blood vessels, and T3 uses the position of the OD relative to the center of the image. The temporal direction methods were evaluated only on the images in which the OD had been successfully detected.

During the evaluation of fovea detection, accuracy was quantitatively assessed by measuring the Euclidean distance between the detected fovea and the Ground Truth (GT). This approach facilitated a precise quantitative comparison. Furthermore, Figure 11, Figure 12 and Figure 13 illustrate the performance of the three methods used to determine the temporal direction, while Table 2 provides a concise summary of their performance metrics.
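The distance-based evaluation can be sketched as below. The Euclidean distance follows the text directly, whereas the success criterion is an assumption: a detection is counted as correct when its distance to the ground truth does not exceed a caller-supplied `tolerance` in pixels, a hypothetical parameter whose value is not stated here.

```python
import math


def euclidean_distance(p, q):
    """Euclidean distance between two (x, y) points."""
    return math.hypot(p[0] - q[0], p[1] - q[1])


def detection_accuracy(detected, ground_truth, tolerance):
    """Percentage of detections within `tolerance` pixels of the ground truth.

    Assumption: `detected` and `ground_truth` are equally long lists of
    (x, y) points in the same order.
    """
    hits = sum(
        1
        for d, g in zip(detected, ground_truth)
        if euclidean_distance(d, g) <= tolerance
    )
    return 100.0 * hits / len(ground_truth)
```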

Of the three methods, T3 exhibited the highest performance in determining the temporal direction. The differences became particularly evident on datasets with a larger number of images, such as Messidor, while on the smaller DRIVE and DiaretDB1 datasets T3 performed comparably to the other methods. In terms of accuracy, T3 achieved 98.24%, compared with 96.83% for T1 and 97.54% for T2. T3 was also marginally faster, with a processing time of 0.291 seconds per image, compared with 0.294 and 0.295 seconds for T1 and T2, respectively.

The average accuracy and computational time for the three datasets are 99.04% and 0.251 seconds/image. Table 3 shows the comparison results of the performance with other studies.

4. Discussion

Upon analysis, it was observed that the method using the comparison of the OD location and the image center for determining the temporal direction exhibited the highest performance among the three methods. The performance differences were particularly noticeable when testing on datasets with a large number of images, such as the Messidor dataset. However, in the DRIVE and DiaretDB1 datasets with a smaller number of images, the three methods showed no significant difference in performance. It was found that using the bright area on the cup disc as a feature for determining the temporal direction becomes problematic when the distribution of bright areas is not concentrated on one side as expected. In cases where the bright area is distributed circularly around the blood vessels, the expected asymmetry does not appear, causing the center point of this bright area to sometimes shift to the opposite side and resulting in incorrect temporal direction determination.

Similarly, using blood vessels in OD as a feature is disturbed when the distribution of these blood vessels is evenly spread within the OD. Consequently, when applying morphological operations to the vascular pixels in the OD, there are several images that do not exhibit the expected asymmetry and provide an incorrect temporal direction. Overall, the evaluation results indicate that all three methods of determining the temporal direction perform well.

This suggests that all the methods are capable of providing good temporal direction results for generating an optimal foveal region of interest (ROI). The use of a small rectangular foveal ROI, based on the geometric location of the fovea, enhances the effectiveness of the proposed method. By limiting the detection area to the foveal region only, the ROI excludes other objects, leading to a higher accuracy in fovea detection, averaging 99.04%. These findings demonstrate that the proposed method is stable and accurate, despite relying on simple image processing operations such as binarization and morphology. The use of these straightforward operations has also helped reduce the computational time to 0.251 seconds per image.
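
To make the "simple operations" concrete, the sketch below runs a complement, binarization, dilation plus erosion, and centroid step on a synthetic ROI using NumPy only. It is an illustration under stated assumptions, not the authors' code: the contrast enhancement step is omitted, the midpoint threshold is a stand-in for the paper's binarization rule, and all function names are hypothetical.

```python
import numpy as np


def _neighbourhood(mask: np.ndarray) -> np.ndarray:
    """Stack the nine 3x3-neighbourhood shifts of a boolean mask."""
    padded = np.pad(mask, 1, mode="constant", constant_values=False)
    h, w = mask.shape
    return np.stack([padded[dy:dy + h, dx:dx + w]
                     for dy in range(3) for dx in range(3)])


def dilate(mask: np.ndarray) -> np.ndarray:
    """Binary dilation with a 3x3 structuring element."""
    return _neighbourhood(mask).any(axis=0)


def erode(mask: np.ndarray) -> np.ndarray:
    """Binary erosion with a 3x3 structuring element."""
    return _neighbourhood(mask).all(axis=0)


def locate_fovea(roi_green: np.ndarray):
    """Return the (row, col) centroid of the dark region in an 8-bit ROI, or None."""
    complement = 255.0 - roi_green.astype(np.float64)  # dark fovea becomes bright
    # Assumption: midpoint threshold, a stand-in for the paper's binarization rule.
    threshold = (complement.min() + complement.max()) / 2.0
    binary = complement > threshold
    closed = erode(dilate(binary))  # dilation followed by erosion
    if not closed.any():
        return None
    rows, cols = np.nonzero(closed)
    return float(rows.mean()), float(cols.mean())


roi = np.full((41, 41), 200, dtype=np.uint8)  # synthetic bright background
roi[18:23, 25:30] = 50                        # synthetic dark "fovea"
print(locate_fovea(roi))  # (20.0, 27.0)
```

Restricting the input to a small ROI is what keeps such a pipeline cheap: every step is a single pass over a few thousand pixels.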

Compared with other studies, the proposed method exhibits competitive accuracy and computation time. Among classical approaches, it outperforms the accuracy achieved by Zheng [17] and Medhi [20]. Although it slightly trails the accuracy of Chalakkal [16] and Romero-Oraá [21], it surpasses both methods significantly in computational time. On the large Messidor images, the proposed method is 85.2× faster than Chalakkal [16] and 92.2× faster than Romero-Oraá [21]. This improvement is attributed to the efficient detection process, in which effective temporal direction detection plays a crucial role in determining the optimal foveal ROI. With an optimal ROI, detection can be carried out efficiently through simple binary and morphological operations.
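
The improvement factors quoted here appear to be consistent with a relative saving, (t_other − t_proposed) / t_proposed, rather than a plain ratio of the two times. This formula is inferred from the values in Table 3 and is not stated in the text; the function name below is illustrative.

```python
def time_improvement(t_other_s: float, t_proposed_s: float) -> float:
    """Relative time saving: (t_other - t_proposed) / t_proposed."""
    return (t_other_s - t_proposed_s) / t_proposed_s


# Messidor times from Table 3, in seconds per image.
proposed = 0.29
for name, t_other in [("Chalakkal", 25.0), ("Romero-Oraá", 27.04)]:
    print(f"{name}: {time_improvement(t_other, proposed):.1f}x")
```

With these inputs the sketch yields 85.2 and 92.2, matching the table. A plain ratio t_other / t_proposed would be larger by exactly one (about 86.2 and 93.2).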

Compared with deep learning approaches on Messidor, the proposed method's accuracy of 98.24% surpasses that of Al-Bander [6] (96.6%) and falls slightly below that of Song [9] (100%). Al-Bander reports the fastest computational time, at approximately 0.007 seconds per image. However, a direct comparison of computation time between the proposed method and the deep learning approaches is not feasible because of the significantly different computing devices used [16]. Deep learning approaches generally demand high computing power, especially during model development.

Most of the detection errors encountered in the proposed method can be attributed to imperfect OD detection. As explained earlier, the accurate detection of the OD is crucial for determining the temporal direction and the location of the foveal region of interest (ROI). When an error occurs during the OD detection process, it can lead to errors in determining the temporal direction and the reference point for the foveal ROI. Consequently, this can result in inaccurate foveal ROI determination and ultimately lead to erroneous fovea detection.

5. Conclusions

The methods used to determine the temporal direction based on the OD yielded impressive results. Among the three methods tested, the one comparing the OD position with the image center showed the best performance. Although there were challenges with using bright areas and blood vessels as features, overall, all three methods performed well. The proposed methods effectively generated ROIs for the fovea by utilizing a small rectangular ROI based on the fovea's geometric location.

The proposed method demonstrated stability, accuracy, and computational efficiency, and it compared favorably with classical approaches in both accuracy and computation time. While it lagged slightly behind certain methods in accuracy, it excelled in computational speed. Imperfect OD detection was the main cause of detection errors, affecting both the determination of the temporal direction and fovea detection.

Author Contributions

Conceptualization, H.A.W., A.H. and R.S.; methodology, H.A.W.; software, H.A.W.; validation, H.A.W. and M.B.S.; formal analysis, H.A.W., A.H. and R.S.; investigation, H.A.W. and M.B.S.; data curation, H.A.W. and M.B.S.; writing (original draft preparation), H.A.W.; writing (review and editing), A.H., R.S. and M.B.S.; visualization, H.A.W.; supervision, A.H., R.S. and M.B.S.; project administration, A.H.; funding acquisition, A.H. All authors have read and agreed to the published version of the manuscript.

Funding

This research was funded by the Research Directorate of Universitas Gadjah Mada in the Rekognisi Tugas Akhir (RTA) 2022 scheme.

Data Availability Statement

This study used images from three public datasets, namely DRIVE, DiaretDB1 and Messidor. The DRIVE dataset and documentation can be found at “https://drive.grand-challenge.org/”, while DiaretDB1 can be found at “https://www.it.lut.fi/project/imageret/diaretdb1/”. The Messidor dataset is kindly provided by the Messidor program partners at “https://www.adcis.net/en/third-party/messidor/”.

Conflicts of Interest

The authors declare no conflict of interest.

References

1. Ciulla, T.A.; Amador, A.G.; Zinman, B. Diabetic Retinopathy and Diabetic Macular Edema: Pathophysiology, Screening, and Novel Therapies. Diabetes Care 2003, 26, 2653–2664.

2. Aiello LP, Gardner T, King GL, Blankenship G, Cavallerano JD, Ferris LF, et al. Diabetic Retinopathy. Medicine (Baltimore) [Internet]. 2015, 43, 13–19. Available online: http://linkinghub.elsevier.com/retrieve/pii/S1357303914002990.

3. Kiire, C.A.; Porta, M.; Chong, V. Medical management for the prevention and treatment of diabetic macular edema. Surv. Ophthalmol. 2013, 58, 459–465.

4. Medhi JP, Dandapat S. Analysis of Maculopathy in Color Fundus Images. In: 2014 Annual IEEE India Conference (INDICON). Pune: IEEE; 2014. p. 1–4.

5. Ilyasova, N.; Demin, N.; Andriyanov, N. Development of a Computer System for Automatically Generating a Laser Photocoagulation Plan to Improve the Retinal Coagulation Quality in the Treatment of Diabetic Retinopathy. Symmetry 2023, 15, 287.

6. Al-Bander, B.; Al-Nuaimy, W.; Williams, B.M.; Zheng, Y. Multiscale sequential convolutional neural networks for simultaneous detection of fovea and optic disc. Biomed. Signal Process. Control. 2018, 40, 91–101.

7. Sedai, S.; Tennakoon, R.; Roy, P.; Cao, K.; Garnavi, R. Multi-stage segmentation of the fovea in retinal fundus images using fully Convolutional Neural Networks. Proc - Int Symp Biomed Imaging. 2017, 1083–1086.

8. Hasan, M.K.; Alam, M.A.; Elahi, M.T.E.; Roy, S.; Martí, R. DRNet: Segmentation and localization of optic disc and Fovea from diabetic retinopathy image. Artif. Intell. Med. 2021, 111, 102001.

9. Song S, Dang K, Yu Q, Wang Z, Coenen F, Su J, et al. Bilateral-ViT For Robust Fovea Localization. Proc - Int Symp Biomed Imaging. 2022;2022-March.

10. Mamoshina P, Vieira A, Putin E, Zhavoronkov A. Applications of Deep Learning in Biomedicine. Mol Pharm. 2016;13(5):1445–54.

11. Royer, C.; Sublime, J.; Rossant, F.; Paques, M. Unsupervised Approaches for the Segmentation of Dry ARMD Lesions in Eye Fundus cSLO Images. J. Imaging 2021, 7, 143.

12. Lakshminarayanan, V.; Kheradfallah, H.; Sarkar, A.; Balaji, J.J. Automated Detection and Diagnosis of Diabetic Retinopathy: A Comprehensive Survey. J. Imaging 2021, 7, 165.

13. Camara, J.; Neto, A.; Pires, I.M.; Villasana, M.V.; Zdravevski, E.; Cunha, A. Literature Review on Artificial Intelligence Methods for Glaucoma Screening, Segmentation, and Classification. J. Imaging 2022, 8, 19.

14. Syed, A.M.; Akram, M.U.; Akram, T.; Muzammal, M.; Khalid, S.; Khan, M.A. Fundus Images-Based Detection and Grading of Macular Edema Using Robust Macula Localization. IEEE Access 2018, 6, 58784–58793.

15. Fleming, A.D.; Goatman, K.A.; Philip, S.; Olson, J.A.; Sharp, P.F. Automatic detection of retinal anatomy to assist diabetic retinopathy screening. Phys. Med. Biol. 2006, 52, 331–345.

16. Chalakkal, R.J.; Abdulla, W.H.; Thulaseedharan, S.S. Automatic detection and segmentation of optic disc and fovea in retinal images. IET Image Process. 2018, 12, 2100–2110.

17. Zheng S, Pan L, Chen J, Yu L. Automatic and Efficient Detection of The Fovea Center in Retinal Images. In: Proceedings - 2014 7th International Conference on BioMedical Engineering and Informatics, BMEI 2014. 2014. p. 145–50.

18. Tobin, K.W.; Chaum, E.; Govindasamy, V.P.; Karnowski, T.P. Detection of Anatomic Structures in Human Retinal Imagery. IEEE Trans. Med Imaging 2007, 26, 1729–1739.

19. Niemeijer, M.; Abramoff, M.D.; van Ginneken, B. Segmentation of the Optic Disc, Macula and Vascular Arch in Fundus Photographs. IEEE Trans. Med Imaging 2007, 26, 116–127.

20. Medhi, J.P.; Dandapat, S. An effective fovea detection and automatic assessment of diabetic maculopathy in color fundus images. Comput. Biol. Med. 2016, 74, 30–44.

21. Romero-Oraá, R.; García, M.; Oraá-Pérez, J.; López, M.I.; Hornero, R. A robust method for the automatic location of the optic disc and the fovea in fundus images. Comput. Methods Programs Biomed. 2020, 196, 105599.

22. Staal, J.; Abramoff, M.D.; Niemeijer, M.; Viergever, M.A.; Van Ginneken, B. Ridge-Based Vessel Segmentation in Color Images of the Retina. IEEE Trans. Med Imaging 2004, 23, 501–509.

23. Kauppi T, Kalesnykiene V, Kamarainen J-K, Lensu L, Sorri I, Raninen A, Voutilainen R, et al. The DIARETDB1 Diabetic Retinopathy Database. In: Proc Medical Image Understanding and Analysis (MIUA). 2007.

24. Decencière, E.; Zhang, X.; Cazuguel, G.; Lay, B.; Cochener, B.; Trone, C.; Gain, P.; Ordonez, R.; Massin, P.; Erginay, A.; et al. Feedback on a publicly distributed image database: The messidor database. Image Anal. Stereol. 2014, 33, 231.

25. Kamble, R.; Kokare, M.; Deshmukh, G.; Hussin, F.A.; Mériaudeau, F. Localization of optic disc and fovea in retinal images using intensity based line scanning analysis. Comput. Biol. Med. 2017, 87, 382–396.

26. Aquino, A. Establishing the macular grading grid by means of fovea centre detection using anatomical-based and visual-based features. Comput. Biol. Med. 2014, 55, 61–73.

27. Chin, K.S.; Trucco, E.; Tan, L.; Wilson, P.J. Automatic fovea location in retinal images using anatomical priors and vessel density. Pattern Recognit. Lett. 2013, 34, 1152–1158.

28. Welfer, D.; Scharcanski, J.; Marinho, D.R. Fovea center detection based on the retina anatomy and mathematical morphology. Comput. Methods Programs Biomed. 2011, 104, 397–409.

29. Septiarini, A.; Harjoko, A.; Pulungan, R.; Ekantini, R. Optic Disc and Cup Segmentation by Automatic Thresholding with Morphological Operation for Glaucoma Evaluation. Signal Image Video Process 2017, 11, 945–952.

30. Fleming, A.D.; Philip, S.; Goatman, K.A.; Olson, J.A.; Sharp, P.F. Automated Assessment of Diabetic Retinal Image Quality Based on Clarity and Field Definition. Investig. Ophthalmol. Vis. Sci. 2006, 47, 1120–1125.

31. Facey K, Cummins E, Macpherson K, Morris A, Reay L, Slattery J. Organisation of services for diabetic retinopathy screening. Health Technology Assessment Report 1. 2002.

32. Gegundez-Arias, M.E.; Marin, D.; Bravo, J.M.; Suero, A. Locating the fovea center position in digital fundus images using thresholding and feature extraction techniques. Comput. Med Imaging Graph. 2013, 37, 386–393.

Figure 1.

The flow diagram of fovea detection.

Figure 2.

Pre-processing (a) original color image (b) the green layer of image (c) normalized image (d) FOV of the image.

Figure 3.

Results of the OD detection process, (a) image (b) thresholding results (c) image FOV edges (d) crop image (e) the OD candidate blob location (f) initial OD detection (g) final OD detection result.

Figure 4.

Illustration of the retinal image and temporal/nasal direction.

Figure 5.

Appearance of blood vessels converging on a single side of the OD.

Figure 6.

Results of the extraction process for the bright parts of the cup disk and blood vessels, (a) the segmented OD, (b) the brightest area of the cup disk, (c) the blood vessels in OD, (d) the curve representing the vertical vessels in OD, (e) location of the brightest area of cup disk vs. the curve.

Figure 7.

Results of determining the temporal area: (a) initial image with the OD on the right side, (b) comparison of the locations of the cup disk bright area and the vertical blood vessels in the OD of image (a), (c) the temporal area of image (a), (d) initial image with the OD on the left side, (e) comparison of the locations of the cup disk bright area and the vertical blood vessels in the OD of image (d), (f) the temporal area of image (d).

Figure 8.

Illustration of the relationship between OD locations and center coordinates of the retinal image.

Figure 9.

Illustration of the foveal ROI (shown in blue squares).

Figure 10.

The fovea extraction process, (a) foveal ROI in the green channel, (b) results of contrast enhancement with CLAHE, (c) complement of (b), (d) results of binarization of (c), (e) results of dilation + erosion from (d), (f) the fovea detection results.

Figure 11.

Accuracy comparison of each temporal direction determination method.

Figure 12.

Comparison of distance to GT of each temporal direction determination method.

Figure 13.

Computational time comparison of each temporal direction determination method.

Table 1.

Performance evaluation of the temporal direction determination.

| Method | DRIVE (%) | DiaretDB1 (%) | Messidor (%) |
|---|---|---|---|
| T1 | 100 | 100 | 98.66 |
| T2 | 100 | 100 | 99.29 |
| T3 | 100 | 100 | 100 |

Table 2.

Summary of the fovea detection.

| Dataset | Temporal determination method | Accuracy (%) | Distance | Computational time (s/image) |
|---|---|---|---|---|
| DRIVE | T1 | 100 | 14.1 | 0.200 |
| DRIVE | T2 | 100 | 14.1 | 0.202 |
| DRIVE | T3 | 100 | 14.1 | 0.190 |
| DiaretDB1 | T1 | 98.88 | 10.68 | 0.277 |
| DiaretDB1 | T2 | 98.88 | 10.68 | 0.278 |
| DiaretDB1 | T3 | 98.88 | 10.68 | 0.270 |
| Messidor | T1 | 96.83 | 8.63 | 0.294 |
| Messidor | T2 | 97.54 | 8.60 | 0.295 |
| Messidor | T3 | 98.24 | 8.61 | 0.291 |

Table 3.

Comparison of the fovea detection results with other studies.

| Method | Dataset | Accuracy (%) | Computational time (s/image) | Computational time improvement |
|---|---|---|---|---|
| Zheng [17] | DRIVE | 100 | 12 | 62.2× |
| Zheng [17] | DiaretDB1 | 93.3 | 12 | 43.4× |
| Medhi [20] | DRIVE | 100 | - | - |
| Medhi [20] | DiaretDB1 | 95.51 | - | - |
| Chalakkal [16] | DRIVE | 100 | 25 | 130.6× |
| Chalakkal [16] | DiaretDB1 | 95.5 | 25 | 91.6× |
| Chalakkal [16] | Messidor | 98.5 | 25 | 85.2× |
| Romero-Oraá [21] | DRIVE | 100 | 0.54 | 1.8× |
| Romero-Oraá [21] | DiaretDB1 | 100 | 14.55 | 52.8× |
| Romero-Oraá [21] | Messidor | 99.67 | 27.04 | 92.2× |
| Al-Bander [6] | Messidor | 96.6 | - | - |
| Song [9] | Messidor | 100 | - | - |
| Proposed method | DRIVE | 100 | 0.19 | - |
| Proposed method | DiaretDB1 | 98.87 | 0.27 | - |
| Proposed method | Messidor | 98.24 | 0.29 | - |

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).

Copyright: This open access article is published under a Creative Commons CC BY 4.0 license, which permits free download, distribution, and reuse, provided that the author and preprint are cited in any reuse.
