- System Analysis and Design Tutorial

- System Analysis and Design - Home

- System Analysis & Design - Overview

- Differences between System Analysis and System Design

- System Analysis and Design - Communication Protocols

- Horizontal and Vertical Scaling in System Design

- Capacity Estimation in Systems Design

- Roles of Web Server and Proxies in Designing Systems

- Clustering and Load Balancing

- System Development Life Cycle

- System Development Life Cycle

- System Analysis and Design - Requirement Determination

- System Analysis and Design - Systems Implementation

- System Analysis and Design - System Planning

- System Analysis and Design - Structured Analysis

- System Design

- System Analysis and Design - Design Strategies

- System Analysis and Design - Software Deployment

- Software Deployment Example Using Docker

- Functional Vs. Non-functional Requirements

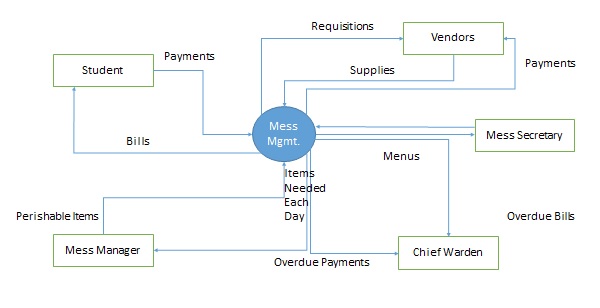

- Data Flow Diagrams(DFD)

- Data Flow Diagram - What It Is?

- Data Flow Diagram - Types and Components

- Data Flow Diagram - Development

- Data Flow Diagram - Balancing

- Data Flow Diagram - Decomposition

- Databases in System Design

- System Design - Databases

- System Design - Database Sharding

- System Design - Database Replication

- System Design - Database Federation

- System Design - Designing Authentication System

- Database Design Vs. Database Architecture

- Database Federation Vs. Database Sharding

- High Level Design(HLD)

- System Design - High Level Design

- System Design - Availability

- System Design - Consistency

- System Design - Reliability

- System Design - CAP Theorem

- System Design - API Gateway

- Low Level Design(LLD)

- System Design - Low Level Design

- System Design - Authentication Vs. Authorization

- System Design - Performance Optimization Techniques

- System Design - Containerization Architecture

- System Design - Modularity and Interfaces

- System Design - CI/CD Pipelines

- System Design - Data Partitioning Techniques

- System Design - Essential Security Measures

- System Implementation

- Input / Output & Forms Design

- Testing and Quality Assurance

- Implementation & Maintenance

- System Security and Audit

- Object-Oriented Approach

- System Analysis & Design Resources

- Quick Guide

- Useful Resources

- Discussion

System Analysis and Design - Quick Guide

System Analysis and Design - Overview

Systems development is systematic process which includes phases such as planning, analysis, design, deployment, and maintenance. Here, in this tutorial, we will primarily focus on −

- Systems analysis

- Systems design

Systems Analysis

It is a process of collecting and interpreting facts, identifying the problems, and decomposition of a system into its components.

System analysis is conducted for the purpose of studying a system or its parts in order to identify its objectives. It is a problem solving technique that improves the system and ensures that all the components of the system work efficiently to accomplish their purpose.

Analysis specifies what the system should do.

Systems Design

It is a process of planning a new business system or replacing an existing system by defining its components or modules to satisfy the specific requirements. Before planning, you need to understand the old system thoroughly and determine how computers can best be used in order to operate efficiently.

System Design focuses on how to accomplish the objective of the system.

System Analysis and Design (SAD) mainly focuses on −

- Systems

- Processes

- Technology

What is a System?

The word System is derived from Greek word Systema, which means an organized relationship between any set of components to achieve some common cause or objective.

A system is “an orderly grouping of interdependent components linked together according to a plan to achieve a specific goal.”

Constraints of a System

A system must have three basic constraints −

A system must have some structure and behavior which is designed to achieve a predefined objective.

Interconnectivity and interdependence must exist among the system components.

The objectives of the organization have a higher priority than the objectives of its subsystems.

For example, traffic management system, payroll system, automatic library system, human resources information system.

Properties of a System

A system has the following properties −

Organization

Organization implies structure and order. It is the arrangement of components that helps to achieve predetermined objectives.

Interaction

It is defined by the manner in which the components operate with each other.

For example, in an organization, purchasing department must interact with production department and payroll with personnel department.

Interdependence

Interdependence means how the components of a system depend on one another. For proper functioning, the components are coordinated and linked together according to a specified plan. The output of one subsystem is the required by other subsystem as input.

Integration

Integration is concerned with how a system components are connected together. It means that the parts of the system work together within the system even if each part performs a unique function.

Central Objective

The objective of system must be central. It may be real or stated. It is not uncommon for an organization to state an objective and operate to achieve another.

The users must know the main objective of a computer application early in the analysis for a successful design and conversion.

Elements of a System

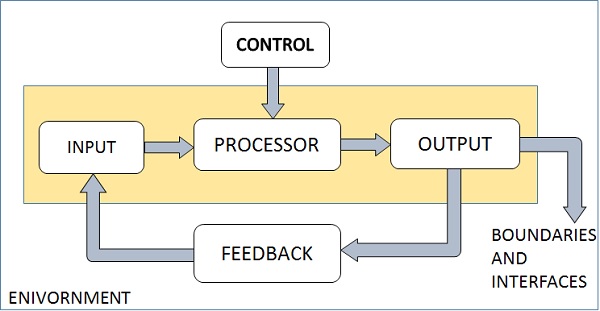

The following diagram shows the elements of a system −

Outputs and Inputs

The main aim of a system is to produce an output which is useful for its user.

Inputs are the information that enters into the system for processing.

Output is the outcome of processing.

Processor(s)

The processor is the element of a system that involves the actual transformation of input into output.

It is the operational component of a system. Processors may modify the input either totally or partially, depending on the output specification.

As the output specifications change, so does the processing. In some cases, input is also modified to enable the processor for handling the transformation.

Control

The control element guides the system.

It is the decision–making subsystem that controls the pattern of activities governing input, processing, and output.

The behavior of a computer System is controlled by the Operating System and software. In order to keep system in balance, what and how much input is needed is determined by Output Specifications.

Feedback

Feedback provides the control in a dynamic system.

Positive feedback is routine in nature that encourages the performance of the system.

Negative feedback is informational in nature that provides the controller with information for action.

Environment

The environment is the “supersystem” within which an organization operates.

It is the source of external elements that strike on the system.

It determines how a system must function. For example, vendors and competitors of organization’s environment, may provide constraints that affect the actual performance of the business.

Boundaries and Interface

A system should be defined by its boundaries. Boundaries are the limits that identify its components, processes, and interrelationship when it interfaces with another system.

Each system has boundaries that determine its sphere of influence and control.

The knowledge of the boundaries of a given system is crucial in determining the nature of its interface with other systems for successful design.

Types of Systems

The systems can be divided into the following types −

Physical or Abstract Systems

Physical systems are tangible entities. We can touch and feel them.

Physical System may be static or dynamic in nature. For example, desks and chairs are the physical parts of computer center which are static. A programmed computer is a dynamic system in which programs, data, and applications can change according to the user's needs.

Abstract systems are non-physical entities or conceptual that may be formulas, representation or model of a real system.

Open or Closed Systems

An open system must interact with its environment. It receives inputs from and delivers outputs to the outside of the system. For example, an information system which must adapt to the changing environmental conditions.

A closed system does not interact with its environment. It is isolated from environmental influences. A completely closed system is rare in reality.

Adaptive and Non Adaptive System

Adaptive System responds to the change in the environment in a way to improve their performance and to survive. For example, human beings, animals.

Non Adaptive System is the system which does not respond to the environment. For example, machines.

Permanent or Temporary System

Permanent System persists for long time. For example, business policies.

Temporary System is made for specified time and after that they are demolished. For example, A DJ system is set up for a program and it is dissembled after the program.

Natural and Manufactured System

Natural systems are created by the nature. For example, Solar system, seasonal system.

Manufactured System is the man-made system. For example, Rockets, dams, trains.

Deterministic or Probabilistic System

Deterministic system operates in a predictable manner and the interaction between system components is known with certainty. For example, two molecules of hydrogen and one molecule of oxygen makes water.

Probabilistic System shows uncertain behavior. The exact output is not known. For example, Weather forecasting, mail delivery.

Social, Human-Machine, Machine System

Social System is made up of people. For example, social clubs, societies.

In Human-Machine System, both human and machines are involved to perform a particular task. For example, Computer programming.

Machine System is where human interference is neglected. All the tasks are performed by the machine. For example, an autonomous robot.

Man–Made Information Systems

It is an interconnected set of information resources to manage data for particular organization, under Direct Management Control (DMC).

This system includes hardware, software, communication, data, and application for producing information according to the need of an organization.

Man-made information systems are divided into three types −

Formal Information System − It is based on the flow of information in the form of memos, instructions, etc., from top level to lower levels of management.

Informal Information System − This is employee based system which solves the day to day work related problems.

Computer Based System − This system is directly dependent on the computer for managing business applications. For example, automatic library system, railway reservation system, banking system, etc.

Systems Models

Schematic Models

A schematic model is a 2-D chart that shows system elements and their linkages.

Different arrows are used to show information flow, material flow, and information feedback.

Flow System Models

A flow system model shows the orderly flow of the material, energy, and information that hold the system together.

Program Evaluation and Review Technique (PERT), for example, is used to abstract a real world system in model form.

Static System Models

They represent one pair of relationships such as activity–time or cost–quantity.

The Gantt chart, for example, gives a static picture of an activity-time relationship.

Dynamic System Models

Business organizations are dynamic systems. A dynamic model approximates the type of organization or application that analysts deal with.

It shows an ongoing, constantly changing status of the system. It consists of −

Inputs that enter the system

The processor through which transformation takes place

The program(s) required for processing

The output(s) that result from processing.

Categories of Information

There are three categories of information related to managerial levels and the decision managers make.

Strategic Information

This information is required by topmost management for long range planning policies for next few years. For example, trends in revenues, financial investment, and human resources, and population growth.

This type of information is achieved with the aid of Decision Support System (DSS).

Managerial Information

This type of Information is required by middle management for short and intermediate range planning which is in terms of months. For example, sales analysis, cash flow projection, and annual financial statements.

It is achieved with the aid of Management Information Systems (MIS).

Operational information

This type of information is required by low management for daily and short term planning to enforce day-to-day operational activities. For example, keeping employee attendance records, overdue purchase orders, and current stocks available.

It is achieved with the aid of Data Processing Systems (DPS).

Differences between System Analysis and System Design

Introduction

System analysis and system design are two critical phases in the development lifecycle of a software system. While they are often used interchangeably, they serve distinct purposes and involve different methodologies. This article will delve into the key differences between system analysis and system design, their roles in the development process, and the techniques used in each phase.

System Analysis

System analysis is the initial phase of a software development project where the requirements of the system are gathered, analyzed, and documented. It involves understanding the problem domain, identifying the stakeholders, and defining the scope and objectives of the system.

Key Activities in System Analysis

Requirement Gathering− Identifying the needs and expectations of the users and stakeholders.

Requirement Analysis− Analyzing the gathered requirements to ensure consistency, feasibility, and completeness.

Feasibility Study− Assessing the technical, economic, and operational feasibility of the proposed system.

Process Modelling− Creating diagrams and models to represent the current and proposed business processes.

Data Modelling− Defining the data entities, attributes, and relationships within the system.

Techniques Used in System Analysis

Interviews− Gathering information from stakeholders through face-to-face or online interviews.

Surveys− Collecting data from a large number of respondents using questionnaires.

Observation− Observing the current system in operation to understand its processes and workflows.

Document Analysis− Examining existing documents, reports, and manuals.

Prototyping− Creating simplified models or mock-up’s of the system to gather feedback and refine requirements.

System Design

System design is the subsequent phase where the detailed specifications of the system are developed. It involves designing the architecture, components, interfaces, and data structures that will implement the requirements defined in the analysis phase.

Key Activities in System Design

Architectural Design− Determining the overall structure and components of the system.

Component Design− Designing individual components and their interactions.

Interface Design− Specifying the interfaces between components and with external systems.

Data Design− Designing the database schema and data structures.

Detailed Design− Creating detailed specifications for each component, including algorithms and data flow.

Techniques Used in System Design

Unified Modelling Language (UML)− A standardized modelling language used to visualize, specify, construct, and document software systems.

Data Flow Diagrams (DFDs)− Diagrams that illustrate the flow of data through a system.

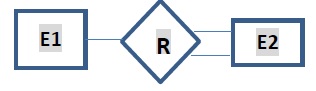

Entity-Relationship Diagrams (ERDs)− Diagrams that represent the entities and relationships between them in a database.

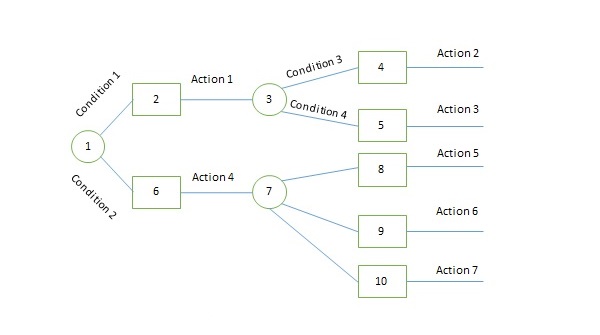

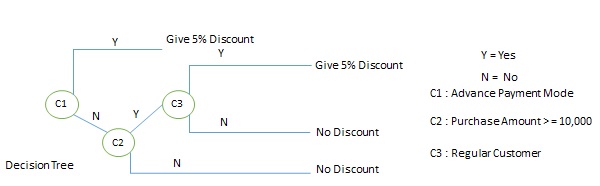

Decision Trees− Diagrams that show the possible outcomes and decisions in a process.

State Transition Diagrams− Diagrams that represent the different states a system can be in and the transitions between them.

Key Differences Between System Analysis and System Design

| Sr.No. | Feature | System Analysis | System Design |

|---|---|---|---|

| 1 | Focus | Understanding the problem domain and gathering requirements. | Specifying the solution and designing the system. |

| 2 | Output | Requirements document | System design specifications |

| 3 | Techniques | Interviews, surveys, observation, document analysis | UML, DFDs, ERDs, decision trees, state transition diagrams |

| 4 | Level of Detail | High-level understanding | Detailed specifications |

The Relationship Between System Analysis and System Design

System analysis and system design are closely interconnected. The output of the analysis phase (the requirements document) serves as the input for the design phase. The design specifications must align with the requirements to ensure that the developed system meets the needs of the users and stakeholders.

Conclusion

System analysis and system design are essential phases in the development of software systems. While they have distinct roles and methodologies, they work together to ensure that the final product meets the desired requirements and delivers value to the users. By effectively conducting system analysis and design, organizations can develop high-quality, efficient, and user-friendly software systems.

System Analysis & Design - Communication Protocols

This article covers different layers of communication, key protocols, and their practical significance.

Introduction

Effective communication is essential in modern networking systems, as it ensures seamless interaction between different devices, applications, and services. Communication protocols act as standardized sets of rules, enabling interoperability, reliability, and security in these systems. From the early days of telegraphy to modern-day internet communication, protocols have evolved to meet the growing demands of data exchange and complex applications.

This article explores the concepts of communication and the various types of protocols that govern data transfer, focusing on the significance of these protocols in today's digital landscape.

Communication Overview

Communication, in technical terms, refers to the exchange of data between two or more systems, either locally or over a network. To facilitate such exchanges, specific rules must be adhered to, ensuring that the sender and receiver can understand each other. This includes data formatting, transmission methods, and error checking.

Types of Communication

Synchronous vs. Asynchronous Communication−

Synchronous− Data is sent and received in real-time (e.g. live video conferencing).

Asynchronous− Data can be sent and processed independently (e.g., emails, messaging).

Unicast, Broadcast, and Multicast Communication−

Unicast− One-to-one communication (e.g. a client-server request).

Broadcast− One-to-many communication (e.g., TV broadcasts, Ethernet data).

Multicast− One-to-select-many (e.g., video conferencing with selected participants).

Half-duplex and Full-duplex Communication−

Half-duplex− Data transmission in one direction at a time (e.g. walkie-talkies).

Full-duplex− Simultaneous transmission and reception (e.g. telephone).

Protocols: The Backbone of Communication

A protocol is a set of rules that defines how data is transmitted and received across networks. It dictates every aspect of communication, from the format of the data to the mechanisms that ensure its delivery. Protocols operate at various levels, each tailored to a specific function within a network.

Importance of Protocols

Standardization− Protocols ensure devices from different manufacturers and environments can communicate.

Reliability− Protocols incorporate error-checking and correction mechanisms.

Interoperability− They allow systems with different architectures to communicate.

Efficiency− Data is transferred efficiently by organizing it into packets or frames.

The OSI Model: A Layered Approach

To understand the different protocols used in communication, we need to explore the Open Systems Interconnection (OSI) Model, which provides a framework for network communication divided into seven layers.

Physical Layer− Deals with the physical transmission of data (e.g. cables, switches).

Data Link Layer− Manages data transfer between directly connected nodes (e.g. MAC addresses, Ethernet).

Network Layer− Handles routing of data across networks (e.g. IP).

Transport Layer− Provides error-checking and guarantees data delivery (e.g. TCP).

Session Layer− Manages sessions between applications (e.g., establishing and terminating connections).

Presentation Layer− Transforms data into the format required by the application layer (e.g., encryption, compression).

Application Layer− Interfaces with end-user applications (e.g. HTTP, FTP).

Each layer in the OSI model has its own set of protocols, each performing specific functions to facilitate communication.

Common Protocols and Their Functions

Transport Layer Protocols

TCP (Transmission Control Protocol)− TCP is connection-oriented, ensuring data is transmitted reliably by establishing a connection between sender and receiver. It uses error-checking mechanisms and ensures data arrives in order, making it ideal for applications requiring accuracy (e.g., web browsing, email).

UDP (User Datagram Protocol)− UDP is connectionless, which makes it faster than TCP. However, it does not guarantee the delivery of data, making it useful for time-sensitive applications like streaming, where speed is more important than perfect accuracy.

Internet Layer Protocols

IP (Internet Protocol)− IP is responsible for addressing and routing packets of data to ensure they reach the correct destination. It operates at the network layer and uses addresses to identify both the sender and receiver.

IPv4 vs. IPv6− IPv4 is the most widely used version, with a 32-bit address scheme. IPv6 is the successor to IPv4, using a 128-bit address to accommodate the growing number of devices on the internet.

Application Layer Protocols

HTTP/HTTPS− HTTP (Hypertext Transfer Protocol) is the foundation of data communication for the World Wide Web. HTTPS adds a layer of security through encryption, making it essential for secure communication on the web.

FTP (File Transfer Protocol)− Used for transferring files between computers. Though widely used, it has largely been replaced by more secure alternatives like SFTP and FTPS, which incorporate encryption.

SMTP (Simple Mail Transfer Protocol)− Facilitates the sending of emails. Often used in combination with IMAP or POP for retrieving emails from servers.

DNS (Domain Name System)− Translates human-readable domain names (e.g., www.example.com) into IP addresses that computers can understand.

Wireless Protocols

Wi-Fi (IEEE 802.11)− A set of standards for wireless local area networks (WLANs) that enable devices to communicate over wireless networks.

Bluetooth− Used for short-range communication between devices, such as connecting wireless headphones to a phone.

Security Protocols: Ensuring Safe Communication

With the rise of cyber threats, security protocols have become integral to maintaining data integrity, confidentiality, and authentication. Several protocols work to secure data in transit.

SSL/TLS (Secure Socket Layer / Transport Layer Security)− These protocols secure communication over networks, especially the internet, by encrypting data. SSL has been deprecated in favour of TLS due to vulnerabilities.

IPsec (Internet Protocol Security)− A suite of protocols used to secure Internet Protocol (IP) communications by authenticating and encrypting each IP packet in a communication session.

SSH (Secure Shell)− Provides a secure method for remote login and other secure network services over an unsecured network.

Future of Communication Protocols

As technology continues to evolve, the demand for faster, more secure, and reliable communication protocols grows. New protocols are being developed to accommodate emerging technologies, including the Internet of Things (IoT), 5G networks, and quantum computing.

5G Protocols− The fifth-generation mobile networks promise faster data transfer rates, reduced latency, and better support for IoT devices, requiring new protocols like 5G-NR (New Radio) and 5GC (5G Core Network).

IoT Communication Protocols− Protocols such as MQTT (Message Queuing Telemetry Transport) and CoAP (Constrained Application Protocol) are lightweight and ideal for the low-power, low-bandwidth requirements of IoT devices.

Quantum Communication Protocols− With the development of quantum computing, researchers are exploring new protocols that leverage quantum entanglement and superposition to create secure communication channels, potentially revolutionizing encryption and network security.

Conclusion

Communication and protocols form the backbone of modern networking, enabling devices and systems to communicate efficiently, reliably, and securely. As technology advances, so too do the protocols that govern data exchange, adapting to new requirements such as higher speeds, increased security, and the ability to handle more devices. The future holds exciting prospects, with advancements like 5G and quantum communication set to redefine the way we think about and use communication protocols.

Horizontal and Vertical Scaling in System Design

Introduction

As applications and systems grow in size and complexity, ensuring they remain efficient and responsive becomes a key challenge. This leads to the discussion of scalability, which is the system’s ability to handle increased load. Scalability can be achieved through two primary methods: horizontal scaling (scale-out) and vertical scaling (scale-up). Both approaches come with distinct advantages, challenges, and best-use cases. In this article, we'll explore the concepts of horizontal and vertical scaling in detail, discussing their respective architectures, benefits, and limitations, as well as how to choose the right scaling method for specific scenarios.

What is Scaling?

Before diving into the specifics of horizontal and vertical scaling, it is essential to understand what scaling entails.

Scaling refers to the process of adjusting resources—such as computing power, storage, or network capabilities—to ensure that an application can handle increased demand without sacrificing performance. As systems grow and face more users, more transactions, or increased data throughput, scaling ensures that the system maintains its efficiency and does not become a bottleneck.

There are two fundamental approaches to scaling: vertical and horizontal.

Vertical Scaling (Scale-Up)

Vertical scaling, or scaling up, involves enhancing the capacity of a single machine or server by adding more powerful resources to it. These resources may include−

Adding more CPU (Central Processing Units).

Increasing RAM (Random Access Memory).

Expanding storage capacity (SSD/HDD).

Increasing network bandwidth capacity.

Essentially, vertical scaling is about making a single machine more powerful by upgrading its components or replacing it with a stronger version.

Vertical Scaling

In vertical scaling, the application continues to run on the same physical machine, but the machine's capabilities are improved. For example, if an application hosted on a server becomes slow due to an increased number of requests, vertical scaling might involve replacing that server with a more powerful one (e.g., from a 16-core processor to a 32-core processor).

The architecture remains unchanged—there is still only one machine or node, albeit one that is much more powerful.

Benefits of Vertical Scaling

Simplicity− Vertical scaling is generally straightforward since it does not require major changes to the application architecture. You simply add more resources to the existing server.

Reduced Complexity− Managing a single server reduces the complexity of operations, including maintenance and monitoring.

Consistency− Since the system runs on a single machine, data consistency is easier to maintain, particularly in cases involving databases, where consistency guarantees are paramount.

Ideal for Monolithic Applications− Monolithic applications, which are tightly coupled and difficult to break down into smaller components, may benefit from vertical scaling since the entire application runs on one machine.

Limitations of Vertical Scaling

Single Point of Failure− With only one machine handling the entire application, it becomes a single point of failure. If the server crashes or faces hardware issues, the whole system may go down.

Resource Limits− Eventually, a physical server can only be upgraded so much. There is a limit to how much CPU, RAM, or storage can be added to a single machine. This "ceiling" may restrict long-term scalability.

Cost− High-end servers and components are expensive. Upgrading a server to the most advanced hardware options can result in substantial upfront costs.

Downtime During Upgrades− Depending on the system, upgrading hardware (e.g., adding RAM or storage) might require shutting down the server, which leads to downtime. In mission-critical systems, even brief downtime can be problematic.

Horizontal Scaling (Scale-Out)

Horizontal scaling, or scaling out, involves adding more machines or nodes to the system, effectively distributing the load across multiple devices. Instead of upgrading the power of a single machine, you add more machines to a cluster to share the load.

Horizontal Scaling Architecture

In horizontal scaling, the architecture is designed for a distributed system where multiple servers (or nodes) work together to handle the increased load.

For example, a website experiencing a surge in traffic might add more servers to handle the additional requests. A load balancer is typically used to distribute traffic evenly across these servers, ensuring that no single machine is overwhelmed. In a distributed database system, horizontal scaling means partitioning the data across multiple machines (sharding) to handle more transactions or larger datasets.

Benefits of Horizontal Scaling

No Theoretical Limit− One of the biggest advantages of horizontal scaling is that there is no theoretical limit to how many servers you can add. As demand grows, you can continue adding machines, thus providing potentially infinite scalability.

Fault Tolerance− Since multiple machines are involved, if one node fails, the system can continue to operate using the remaining nodes. This redundancy provides greater fault tolerance.

Cost Efficiency at Scale− Instead of investing in one high-end machine, horizontal scaling allows the use of many lower-end machines. This can be more cost-effective in the long run, especially in cloud environments where resources can be allocated dynamically.

Better for Cloud and Distributed Applications− Horizontal scaling is ideal for cloud-native and distributed systems like microservices architectures, where different parts of the application can run independently on different servers.

Limitations of Horizontal Scaling

Increased Complexity− Managing multiple servers is inherently more complex than managing a single server. It requires more sophisticated tools for orchestration, monitoring, and load balancing.

Data Consistency Issues− In distributed systems, maintaining data consistency across multiple nodes can be challenging, especially in applications where strong consistency is crucial (e.g., financial applications).

Network Overhead− With more servers, communication between nodes increases, potentially leading to network latency and overhead. Ensuring efficient communication between machines becomes a key concern.

Scaling the Entire Stack− For certain workloads, scaling horizontally might require that the entire application stack (e.g., databases, caches, and processing systems) be designed for horizontal distribution, which may require significant refactoring.

Horizontal vs. Vertical Scaling: A Comparison

| Sr.No. | Feature | Horizontal Scaling | Vertical Scaling |

|---|---|---|---|

| 1 | Definition | Adding more machines or nodes | Enhancing resources on a single machine |

| 2 | Cost | More cost-effective for long-term | Expensive upfront for powerful hardware |

| 3 | Fault Tolerance | High, due to multiple nodes | Low, due to a single point of failure |

| 4 | Complexity | Higher (requires orchestration) | Lower (single machine to manage) |

| 5 | Limits | No theoretical limit | Hardware limits |

| 6 | Downtime During Upgrades | Little to no downtime | May require downtime |

| 7 | Use Case | Cloud, distributed apps, microservices | Monolithic, single-machine systems |

Hybrid Approaches

In many cases, organizations use a combination of both horizontal and vertical scaling. For example, they may start with vertical scaling to meet initial needs and switch to horizontal scaling as demand increases. In cloud environments like AWS or Google Cloud, auto-scaling features allow companies to seamlessly switch between the two, depending on the load.

Some systems also employ hybrid architectures, using vertical scaling for certain components (e.g., databases) while horizontally scaling other components (e.g., web servers). This hybrid approach can offer the best of both worlds but comes at the cost of increased architectural complexity.

When to Choose Horizontal or Vertical Scaling

The choice between horizontal and vertical scaling depends on several factors−

Application Architecture− Distributed systems or microservices naturally lend themselves to horizontal scaling, whereas monolithic applications are easier to scale vertically.

Budget− Horizontal scaling may be more cost-effective in the cloud, where additional instances can be spun up as needed. Vertical scaling may result in high costs due to expensive hardware.

Consistency Requirements− Applications requiring strict data consistency (like banking systems) may favour vertical scaling due to simpler data management.

Expected Growth− If your application is expected to grow rapidly, horizontal scaling may be more appropriate since it offers theoretically unlimited scaling potential.

Conclusion

Both horizontal and vertical scaling are critical concepts in system architecture, each with unique strengths and challenges. Vertical scaling offers simplicity but is limited by hardware constraints, while horizontal scaling provides virtually limitless scalability at the cost of increased complexity. Understanding the nuances of these scaling methods is essential for building efficient, reliable, and scalable systems, especially as more applications migrate to cloud-native architectures.

Selecting the right scaling strategy depends on factors such as your application’s architecture, budget, and scalability needs. In today’s cloud-driven environment, a hybrid approach that leverages the benefits of both horizontal and vertical scaling may offer the most flexibility and cost-effectiveness.

Capacity Estimation in Systems Design

Introduction

Capacity estimation is essential in systems design, involving the process of predicting the required resources—such as server capacity, storage, network bandwidth, and database performance—necessary to handle expected workloads. Proper estimation prevents system bottlenecks, reduces operational costs, and ensures a smooth user experience. This article explores fundamental concepts, estimation methods, tools, and considerations involved in capacity estimation, especially within large-scale distributed systems.

Understanding Capacity Estimation

Definition and Importance: Explain capacity estimation as a planning strategy to ensure a system can handle expected and peak workloads without failure.

Key Metrics

Throughput− Transactions per second or requests per second.

Latency− Time to complete a transaction or request.

Response Time− The total time a user waits for a response.

Load and Concurrency− The number of concurrent users or operations.

Utilization− Percentage of capacity used.

Business Impact− Outline the cost implications of over-provisioning and the risk of under-provisioning.

Fundamental Concepts in Capacity Estimation

Capacity vs. Performance− Distinguish between capacity, focusing on the quantity of service (e.g., number of requests handled), and performance, emphasizing the quality of service (e.g., response time).

Scalability− Discuss how systems should be designed to scale horizontally (adding more instances) and vertically (upgrading resources).

System Bottlenecks− Types of bottlenecks (CPU, memory, I/O, network) and their impact on capacity.

Steps in Capacity Estimation

Define Requirements− Identify the expected workload, peak traffic, and availability needs.

Analyze Historical Data− Use historical system data to find patterns and identify trends.

Model the System−

Workload Modelling− Characterize the types and intensity of workloads (e.g., read-heavy vs. write-heavy operations).

Resource Consumption Modelling− Quantify resource usage for each workload (CPU, memory, disk I/O).

Concurrency and Scaling Factors− Include factors for concurrency and examine how each resource is affected.

Conduct Load Testing− Perform stress and load tests to validate models and identify bottlenecks.

Estimate Growth− Forecast workload growth based on business expectations.

Provision Resources− Calculate the required resources for the projected capacity with a margin for peak usage.

Capacity Estimation Techniques

Analytical Techniques

Queuing Theory− Used to predict performance under different load conditions.

Little’s Law− Applies to systems in steady state to estimate relationships among arrival rate, throughput, and response time.

Empirical Techniques

Load Testing− Simulating real-world load to identify the maximum handling capacity.

Simulation Modelling− Creating virtual models of systems to analyze resource utilization and traffic patterns.

Predictive Techniques−

Machine Learning Models− Leveraging historical data with predictive models to forecast capacity.

Time Series Analysis− Analyzing past workload patterns to predict future demand trends.

Tools for Capacity Estimation

Load Testing Tools

Apache JMeter− For simulating loads on networks and testing system performance.

Gatling− A high-performance load testing tool for web applications.

Monitoring and Analytics Tools

Prometheus & Grafana− Used for monitoring, alerting, and visualizing real-time metrics.

Datadog− Offers performance monitoring with real-time alerts for resource thresholds.

Capacity Planning and Forecasting Tools

Amazon CloudWatch− Provides monitoring and automatic scaling recommendations.

Google Stackdriver− Monitoring and logging for GCP, with resource-based capacity planning.

Custom Solutions− Building custom scripts and tools to collect, analyze, and forecast data specific to system needs.

Challenges in Capacity Estimation

Demand Uncertainty− Variability in demand and unpredictable spikes.

Changing System Architecture− Challenges when infrastructure or software changes.

Distributed Systems Complexity− Increased complexity when scaling distributed systems across regions or data centres.

Resource Dependencies− Complex interdependencies between resources that can lead to bottlenecks or scaling issues.

Cost-Benefit Balance− Balancing cost considerations against desired performance levels.

Best Practices for Effective Capacity Estimation

Regular Capacity Reviews− Conduct frequent reviews and updates to capacity plans based on evolving workloads.

Utilize Automation− Implement automated tools for load testing, monitoring, and scaling.

Build in Redundancy− Design systems with failover and redundancy to avoid single points of failure.

Monitor and Alert− Set up alerts for key metrics to catch bottlenecks early.

Collaborate with Stakeholders− Align capacity plans with business objectives, budget constraints, and expected growth.

Conclusion

Capacity estimation is a proactive step in systems design that ensures a balance between cost, performance, and user satisfaction. By understanding core concepts, employing effective estimation techniques, and using the right tools, system architects can forecast capacity needs and build robust, scalable systems. Capacity estimation is an ongoing process that, when done correctly, can yield cost savings, high performance, and optimal user experience.

Roles of Web Server and Proxies in Designing Systems

This article covers roles of web servers and proxies while preparing a system designs and architectures.

Understanding Web Servers

Definition

A web server is software or hardware that serves web content to clients over the internet.

Functionality

Handling HTTP requests and responses.

Storing and serving static content (HTML, CSS, JavaScript).

Running dynamic applications (e.g., through CGI, PHP, or frameworks like Spring Boot).

Examples of Web Servers

Apache Tomcat

Apache Http

Nginx

Microsoft IIS

Core Responsibilities of Web Servers

Content Delivery

Responding to requests for web pages and resources.

Serving static files and rendering dynamic content.

Resource Management

Managing connections and sessions.

Load balancing to handle multiple requests.

Security Features

HTTPS support through SSL/TLS.

Basic authentication and access control.

Understanding Proxies

Definition

A proxy server acts as an intermediary between a client and a destination server.

Types of Proxies

Forward proxies− Used by clients to access the internet.

Reverse proxies− Positioned in front of web servers to handle requests on their behalf.

Common Use Cases

Caching

Filtering

Content Delivery

Roles of Proxies

Performance Optimization

Caching frequently accessed content to reduce load times.

Reducing bandwidth usage by compressing content.

Security Enhancement

Hiding client IP addresses for anonymity.

Protecting against attacks (e.g., DDoS – Distributed Denial of Service).

Access Control

Filtering traffic based on rules (e.g., content filtering in organizations).

Enforcing company policies for web access.

Interplay Between Web Servers and Proxies

How They Work Together

Proxies forwarding requests to web servers and relaying responses back to clients.

Load balancing techniques involving multiple web servers behind a reverse proxy.

Real-world Example

You can discuss a scenario in which a proxy server is used to enhance the performance and security of a web server.

Challenges and Considerations

Web Server Challenges

Handling high traffic volumes.

Managing security vulnerabilities.

Proxy Challenges

Configuring proxies correctly for optimal performance.

Balancing anonymity and security with usability.

Best Practices

Regular updates and security patches for servers and proxies.

Implementing monitoring tools to track performance and security threats.

Advancements in Web Servers

Web servers are undergoing significant transformations driven by the need for speed and scalability. The rise of microservices architecture is pushing developers to adopt lightweight web servers that can efficiently handle numerous requests. Technologies such as Docker and Kubernetes facilitate containerization, allowing applications to run in isolated environments. This approach enhances resource utilization and simplifies deployment.

Moreover, the integration of serverless computing is revolutionizing the traditional web server model. Platforms like AWS Lambda and Azure Functions enable developers to run code in response to events without managing server infrastructure. This not only reduces operational overhead but also allows for automatic scaling based on demand.

Security remains a top priority, with advancements in TLS (Transport Layer Security) and HTTP/3 protocols enhancing data protection and reducing latency. The adoption of AI-driven security solutions is also on the rise, helping to identify and mitigate threats in real-time.

Clustering and Load Balancing

Introduction to Clustering and Load Balancing

Clustering and load balancing are essential for modern applications to ensure they are scalable, highly available, and perform well under varying loads. Here's why they are significant.

Clustering

High Availability− Clustering ensures that if one server goes down, others can take over, minimizing downtime and ensuring continuous availability.

Scalability− By adding more nodes to a cluster, applications can handle more users and more data without performance degradation.

Fault Tolerance− Clusters are designed to continue operating even when individual nodes fail, which enhances the resilience of the application.

Resource Management− Distributes workloads across multiple nodes, optimizing resource usage and preventing any single node from becoming a bottleneck.

Load Balancing

Efficient Resource Utilization− Load balancing distributes incoming traffic across multiple servers, ensuring that no single server is overwhelmed, which optimizes resource utilization.

Improved Performance− By balancing the load, applications can respond faster to user requests, enhancing the overall user experience.

Redundancy− Load balancing ensures that if one server fails, traffic can be redirected to other operational servers, providing redundancy.

Scalability− Easily scales by adding more servers to the pool, allowing applications to handle increasing traffic seamlessly.

Key Concepts of Clustering

Types of Clustering

High-Availability (HA) Clustering− For fault tolerance and minimal downtime.

Load Balancing Clustering− Distributing workloads to multiple nodes. If a node fails, the request is transferred to the next node.

Storage Clustering− For managing data in distributed systems.

Examples of clustering solutions− Kubernetes, Apache Kafka, Hadoop.

Key Concepts of Load Balancing

Objectives− Avoid overloading any single server, reduce response times, and optimize resource usage.

Types of Load Balancers

Hardware Load Balancers− Specialized devices.

Software Load Balancers− Run on commodity hardware or virtual instances.

DNS Load Balancing− Uses DNS (Domain Name System) to route requests to different servers.

Load Balancing Algorithms and Techniques

Round Robin− Requests are distributed sequentially across servers.

Least Connections− Directs traffic to the server with the fewest active connections.

Weighted Round Robin and Least Connections− Assigns weights to servers based on capacity.

IP Hashing− Routes requests based on the client’s IP address.

Random− Routes requests to random servers.

Dynamic Load Balancing− Adapts based on current server performance.

Tools and Technologies for Load Balancing

Nginx− A popular open-source reverse proxy and load balancer.

HAProxy− A fast and reliable load balancer for TCP and HTTP based applications.

AWS Elastic Load Balancing (ELB)− Load balancing for AWS resources, including EC2 and containers.

Azure Load Balancer− Manages traffic for applications on Microsoft Azure.

Traefik− A modern load balancer for microservices, with built-in support for Kubernetes.

Clustering Technologies and Architectures

Apache Kafka− A distributed streaming platform that supports clustering.

Kubernetes− Manages containerized applications and scales them automatically.

Apache Cassandra− A distributed NoSQL database designed for clustering and fault tolerance.

Active-Active vs. Active-Passive Clustering− In an active-active setup, all nodes (servers) in the cluster are actively processing requests simultaneously. In an active-passive setup, only one node (or a primary set of nodes) is actively handling requests at any time, while the other node(s) remain on standby.

Configuring Load Balancers for Different Applications

Web Applications− Using HTTP/HTTPS load balancing.

Database Load Balancing− Balancing read and write requests (e.g., with MySQL).

Microservices and APIs− Configuring API gateways with load balancing.

Real-time Applications− Configuring WebSocket load balancing for low latency.

Monitoring and Maintaining Clustering and Load Balancing Systems

Importance of Monitoring− Ensure uptime, performance, and detect issues.

Tools for Monitoring

Prometheus and Grafana− Metric collection and visualization.

Datadog and New Relic− End-to-end monitoring for cloud and on-premise environments.

ELK Stack− Logs analysis for load balancer and cluster events.

Common Maintenance Tasks− Updating configurations, scaling up/down, handling node failures.

Identifying and resolving common load balancing and clustering issues.

Here's a look at common issues that arise in load balancing and clustering, along with strategies to identify and resolve them. These issues often relate to misconfiguration, capacity limitations, and network constraints, and addressing them effectively helps maintain high availability and performance.

Uneven Load Distribution

Symptoms− Some servers experience high CPU or memory usage, while others remain underutilized.

Causes− This can be due to a poorly configured load balancing algorithm (e.g., Round Robin may not work well if servers have unequal processing capabilities) or an incorrect weighting setup in Weighted Round Robin or Least Connections algorithms.

Resolution

Adjust the load balancing algorithm to one that matches the application’s requirements. Use a Weighted Load Balancing approach to match server capacities.

For cloud-based solutions, consider auto-scaling policies to add resources automatically under high load conditions.

Session Persistence (Sticky Sessions) Issues

Sticky sessions, also known as session affinity, is a technique used in load balancing to ensure that a user's requests are always directed to the same server throughout a session.

Symptoms− Users are logged out unexpectedly or lose session data when redirected to different servers.

Causes− Load balancers may be configured without sticky sessions, leading to loss of session continuity if a user’s requests are routed to different servers.

Resolution

Enable session persistence (sticky sessions) on the load balancer to ensure that requests from a given client in the same session are routed to the same server.

For more scalable solutions, implement distributed session management (e.g., session data stored in a database or distributed cache like Redis) to avoid dependency on individual servers.

Configuration Drift

Symptoms− Inconsistent behaviour across nodes, such as different software versions or configurations.

Causes− Manual configuration changes lead to mismatches across cluster nodes.

Resolution

Use configuration management tools like Ansible, Puppet, or Chef to ensure consistent configurations across all nodes.

Implement infrastructure as code (IaC) practices, using tools like Terraform to enforce versioned and consistent configuration states.

DNS Caching Issues in DNS Load Balancing

Symptoms− Clients are directed to unhealthy nodes even after.

Causes− DNS caching at the client side or intermediary resolvers can keep IP mappings of decommissioned or faulty nodes.

Resolution

Reduce the Time-to-Live (TTL) on DNS records to ensure faster propagation of changes in DNS-based load balancers.

Use failover DNS records that redirect traffic to alternative nodes in case primary nodes are unreachable.

Logging and Monitoring Challenges

Symptoms− Lack of insight into traffic patterns, unbalanced loads, or delays in troubleshooting issues.

Causes− Inadequate monitoring or logging on the load balancer and clustering nodes.

Resolution

Integrate monitoring tools such as Prometheus, Grafana, or Datadog for real-time metrics.

Use centralized logging (e.g., ELK Stack or Fluentd) to aggregate logs from different nodes and provide unified access.

Set up alerting systems to notify administrators of unusual patterns, such as sudden traffic spikes, server failures, or high latencies.

Future of Clustering and Load Balancing

Trends in Clustering and Load Balancing

Edge Computing− Deploying clusters closer to data sources for latency reduction.

AI-driven Load Balancing− Using machine learning to optimize request routing.

Serverless Architectures− Impact of serverless on traditional load balancing.

Potential Challenges− Increased complexity in managing distributed systems, security concerns.

System Development Life Cycle

An effective System Development Life Cycle (SDLC) should result in a high quality system that meets customer expectations, reaches completion within time and cost evaluations, and works effectively and efficiently in the current and planned Information Technology infrastructure.

System Development Life Cycle (SDLC) is a conceptual model which includes policies and procedures for developing or altering systems throughout their life cycles.

SDLC is used by analysts to develop an information system. SDLC includes the following activities −

- requirements

- design

- implementation

- testing

- deployment

- operations

- maintenance

Phases of SDLC

Systems Development Life Cycle is a systematic approach which explicitly breaks down the work into phases that are required to implement either new or modified Information System.

Feasibility Study or Planning

Define the problem and scope of existing system.

Overview the new system and determine its objectives.

Confirm project feasibility and produce the project Schedule.

During this phase, threats, constraints, integration and security of system are also considered.

A feasibility report for the entire project is created at the end of this phase.

Analysis and Specification

Gather, analyze, and validate the information.

Define the requirements and prototypes for new system.

Evaluate the alternatives and prioritize the requirements.

Examine the information needs of end-user and enhances the system goal.

A Software Requirement Specification (SRS) document, which specifies the software, hardware, functional, and network requirements of the system is prepared at the end of this phase.

System Design

Includes the design of application, network, databases, user interfaces, and system interfaces.

Transform the SRS document into logical structure, which contains detailed and complete set of specifications that can be implemented in a programming language.

Create a contingency, training, maintenance, and operation plan.

Review the proposed design. Ensure that the final design must meet the requirements stated in SRS document.

Finally, prepare a design document which will be used during next phases.

Implementation

Implement the design into source code through coding.

Combine all the modules together into training environment that detects errors and defects.

A test report which contains errors is prepared through test plan that includes test related tasks such as test case generation, testing criteria, and resource allocation for testing.

Integrate the information system into its environment and install the new system.

Maintenance/Support

Include all the activities such as phone support or physical on-site support for users that is required once the system is installing.

Implement the changes that software might undergo over a period of time, or implement any new requirements after the software is deployed at the customer location.

It also includes handling the residual errors and resolve any issues that may exist in the system even after the testing phase.

Maintenance and support may be needed for a longer time for large systems and for a short time for smaller systems.

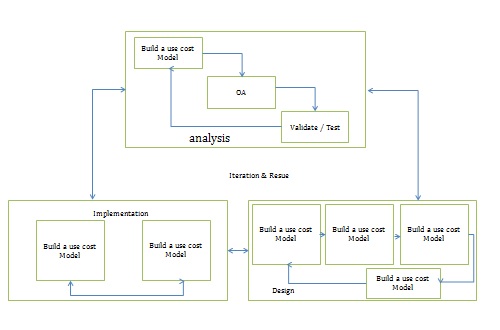

Life Cycle of System Analysis and Design

The following diagram shows the complete life cycle of the system during analysis and design phase.

Role of System Analyst

The system analyst is a person who is thoroughly aware of the system and guides the system development project by giving proper directions. He is an expert having technical and interpersonal skills to carry out development tasks required at each phase.

He pursues to match the objectives of information system with the organization goal.

Main Roles

Defining and understanding the requirement of user through various Fact finding techniques.

Prioritizing the requirements by obtaining user consensus.

Gathering the facts or information and acquires the opinions of users.

Maintains analysis and evaluation to arrive at appropriate system which is more user friendly.

Suggests many flexible alternative solutions, pick the best solution, and quantify cost and benefits.

Draw certain specifications which are easily understood by users and programmer in precise and detailed form.

Implemented the logical design of system which must be modular.

Plan the periodicity for evaluation after it has been used for some time, and modify the system as needed.

Attributes of a Systems Analyst

The following figure shows the attributes a systems analyst should possess −

Interpersonal Skills

- Interface with users and programmer.

- Facilitate groups and lead smaller teams.

- Managing expectations.

- Good understanding, communication, selling and teaching abilities.

- Motivator having the confidence to solve queries.

Analytical Skills

- System study and organizational knowledge

- Problem identification, problem analysis, and problem solving

- Sound commonsense

- Ability to access trade-off

- Curiosity to learn about new organization

Management Skills

- Understand users jargon and practices.

- Resource & project management.

- Change & risk management.

- Understand the management functions thoroughly.

Technical Skills

- Knowledge of computers and software.

- Keep abreast of modern development.

- Know of system design tools.

- Breadth knowledge about new technologies.

System Analysis and Design - Requirement Determination

Introduction

In the realm of systems analysis and design, requirement determination is a critical phase that sets the foundation for successful software development. It involves gathering, analyzing, and documenting the needs and expectations of stakeholders to ensure that the final system meets its intended purpose. This article explores the importance of requirement determination, its methodologies, challenges, and best practices, providing a comprehensive overview for both novice and experienced practitioners.

Importance of Requirement Determination

Requirement determination is essential for several reasons−

Clarity of Purpose− Clearly defined requirements help stakeholders understand the system's purpose and functionality, reducing ambiguity.

Stakeholder Satisfaction− Engaging stakeholders early and accurately capturing their needs leads to greater satisfaction with the final product.

Cost and Time Efficiency− Well-documented requirements minimize the risk of costly changes during later development stages, leading to a more efficient project lifecycle.

Risk Management− Identifying potential issues early allows teams to devise strategies to mitigate risks before they escalate.

Framework for Development− Requirements serve as a guide for system design, coding, testing, and implementation, ensuring alignment throughout the development process.

Methodologies for Requirement Determination

Several methodologies can be employed during the requirement determination phase, each with its strengths and weaknesses−

Interviews

Interviews involve direct discussions with stakeholders to elicit their needs and preferences. They can be structured, semi-structured, or unstructured, allowing for flexibility in gathering information.

Advantages

Direct insights from users.

Opportunity for follow-up questions and clarification.

Disadvantages

Time-consuming.

Potential for biased responses if not carefully managed.

Surveys and Questionnaires

Surveys allow for the collection of data from a larger group of stakeholders. They can be used to gather quantitative data, making it easier to analyse trends and common requirements.

Advantages

Reach a broad audience quickly.

Can provide statistical insights.

Disadvantages

Limited depth of information.

Potentially low response rates.

Workshops and Focus Groups

Workshops and focus groups gather stakeholders in a collaborative environment to discuss requirements. This method encourages interaction and can lead to creative solutions.

Advantages

Fosters collaboration and discussion.

Generates diverse ideas and perspectives.

Disadvantages

Dominant voices may overshadow quieter participants.

Requires skilled facilitation to be effective.

Observation

Observation involves studying users in their natural environment to understand how they interact with existing systems. This method can reveal hidden needs and workflows.

Advantages

Provides real-world context.

Can uncover issues that users may not articulate.

Disadvantages

Time-intensive.

Observer bias can affect findings.

Document Analysis

Reviewing existing documentation such as user manuals, system specifications, and business process diagrams can provide insights into current systems and inform new requirements.

Advantages

Leverages existing knowledge.

Identifies gaps in current systems.

Disadvantages

Documentation may be outdated or incomplete.

Requires expertise to interpret effectively.

Challenges in Requirement Determination

Despite its importance, requirement determination is fraught with challenges−

Changing Requirements− As projects evolve, stakeholders may change their minds about what they need, complicating the process.

Stakeholder Conflicts− Different stakeholders may have conflicting needs or priorities, making consensus difficult.

Communication Barriers− Misunderstandings can arise due to jargon, assumptions, or differing perspectives, leading to incomplete or incorrect requirements.

Incomplete Information− Stakeholders may not fully understand their needs, leading to gaps in the requirements.

Time Constraints− Tight project timelines can pressure teams to rush through the requirement determination phase, increasing the likelihood of errors.

Best Practices for Effective Requirement Determination

To overcome challenges and enhance the effectiveness of requirement determination, consider the following best practices−

Involve Stakeholders Early and Often− Engage users and stakeholders from the outset and maintain ongoing communication throughout the project.

Use Multiple Techniques− Employ a combination of methodologies to gather comprehensive insights and validate findings.

Document Requirements Clearly− Use clear, concise language and structured formats (e.g., use cases, user stories) to document requirements for easy reference.

Prioritize Requirements− Work with stakeholders to prioritize requirements based on business value, feasibility, and urgency, ensuring that critical needs are addressed first.

Conduct Regular Reviews− Schedule regular reviews of the requirements with stakeholders to validate and adjust as necessary, ensuring alignment throughout the project.

Leverage Prototyping− Use prototypes or wireframes to visualize requirements and gather feedback, helping stakeholders clarify their needs.

Maintain Traceability− Establish a traceability matrix to track requirements from initial gathering through design, development, and testing, ensuring that all requirements are met.

Conclusion

Requirement determination is a vital step in the systems analysis and design process. By understanding its importance, employing appropriate methodologies, addressing challenges, and following best practices, organizations can significantly enhance the likelihood of project success. A well-executed requirement determination phase not only leads to a system that meets user needs but also fosters collaboration, reduces risks, and ultimately contributes to stakeholder satisfaction and business success.

System Analysis and Design - Systems Implementation

Introduction

Definition and significance of systems implementation in project management and IT.

Role in bridging the gap between design and operation.

Overview of the key steps in implementing a new system.

Planning for Implementation

Defining Scope and Objectives

Understanding project goals.

Importance of aligning implementation with business strategy.

Resource Allocation

Identifying resources (human, technical, and financial).

Resource planning and timeline management.

Risk Assessment

Recognizing potential implementation risks.

Establishing contingency plans to mitigate risks.

Choosing the Right Implementation Approach

Big Bang Approach

Replacing old systems with new ones at once.

Pros and cons of immediate transition.

Phased Implementation

Gradual deployment in stages.

Benefits of controlling scope and user adaptation.

Parallel Implementation

Running old and new systems concurrently.

Advantages for validation and testing.

Pilot Implementation

Deploying the system in a limited area to assess performance.

Benefits in risk reduction before full-scale roll-out.

Preparing for Change

Change Management

Building a culture open to system changes.

Strategies for managing resistance to new systems.

Training Programs

Designing training to ensure users are proficient.

Role of continuous learning and support in successful implementation.

Communication Planning

Keeping stakeholders informed throughout implementation.

Techniques for clear, transparent communication.

Testing and Quality Assurance

Importance of Testing

Types of testing (e.g., unit testing, integration testing, user acceptance testing).

Ensuring reliability and performance before going live.

User Acceptance Testing (UAT)

Importance of user validation in real-world scenarios.

Collecting feedback and refining the system.

Quality Control Measures

Defining benchmarks for performance and user satisfaction.

Implementing feedback loops for continuous improvement.

System Go-Live and Rollout

Final Preparations

Verifying system functionality and security.

Setting up data migration and backup processes.

Executing the Rollout

Following a clear, documented go-live strategy.

Monitoring performance and managing user inquiries.

Post-Implementation Support

Helpdesk and technical support plans.

Importance of rapid response to issues post-rollout.

Evaluation and Monitoring

Assessing System Performance

Metrics to evaluate functionality, speed, and reliability.

Gathering quantitative and qualitative data.

User Feedback and Adaptations

Collecting user feedback to gauge satisfaction.

Planning updates or modifications based on real user needs.

Maintenance and Continuous Improvement

Establishing a maintenance schedule for ongoing reliability.

Identifying opportunities for system enhancement.

Case Studies and Best Practices

Examples of Successful Implementations

Brief case studies highlighting varied approaches (e.g., phased, pilot).

Lessons Learned

Common challenges and how to overcome them.

Best practices for seamless implementation.

Conclusion

Recap of the critical steps in systems implementation−

Emphasis on the strategic importance of careful planning and execution.

Final thoughts on the role of flexibility and adaptability in successful implementations.

System Analysis & Design - System Planning

What is Requirements Determination?

A requirement is a vital feature of a new system which may include processing or capturing of data, controlling the activities of business, producing information and supporting the management.

Requirements determination involves studying the existing system and gathering details to find out what are the requirements, how it works, and where improvements should be made.

Major Activities in requirement Determination

Requirements Anticipation

It predicts the characteristics of system based on previous experience which include certain problems or features and requirements for a new system.

It can lead to analysis of areas that would otherwise go unnoticed by inexperienced analyst. But if shortcuts are taken and bias is introduced in conducting the investigation, then requirement Anticipation can be half-baked.

Requirements Investigation

It is studying the current system and documenting its features for further analysis.

It is at the heart of system analysis where analyst documenting and describing system features using fact-finding techniques, prototyping, and computer assisted tools.

Requirements Specifications

It includes the analysis of data which determine the requirement specification, description of features for new system, and specifying what information requirements will be provided.

It includes analysis of factual data, identification of essential requirements, and selection of Requirement-fulfillment strategies.

Information Gathering Techniques

The main aim of fact finding techniques is to determine the information requirements of an organization used by analysts to prepare a precise SRS understood by user.

Ideal SRS Document should −

- be complete, Unambiguous, and Jargon-free.

- specify operational, tactical, and strategic information requirements.

- solve possible disputes between users and analyst.

- use graphical aids which simplify understanding and design.

There are various information gathering techniques −

Interviewing

Systems analyst collects information from individuals or groups by interviewing. The analyst can be formal, legalistic, play politics, or be informal; as the success of an interview depends on the skill of analyst as interviewer.

It can be done in two ways −

Unstructured Interview − The system analyst conducts question-answer session to acquire basic information of the system.

Structured Interview − It has standard questions which user need to respond in either close (objective) or open (descriptive) format.

Advantages of Interviewing

This method is frequently the best source of gathering qualitative information.

It is useful for them, who do not communicate effectively in writing or who may not have the time to complete questionnaire.

Information can easily be validated and cross checked immediately.

It can handle the complex subjects.

It is easy to discover key problem by seeking opinions.

It bridges the gaps in the areas of misunderstandings and minimizes future problems.

Questionnaires

This method is used by analyst to gather information about various issues of system from large number of persons.

There are two types of questionnaires −

Open-ended Questionnaires − It consists of questions that can be easily and correctly interpreted. They can explore a problem and lead to a specific direction of answer.

Closed-ended Questionnaires − It consists of questions that are used when the systems analyst effectively lists all possible responses, which are mutually exclusive.

Advantages of questionnaires

It is very effective in surveying interests, attitudes, feelings, and beliefs of users which are not co-located.

It is useful in situation to know what proportion of a given group approves or disapproves of a particular feature of the proposed system.

It is useful to determine the overall opinion before giving any specific direction to the system project.

It is more reliable and provides high confidentiality of honest responses.

It is appropriate for electing factual information and for statistical data collection which can be emailed and sent by post.

Review of Records, Procedures, and Forms

Review of existing records, procedures, and forms helps to seek insight into a system which describes the current system capabilities, its operations, or activities.

Advantages

It helps user to gain some knowledge about the organization or operations by themselves before they impose upon others.

It helps in documenting current operations within short span of time as the procedure manuals and forms describe the format and functions of present system.

It can provide a clear understanding about the transactions that are handled in the organization, identifying input for processing, and evaluating performance.

It can help an analyst to understand the system in terms of the operations that must be supported.

It describes the problem, its affected parts, and the proposed solution.

Observation

This is a method of gathering information by noticing and observing the people, events, and objects. The analyst visits the organization to observe the working of current system and understands the requirements of the system.

Advantages

It is a direct method for gleaning information.

It is useful in situation where authenticity of data collected is in question or when complexity of certain aspects of system prevents clear explanation by end-users.

It produces more accurate and reliable data.

It produces all the aspect of documentation that are incomplete and outdated.

Joint Application Development (JAD)

It is a new technique developed by IBM which brings owners, users, analysts, designers, and builders to define and design the system using organized and intensive workshops. JAD trained analyst act as facilitator for workshop who has some specialized skills.

Advantages of JAD

It saves time and cost by replacing months of traditional interviews and follow-up meetings.

It is useful in organizational culture which supports joint problem solving.

Fosters formal relationships among multiple levels of employees.

It can lead to development of design creatively.

It Allows rapid development and improves ownership of information system.

Secondary Research or Background Reading

This method is widely used for information gathering by accessing the gleaned information. It includes any previously gathered information used by the marketer from any internal or external source.

Advantages

It is more openly accessed with the availability of internet.

It provides valuable information with low cost and time.

It act as forerunner to primary research and aligns the focus of primary research.

It is used by the researcher to conclude if the research is worth it as it is available with procedures used and issues in collecting them.

Feasibility Study

Feasibility Study can be considered as preliminary investigation that helps the management to take decision about whether study of system should be feasible for development or not.

It identifies the possibility of improving an existing system, developing a new system, and produce refined estimates for further development of system.

It is used to obtain the outline of the problem and decide whether feasible or appropriate solution exists or not.

The main objective of a feasibility study is to acquire problem scope instead of solving the problem.

The output of a feasibility study is a formal system proposal act as decision document which includes the complete nature and scope of the proposed system.

Steps Involved in Feasibility Analysis

The following steps are to be followed while performing feasibility analysis −

Form a project team and appoint a project leader.

Develop system flowcharts.

Identify the deficiencies of current system and set goals.

Enumerate the alternative solution or potential candidate system to meet goals.

Determine the feasibility of each alternative such as technical feasibility, operational feasibility, etc.

Weight the performance and cost effectiveness of each candidate system.