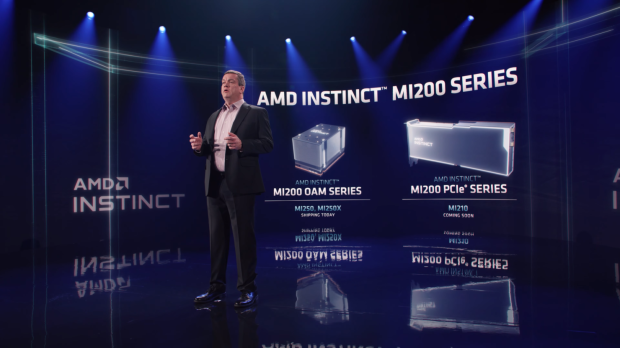

AMD will be introducing its next-gen CDNA 2-based Instinct MI200 series cards in the coming months, and there's now some more news on the Instinct MI210, which uses a single graphics compute die (GCD).

The new AMD Instinct MI210 has been teased with the new CDNA 2 architecture and next-gen "Aldebaran" GPU, which will pack some serious GPU horsepower. In BabelStream with HIP, the MI210 is around 40% faster than the MI100.

This is according to George Markomanolis, an engineer working on the upcoming LUMI supercomputer and lead HPC scientist at CSC, who got remote access to the AMD Instinct MI210. Its single GCD has 104 of the 128 CUs on a full Aldebaran die enabled, for 6656 stream processors, while the higher-end MI250X has 110 CUs enabled per GPU die, for 7040 stream processors per die.

AMD's upcoming Instinct MI210 will have the Aldebaran GPU with 64GB of HBM2e memory, with the flagship Instinct MI250X packing a juicy 128GB of HBM2e memory. As for compute performance, the Aldebaran-based Instinct MI210 should have around 44-46 TFLOPS of FP32 compute performance. Its 64GB of HBM2e memory sits on a 4096-bit memory interface at 3.2 Gbps per pin, meaning we're in for a huge 1.6TB/sec of memory bandwidth.
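The 1.6TB/sec figure follows directly from the bus width and per-pin data rate quoted above. Here is a minimal sketch of that arithmetic; the function name `hbm_bandwidth_gb_per_s` is made up for illustration, and the result is a peak theoretical number, not a measured one.

```python
def hbm_bandwidth_gb_per_s(bus_width_bits: int, pin_rate_gbps: float) -> float:
    """Peak theoretical bandwidth in GB/s.

    Each pin carries pin_rate_gbps gigabits per second, so the whole bus
    moves bus_width_bits * pin_rate_gbps gigabits per second; dividing by 8
    converts bits to bytes.
    """
    return bus_width_bits * pin_rate_gbps / 8


# Figures quoted in the article for the Instinct MI210.
mi210_bw = hbm_bandwidth_gb_per_s(bus_width_bits=4096, pin_rate_gbps=3.2)
print(f"MI210 peak bandwidth: {mi210_bw:.1f} GB/s")  # 1638.4 GB/s
```

That 1638.4 GB/s is the "1.6TB/sec" in the text, rounded down.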

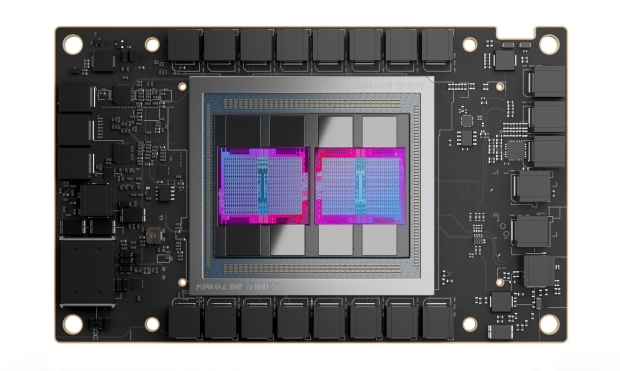

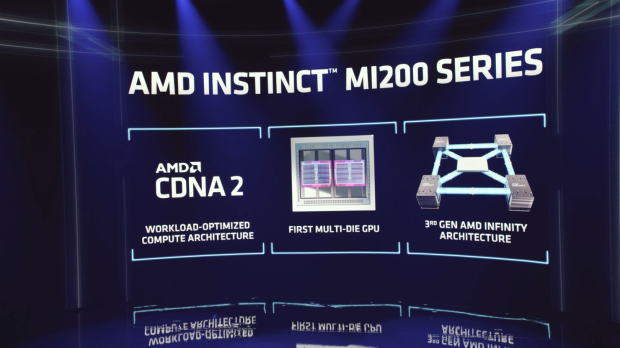

AMD's first MCM GPU is now here in the form of the GPU codenamed Aldebaran, offering up 2 x GPU chiplets and an enormous 128GB of ultra-fast HBM2e memory. There's an incredible amount of GPU horsepower, memory bandwidth, and so much more going on inside of the AMD Instinct MI200.

Inside, the new Aldebaran GPU has two dies: one secondary, and one primary. Each of the GPU dies has 8 Shader Engines, for a total of 16 Shader Engines. Each Shader Engine has 16 Compute Units with full-rate FP64, packed FP32, and a 2nd Generation Matrix Engine for FP16 and BF16 operations.

Each of the GPU dies has 128 compute units or 8192 stream processors in its full form. The MI250X enables 110 compute units per die, which means the Aldebaran GPU has a monster amount of compute: 220 compute units, and an even more monstrous 14,080 stream processors. The Aldebaran GPU is also powered by a brand new XGMI interconnect, with each of the GPU chiplets featuring a VCN 2.6 engine and main I/O controller.
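The unit counts quoted in this article all line up with 64 stream processors per compute unit (6656 / 104, 7040 / 110 and 8192 / 128 all equal 64). The sketch below reproduces the figures under that assumption; the constant and function names are illustrative only.

```python
SHADER_ENGINES_PER_DIE = 8      # from the article
CUS_PER_SHADER_ENGINE = 16      # from the article
STREAM_PROCESSORS_PER_CU = 64   # implied by the article's CU and SP counts


def stream_processors(cus_per_die: int, dies: int = 1) -> int:
    """Total stream processors for a part with `cus_per_die` enabled CUs on each die."""
    return cus_per_die * dies * STREAM_PROCESSORS_PER_CU


# A fully enabled Aldebaran die: 8 Shader Engines x 16 CUs.
full_die_cus = SHADER_ENGINES_PER_DIE * CUS_PER_SHADER_ENGINE

configs = {
    "Full Aldebaran die": (full_die_cus, 1),
    "MI210 (1 die)": (104, 1),
    "MI250X (2 dies)": (110, 2),
}

for name, (cus, dies) in configs.items():
    total_cus = cus * dies
    print(f"{name}: {total_cus} CUs, {stream_processors(cus, dies)} stream processors")
```

The full die works out to 128 CUs and 8192 stream processors, the MI210 to 6656, and the dual-die MI250X to 220 CUs and 14,080.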

AMD is using eight 1024-bit memory interfaces, one per HBM2e stack, for a total of a huge 8192-bit memory bus. Each stack is built from 2GB HBM2e DRAM dies, for a total of 16GB of ultra-fast HBM2e memory per stack.

There are 8 stacks in total, so 8 x 16GB = 128GB of ultra-fast HBM2e memory on the Aldebaran-based AMD Instinct MI200. NVIDIA doesn't come close to this, with its A100 featuring a total of 80GB of HBM2e memory. The Aldebaran-based AMD Instinct MI200 also has a huge 3.2TB/sec of memory bandwidth, which NVIDIA can't compete with, as the A100 only has 2TB/sec of memory bandwidth.
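To put the comparison in numbers, here is a rough sketch using the per-stack figures from the article. It assumes the MI200 runs its HBM2e at the same 3.2 Gbps per pin quoted for the MI210, and it takes the A100's 2TB/sec as a round 2000 GB/s; both are simplifications rather than confirmed specifications.

```python
STACKS = 8
GB_PER_STACK = 16
BITS_PER_STACK = 1024
PIN_RATE_GBPS = 3.2  # assumption: same per-pin rate as quoted for the MI210

A100_CAPACITY_GB = 80
A100_BANDWIDTH_GB_S = 2000.0  # the article's "2TB/sec", taken as a round number

capacity_gb = STACKS * GB_PER_STACK            # total HBM2e capacity
bus_width_bits = STACKS * BITS_PER_STACK       # total memory bus width
bandwidth_gb_s = bus_width_bits * PIN_RATE_GBPS / 8  # bits -> bytes

print(f"Capacity:  {capacity_gb} GB ({capacity_gb / A100_CAPACITY_GB:.2f}x the A100)")
print(f"Bus width: {bus_width_bits}-bit")
print(f"Bandwidth: {bandwidth_gb_s:.1f} GB/s "
      f"({bandwidth_gb_s / A100_BANDWIDTH_GB_S:.2f}x the A100)")
```

Under these assumptions the MI200 comes out at roughly 1.6x the A100 on both capacity and peak memory bandwidth.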

- AMD CDNA 2 architecture - 2nd Gen Matrix Cores accelerating FP64 and FP32 matrix operations, delivering up to 4X the peak theoretical FP64 performance vs. AMD previous-gen GPUs.

- Leadership Packaging Technology - Industry-first multi-die GPU design with 2.5D Elevated Fanout Bridge (EFB) technology delivers 1.8X more cores and 2.7X higher memory bandwidth vs. AMD previous-gen GPUs, offering the industry's best aggregate peak theoretical memory bandwidth at 3.2 terabytes per second.

- 3rd Gen AMD Infinity Fabric technology - Up to 8 Infinity Fabric links connect the AMD Instinct MI200 with 3rd Gen EPYC CPUs and other GPUs in the node to enable unified CPU/GPU memory coherency and maximize system throughput, allowing for an easier on-ramp for CPU codes to tap the power of accelerators.
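The "1.8X more cores" and "2.7X higher memory bandwidth" claims can be sanity-checked against the previous-gen Instinct MI100. The MI100 figures below (120 compute units, 1228.8 GB/s) are not from this article; they are assumed from AMD's public MI100 specifications, so treat this as a rough check rather than AMD's own methodology.

```python
# Assumed previous-gen figures (AMD Instinct MI100), not stated in this article.
MI100_CUS = 120
MI100_BANDWIDTH_GB_S = 1228.8

# MI250X figures from the article: 220 CUs, 8192-bit bus at 3.2 Gbps per pin.
MI250X_CUS = 220
MI250X_BANDWIDTH_GB_S = 8192 * 3.2 / 8  # bits -> bytes, 3276.8 GB/s

core_ratio = MI250X_CUS / MI100_CUS
bandwidth_ratio = MI250X_BANDWIDTH_GB_S / MI100_BANDWIDTH_GB_S

print(f"Cores:     {core_ratio:.1f}X")       # 1.8X
print(f"Bandwidth: {bandwidth_ratio:.1f}X")  # 2.7X
```

With those inputs, both ratios round to the numbers AMD quotes.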

Performance-wise, NVIDIA shouldn't be taking this lightly -- AMD has multiple record wins with the Instinct MI200 in the HPC market over NVIDIA's flagship A100. There are performance gains of up to 3x or more on the MI200 over the A100, which is mighty, mighty impressive.

AMD will be offering its Aldebaran GPU in 3 different configurations:

- OAM only: MI250 and MI250X, plus a dual-slot PCIe MI200 card

- MI250X: 14,080 cores and 383 TFLOPS of FP16 compute performance

- MI250: 13,312 cores and 362 TFLOPS of FP16 compute performance

- 128GB of HBM2e memory on both the MI250X and MI250

Forrest Norrod, senior vice president and general manager, Data Center and Embedded Solutions Business Group, AMD, explains: "AMD Instinct MI200 accelerators deliver leadership HPC and AI performance, helping scientists make generational leaps in research that can dramatically shorten the time between initial hypothesis and discovery."

"With key innovations in architecture, packaging and system design, the AMD Instinct MI200 series accelerators are the most advanced data center GPUs ever, providing exceptional performance for supercomputers and data centers to solve the world's most complex problems."